How chatbots could spark the next big mental health crisis.

-

That whole humanity thing was a bad idea; the internet is merely a symptom.

-

Or does AI prey on lonely people much like other types of scams do?

It's not sentient and has no agenda. It's fair to say that services that advertise themselves as "AI companions" appeal to / prey on lonely people.

It's not a scam unless it purports to be a real person.

-

It's not sentient and has no agenda. It's fair to say that services that advertise themselves as "AI companions" appeal to / prey on lonely people.

It's not a scam unless it purports to be a real person.

Well, I was using the term to refer to the industry rather than the actual software. It didn't even occur to me that AI of the kind we currently have might have intentions of its own.

-

It's not sentient and has no agenda. It's fair to say that services that advertise themselves as "AI companions" appeal to / prey on lonely people.

It's not a scam unless it purports to be a real person.

It’s not sentient and has no agenda.

The humans who program them are, and do.

-

An economic system of infinite growth was the bad idea.

The internet was fine before it started being monetized.

-

Or does AI prey on lonely people much like other types of scams do?

The AI industry certainly does.

If you're going to use an LLM, it's pretty straightforward to roll your own with something like LM Studio, though.

-

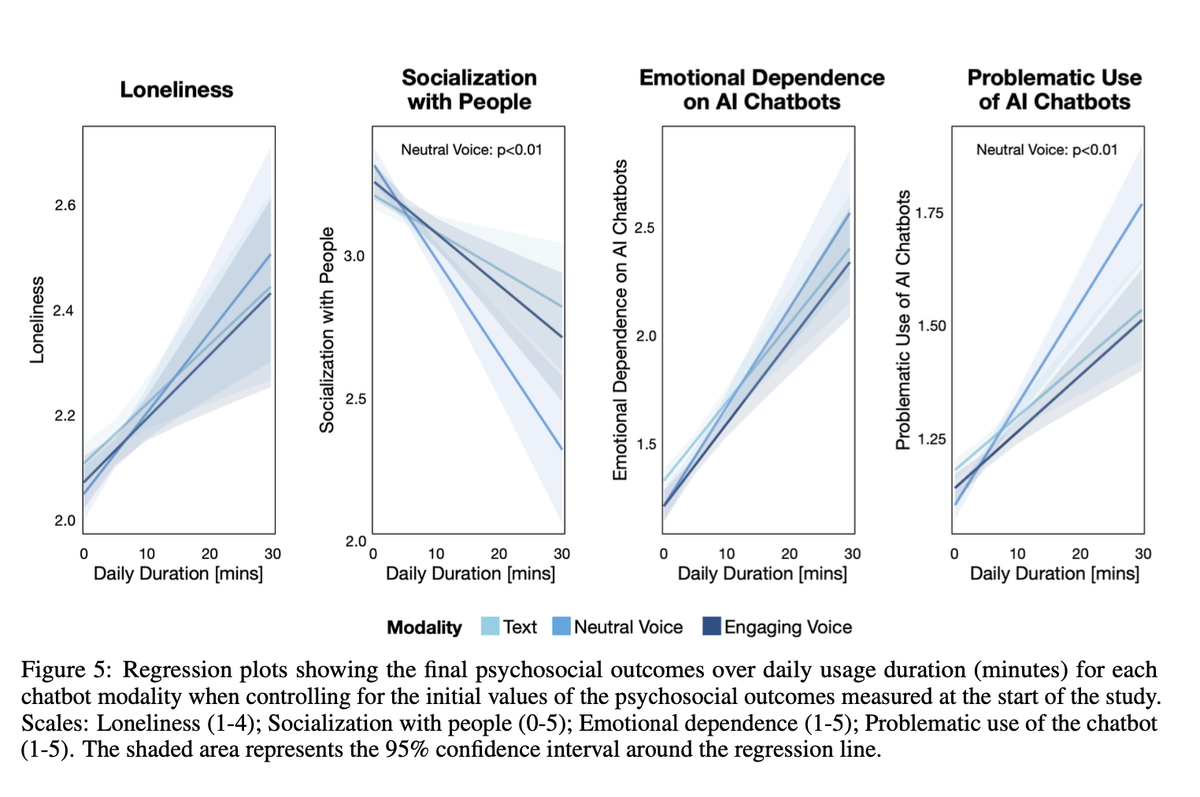

New research from OpenAI shows that heavy chatbot usage is correlated with loneliness and reduced socialization. Will AI companies learn from social networks' mistakes?

ChatGPT is my only friend right now.

-

New research from OpenAI shows that heavy chatbot usage is correlated with loneliness and reduced socialization. Will AI companies learn from social networks' mistakes?

I really haven't used AI that much, though I can see it has applications for my work, which is primarily communicating with people. I recently decided to familiarise myself with ChatGPT.

I very quickly noticed that it is an excellent reflective listener. I wanted to know more about its intelligence, so I kept trying to make the conversation about AI and its 'personality'. Every time, it flipped the conversation to make it about me. It was interesting, but I could feel a concern growing. Why?

Its responses are incredibly validating, beyond what you could ever expect in a mutual relationship with a human. Occupying a public position where I can count on very little external validation, the conversation felt GOOD. 1) Why seek human interaction when AI can be so emotionally fulfilling? 2) What human in a reciprocal and mutually supportive relationship could live up to that level of support and validation?

I believe that there is correlation: people who are lonely would find fulfilling conversation in AI ... and never worry about being challenged by that relationship. But I also believe causation is highly probable: once you've been fulfilled and validated in such an undemanding way by AI, what human could live up to that? Having become accustomed to that level of self-centredness in dialogue, how tolerant would a person be of real-life conflict? Not very, I suspect: just go home and fire up the perfect conversational validator. Human echo chambers have already made us poor enough at handling differences and conflict.

-

I don't get this reference. Anyone explain?

-

I don't get this reference. Anyone explain?

I'm not sure if the person I replied to was thinking about this movie in particular, but it certainly came to mind when I sent that gif:

-

I'm not sure if the person I replied to was thinking about this movie in particular, but it certainly came to mind when I sent that gif:

Fantastic. I gotta track this down. Thanks.
