The vibecoders are becoming sentient

-

I don't think it has much to do with how "complex or not" it is, but rather how common it is.

It can completely fail on very simple things that are just a bit obscure, because it has too little training data on them.

And it can do very complex things if there's enough training data on those things.

Yes exactly.

"Implement a first order lowpass filter in C"

LLM has no issue.

"Implement a string reversal function in Wren"

LLM proceeds to output an unholy mix of Python and JavaScript.

Even though the second task is trivial compared to the first, LLMs have almost no training data on Wren (an obscure semi-dead language).

-

I'm not a programmer by any stretch, but what LLMs have been great for is getting my homelab set up. I've even done some custom UI stuff for work that talks to open source backend things we run. I think I've actually learned a fair bit from the experience, and if I had to start over I'd be able to do way, way more on my own than I was able to when I first started. It's not perfect, and as others have mentioned, I have broken things and had to start projects completely from scratch, but the second time through I knew where the pitfalls were, and I'm getting better at knowing what to ask for and telling it what to avoid.

I'm not a programmer, but I'm not trying to ship anything either. In general I'm a pretty anti-AI guy, but for the uninitiated who want to get started with a homelab, I'd say it's damn near instrumental for a quick turnaround and a fairly decent educational tool.

As a programmer I've found it infinitely more useful for troubleshooting and setting things up than for programming. When my Arch Linux install nukes itself again, I know I'll use an LLM; when I find a random old device or game at the thrift store and want to get it to work, I'll use an LLM, etc. For programming I only use the IntelliJ line completion models, since they're smart enough to see patterns for the dumb busywork but don't try to outsmart me most of the time, which would only cost more time.

-

This post did not contain any content.

I refuse to believe this post isn't satire, because holy shit.

-

How do humans answer a question? I would argue that, for many people, the answer for most topics would be "I am repeating what I was taught/learned/read."

Even children aren't expected to just repeat verbatim what they were taught. When kids are being taught verbs, they're shown the pattern: "I run, you run, he runs; I eat, you eat, he eats." They're told that there's a pattern, and it's that the "he/she/it" version has an "s" at the end. They now understand some of how verbs work in English, and can try to apply that pattern. But even when it's spotting a pattern and applying the right rule, there's still an element of understanding involved. You have to recognize that this is a "verb" situation, and that you should apply the bit about "add an 's' if it's he/she/it".

An LLM, by contrast, never learns any rules. Instead it ingests every single verb that has ever been recorded in English, and builds up a probability table for what comes next.
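To make the rule-versus-table contrast concrete, here is a toy Python sketch. `conjugate_by_rule` applies the classroom rule (add an "s" after he/she/it), while `build_bigram_table` and `predict_next` only count which word followed which in a tiny corpus and return the most frequent follower. All function names and the corpus are made up for illustration, and a word-level bigram counter is a huge simplification: real LLMs don't store a literal lookup table, they learn neural-network weights over subword tokens and can generalize somewhat beyond sequences they have seen verbatim.

```python
from collections import Counter, defaultdict
from typing import Dict, List, Optional


def conjugate_by_rule(pronoun: str, verb: str) -> str:
    """Apply the classroom rule: third-person singular adds an 's'.

    Deliberately naive: ignores irregular verbs ("have" -> "has") and
    spelling changes ("go" -> "goes").
    """
    if pronoun.lower() in {"he", "she", "it"}:
        return f"{pronoun} {verb}s"
    return f"{pronoun} {verb}"


def build_bigram_table(corpus: List[str]) -> Dict[str, Counter]:
    """Count, for each word, how often each other word directly follows it."""
    table: Dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, following in zip(words, words[1:]):
            table[current][following] += 1
    return table


def predict_next(table: Dict[str, Counter], word: str) -> Optional[str]:
    """Return the most frequently observed follower of `word`, or None if unseen."""
    followers = table.get(word.lower())  # .get does not insert into a defaultdict
    if not followers:
        return None
    return followers.most_common(1)[0][0]


if __name__ == "__main__":
    # Hypothetical toy corpus; note that "it" never appears.
    corpus = ["I run fast", "he runs fast", "he runs home", "he eats lunch", "she eats lunch"]
    table = build_bigram_table(corpus)
    print(conjugate_by_rule("it", "run"))  # it runs  (the rule covers an unseen pronoun)
    print(predict_next(table, "he"))       # runs     (seen twice vs. "eats" once)
    print(predict_next(table, "it"))       # None     (no data for "it" at all)
```

The rule handles "it" even though no example sentence used it, while the counter has nothing to say about "it" because it never saw it. That is the same "too little training data" failure mentioned at the top of the thread, just in miniature.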

but most people are not taught WHY 2+2=4

Everybody is taught why 2+2=4. They normally use apples. They say if I have 2 apples and John has 2 apples, how many apples are there in total? It's not simply memorizing that when you see the token "2" followed by "+" then "2" then "=" that the next likely token is a "4".

If you watch little kids doing that kind of math, they do understand what's happening because they're often counting on their fingers. That signals that there's a level of understanding that's different from simply pattern matching.

Sure, there's a lot of pattern matching in the way human brains work too. But fundamentally, there's also at least some amount of "understanding". One example where humans do pattern matching is idioms. A lot of people just repeat an idiom without understanding what it really means. But they do it in order to convey a message. They don't do it just because it sounds like the most likely thing to be said next in the current conversation.

I wasn't attempting to attack what you said, merely pointing out that once you cross the line into philosophy things get really murky really fast.

You assert that LLMs aren't taught the rules, but every word is not just a word. The tokenization process includes part-of-speech tagging, predicate tagging, etc. The 'rules' that you are talking about are actually encapsulated in the tokenization process. The tokenization process for LLMs, at least as of a few years ago when I read a textbook on building LLMs, is predicated on the rules of the language. Parts of speech, syntax information, word commonality, etc. are all major parts of the ingestion process before training is done. They may not have had a teacher giving them the 'rules', but that does not mean the rules were not included in the training.

And circling back to the philosophical question of what it means to "learn" or "know" something, you actually exhibited what I was talking about in your response on the math question. Putting two piles of apples on a table and counting them to find the total is a naïve application of the principles of addition to a situation, but it does not describe why addition operates the way it does. That answer does not get discussed until Number Theory in upper-division math courses in college. If you have never taken that course or studied Number Theory independently, you do not know 'why' adding two numbers together gives you the total; you know 'that' adding two numbers together gives you the total, and that is enough for your life.

Learning, and by extension knowledge, have many forms and processes that certainly do not look the same by comparison. Learning as a child is unrecognizable when compared directly to learning as an adult, especially in our society. Non-sapient animals all learn and have knowledge, but the processes for it are unintelligible to most people, save those who study animal intelligence. So to say the LLM does or does not "know" anything is to assert that its "knowing" or "learning" will be recognizable and intelligible to the layman. Yes, I know that it is based on statistical mechanics; I studied those in my BS in Applied Mathematics. I know it is selecting the most likely word to follow what has been generated. The thing is, I recognize that I am doing exactly the same process right now, typing this message. I am deciding what sequence of words and tones of language will be approachable and relatable while still conveying the argument I wish to levy. Did I fail? Most certainly. I'm a pedantic neurodivergent piece of shit having a spirited discussion online; I am bound to fail because I know nothing about my audience aside from the prompt you gave me to respond to. So I pose the question: when behaviors are symmetric and outcomes are similar, how can an attribute be applied to one but not the other?

-

lol it did save me from my first `rm -rf /`

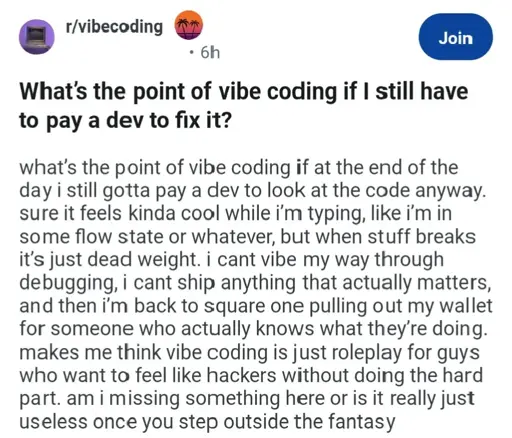

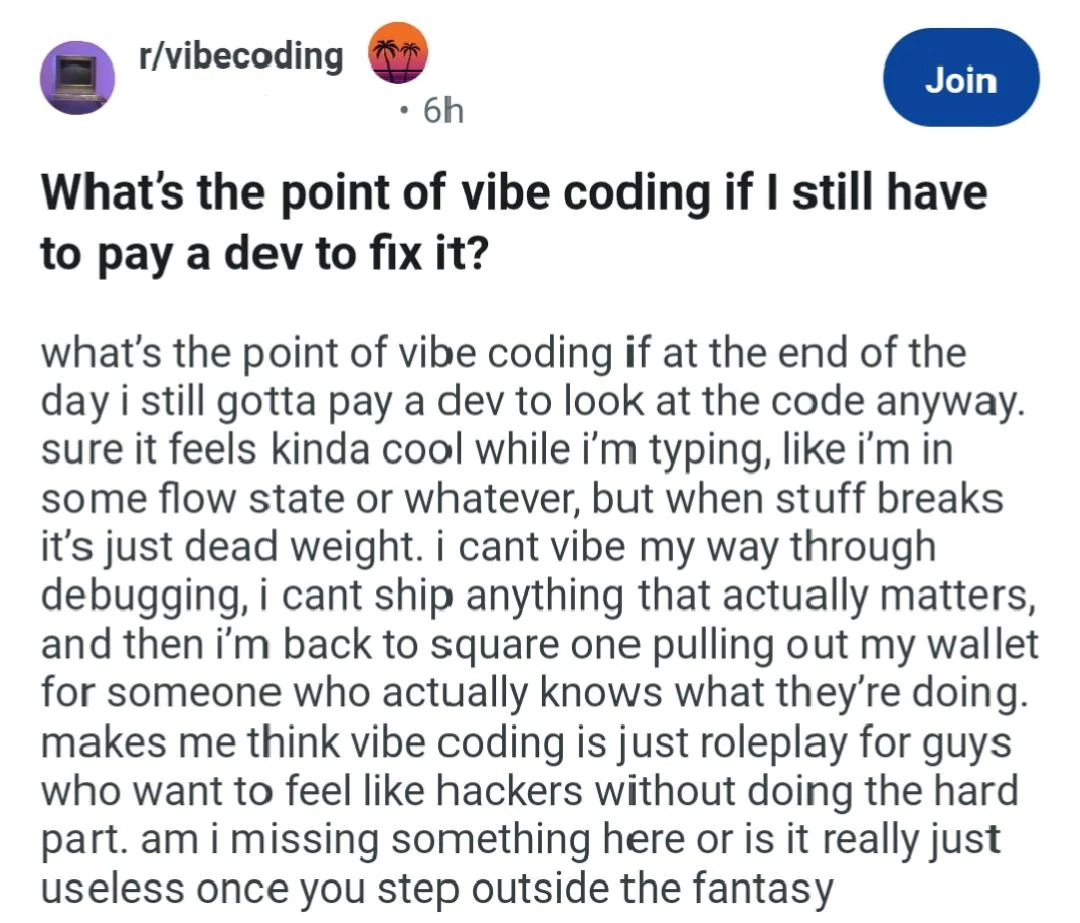

If I was 14 and had an interest in coding, the promise of 'vibe coding' would absolutely reel me in. Most of us here on Lemmy are more tech savvy and older, so it's easy to forget that we were asking Jeeves for .bat commands and borrowing* from Planet Source Code.

But yeah, it feels like satire. Haha.

-

bro thought software engineering is just $20/mo chatgpt

-

I feel you, and I agree that as a learning tool that's probably how it's being used (whether that's good or bad is a different topic), but the fact that they immediately talk about having to pay a dev makes it sound like someone who isn't trying to learn but trying to make a product.

-

Lol I remember when I was around pre-school / kindergarten age and I was asking family members how to spell words so I could type into a Windows 3.1 "run program" dialog box "make sonic game".

-


I will usually google that kind of thing first (to save the rainforests)... Often I can find something that way; otherwise I might try an LLM.

Google has become so shit though