Brian Eno: “The biggest problem about AI is not intrinsic to AI. It’s to do with the fact that it’s owned by the same few people”

-

This post did not contain any content.

The problem with AI is that it pirates everyone’s work and then repackages it as its own and enriches the people that did not create the copyrighted work.

-

And also it's using machines to catch up to living creation and evolution, badly.

A bit similar to how the Soviet system was trying to catch up to Western societies that were in no way virtuous, but living and vibrant.

That's expensive, and that's bad, and that's inefficient. The only subjective advantage is that power is all it requires.

-

The problem with AI is that it pirates everyone’s work and then repackages it as its own and enriches the people that did not create the copyrighted work.

I mean, it's our work, so the result should belong to the people.

-

and here you are, downvoting my valid point

Wasn't me actually.

valid point

You weren't really making a point in line with what I was saying.

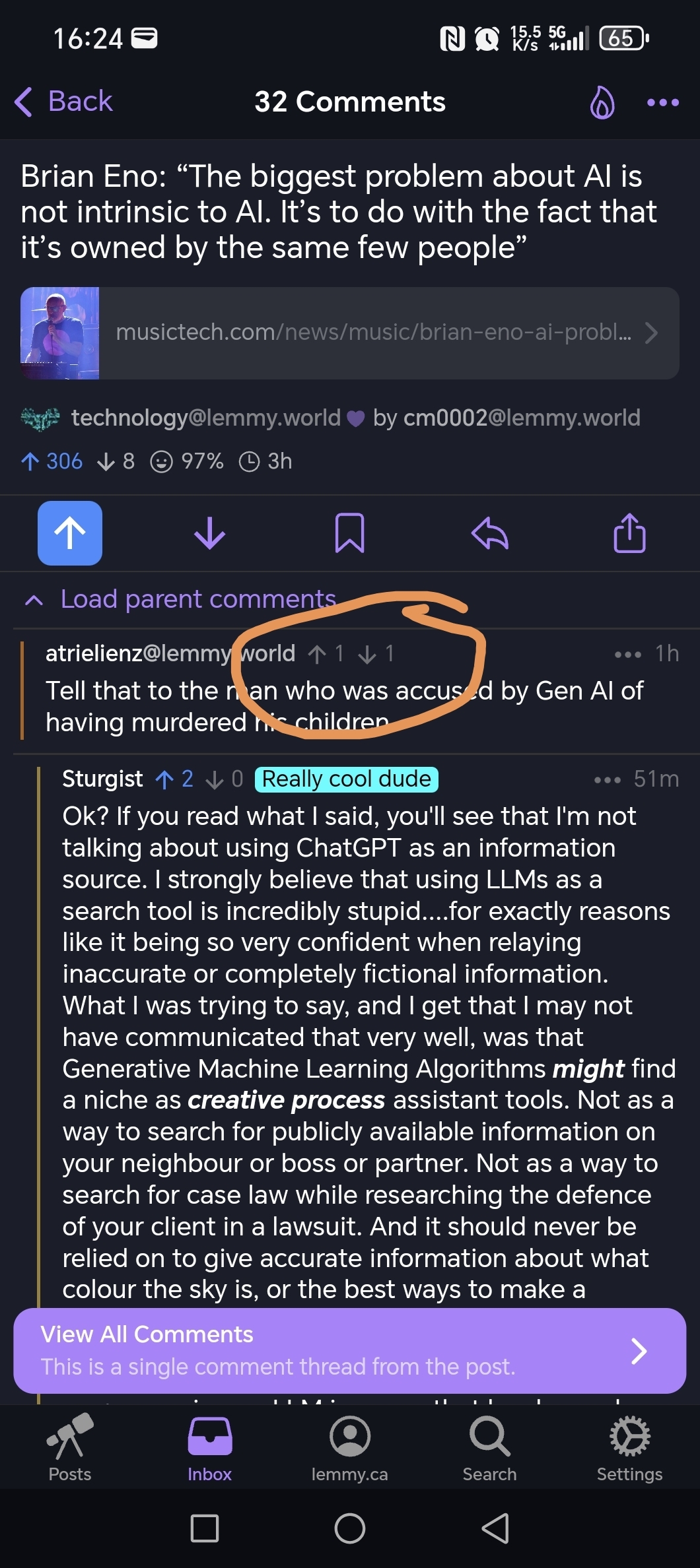

regardless of whether we view it as a reliable information source, that's what it is being marketed as and results like this harm both the population using it, and the people who have found good uses for it. And no, I don't actually agree that it's good for creative processes as assistance tools and a lot of that has to do with how you view the creative process and how I view it differently. Any other tool at the very least has a known quantity of what went into it and Generative AI does not have that benefit and therefore is problematic.

This is a really valid point, and if you had taken the time to actually write this out in your first comment, instead of "Tell that to the guy that was expecting factual information from a hallucination generator!" I wouldn't have reacted the way I did. And we'd be having a constructive conversation right now. Instead you made a snide remark, seemingly (personal opinion here, I probably can't read minds) intending it as an invalidation of what I was saying, and then being smug about my taking offence to you not contributing to the conversation and instead being kind of a dick.

Not everything has to have a direct correlation to what you say in order to be valid or add to the conversation. You have a habit of ignoring parts of the conversation going around you in order to feel justified in whatever statements you make regardless of whether or not they are based in fact or speak to the conversation you're responding to and you are also doing the exact same thing to me that you're upset about (because why else would you go to a whole other post to "prove a point" about downvoting?). I'm not going to even try to justify to you what I said in this post or that one because I honestly don't think you care.

It wasn't you (you claim), but it could have been and it still might be you on a separate account. I have no way of knowing.

All in all, I said what I said. We will not get the benefits of Generative AI if we don't 1. deal with the problems that are coming from it, and 2. Stop trying to shoehorn it into everything. And that's the discussion that's happening here.

-

Would you say your research is evidence that the o1 model was built using data/algorithms taken from OpenAI via industrial espionage (like Sam Altman is purporting without evidence)? Or is it just likely that they came upon the same logical solution?

Not that it matters, of course! Just curious.

Well, OpenAI has clearly scraped everything that is scrapable on the internet, copyrights be damned. I haven't actually used DeepSeek enough to make a strong analysis, but I suspect Sam is just mad they got beat at their own game.

The real innovation that isn't commonly talked about is the invention of Multi-head Latent Attention (MLA), which is what drives the dramatic performance increases in both memory (59x) and computation (6x) efficiency. It's an absolute game changer and I'm surprised OpenAI hasn't released their own MLA model yet.
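For a rough sense of where a memory saving like that could come from, here's a back-of-envelope sketch. It assumes MLA caches one compressed latent vector per token (plus a small positional key) instead of a full key and value for every head. The dimensions and helper names are illustrative assumptions, not confirmed figures for any particular model, so the ratio it prints won't necessarily match the number quoted above.

```python
# Back-of-envelope KV-cache size per token, per layer.
# All dimensions below are assumptions chosen for illustration only.

def mha_cache_elems(n_heads: int, head_dim: int) -> int:
    """Standard multi-head attention caches a full key and a full value per head."""
    return 2 * n_heads * head_dim

def latent_cache_elems(latent_dim: int, rope_dim: int) -> int:
    """A latent-attention cache keeps one compressed vector plus a small positional key."""
    return latent_dim + rope_dim

n_heads, head_dim = 128, 128    # assumed attention shape
latent_dim, rope_dim = 512, 64  # assumed compressed sizes

full = mha_cache_elems(n_heads, head_dim)
compressed = latent_cache_elems(latent_dim, rope_dim)
ratio = full / compressed

print(f"full: {full} values, compressed: {compressed} values, ratio: {ratio:.1f}x")
```

The point is only that the saving scales with how small the cached latent is relative to the full set of per-head keys and values.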

While on the subject of stealing data, I have been of the strong opinion that there is no such thing as copyright when it comes to training data. Humans learn by example and all works are derivative of those that came before, at least to some degree. Thus, if humans can't be accused of using copyrighted text to learn how to write, then AI shouldn't either. Just my hot take that I know is controversial outside of academic circles.

-

But the people with the money for the hardware are the ones training it to put more money in their pockets. That's mostly what it's being trained to do: make rich people richer.

But you can make this argument for anything that is used to make rich people richer. Even something as basic as pen and paper is used everyday to make rich people richer.

Why attack the technology if it's the rich people you are against and not the technology itself?

-

Why is this message not being drilled into the heads of everyone? Sam Altman, go to prison or publish your stolen weights.

-

The problem with being like… super pedantic about definitions, is that you often miss the forest for the trees.

Illegal or not, seems pretty obvious to me that people saying illegal in this thread and others probably mean “unethically”… which is pretty clearly true.

I wasn't being pedantic. It's a very fucking important distinction.

If you want to say "unethical" you say that. Law is an orthogonal concept to ethics. As anyone who's studied the history of racism and sexism would understand.

Furthermore, it's not clear that what Meta did actually was unethical. Ethics is all about how human behavior impacts other humans (or other animals). If a behavior has a direct negative impact that's considered unethical. If it has no impact or positive impact that's an ethical behavior.

What impact did OpenAI, Meta, et al have when they downloaded these copyrighted works? They were not read by humans--they were read by machines.

From an ethics standpoint that behavior is moot. It's the ethical equivalent of trying to measure the environmental impact of a bit traveling across a wire. You can go deep down the rabbit hole and calculate the damage caused by mining copper and laying cables but that's largely a waste of time because it completely loses the narrative that copying a billion books/images/whatever into a machine somehow negatively impacts humans.

It is not the copying of this information that matters. It's the impact of the technologies they're creating with it!

That's why I think it's very important to point out that copyright violation isn't the problem in these threads. It's a path that leads nowhere.

-

AI scrapers illegally harvesting data are destroying smaller and open source projects. Copyright law is not the only victim

https://thelibre.news/foss-infrastructure-is-under-attack-by-ai-companies/

In this case they just need to publish the code as a torrent. You wouldn't set up a crawler if all the data were in a torrent swarm.

-

The biggest problem with AI is that it’s the brute-force solution to complex problems.

Instead of trying to figure out what’s the most power efficient algorithm to do artificial analysis, they just threw more data and power at it.

Besides the fact of how often it’s wrong, by definition, it won’t ever be as accurate or as efficient as doing actual thinking.

It’s the solution you come up with the last day before the project is due cause you know it will technically pass and you’ll get a C.

It's moronic. Currently, decision makers don't really understand what to do with AI and how it will realistically evolve in the coming 10-20 years. So it's getting pushed even into environments with 0-error policies, leading to horrible results and any time savings are completely annihilated by the ensuing error corrections and general troubleshooting. But maybe the latter will just gradually be dropped and customers will be told to just "deal with it," in the true spirit of enshittification.

-

They're not illegally harvesting anything. Copyright law is all about distribution. As much as everyone loves to think that when you copy something without permission you're breaking the law the truth is that you're not. It's only when you distribute said copy that you're breaking the law (aka violating copyright).

All those old school notices (e.g. "FBI Warning") are 100% bullshit. Same for the warning the NFL spits out before games. You absolutely can record it! You just can't share it (or show it to more than a handful of people but that's a different set of laws regarding broadcasting).

I download AI (image generation) models all the time. They range in size from 2GB to 12GB. You cannot fit the petabytes of data they used to train the model into that space. No compression algorithm is that good.
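To put rough numbers on that, here's a quick sketch of the compression ratio a model file would need in order to literally contain its training set. The 1 PB training-set size is an assumption for illustration (the comment only says "petabytes"), and `required_ratio` is a hypothetical helper name.

```python
# How much compression would a model file need to "contain" its training data?
# The 1 PB training-set size is an assumed figure for illustration.

GB = 10**9
PB = 10**15

def required_ratio(training_bytes: int, model_bytes: int) -> float:
    """Compression ratio needed to fit the training data inside the model file."""
    return training_bytes / model_bytes

training_bytes = 1 * PB  # assumed
for model_gb in (2, 12):
    ratio = required_ratio(training_bytes, model_gb * GB)
    print(f"{model_gb} GB model: would need roughly {ratio:,.0f}:1 compression")
```

Under these assumptions the required ratio is in the tens to hundreds of thousands to one, and it only gets larger if the training set is bigger than 1 PB.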

The same is true for LLM, RVC (audio models) and similar models/checkpoints. I mean, think about it: If AI is illegally distributing millions of copyrighted works to end users they'd have to be including it all in those files somehow.

Instead of thinking of an AI model like a collection of copyrighted works think of it more like a rough sketch of a mashup of copyrighted works. Like if you asked a person to make a Godzilla-themed My Little Pony and what you got was that person's interpretation of what Godzilla combined with MLP would look like. Every artist would draw it differently. Every author would describe it differently. Every voice actor would voice it differently.

Those differences are the equivalent of the random seed provided to AI models. If you throw something at a random number generator enough times you could--in theory--get the works of Shakespeare. Especially if you ask it to write something just like Shakespeare. However, that doesn't mean the AI model literally copied his works. It's just making its best guess (it's literally guessing! That's how it works!).
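As a toy illustration of the seed idea (a sketch only, not how any real model is implemented), the snippet below samples words from a made-up probability table. The table, the `generate` function, and the seeds are all hypothetical.

```python
import random

# Toy "model": a fixed probability table over words, made up for illustration.
NEXT_WORD = {"to": 0.5, "be": 0.3, "or": 0.15, "not": 0.05}

def generate(seed: int, length: int = 6) -> list[str]:
    """Sample `length` words; the seed fully determines the sequence."""
    rng = random.Random(seed)
    words = list(NEXT_WORD)
    weights = list(NEXT_WORD.values())
    return rng.choices(words, weights=weights, k=length)

print(generate(seed=1))  # same seed ...
print(generate(seed=1))  # ... same output every time
print(generate(seed=2))  # a different seed will usually give a different sequence
```

The same table produces different outputs depending only on the seed, which is the sense in which the output is a weighted guess rather than a lookup.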

The issue I see is that they are using the copyrighted data, then making money off that data.

-

Not everything has to have a direct correlation to what you say in order to be valid or add to the conversation. You have a habit of ignoring parts of the conversation going around you in order to feel justified in whatever statements you make regardless of whether or not they are based in fact or speak to the conversation you're responding to and you are also doing the exact same thing to me that you're upset about (because why else would you go to a whole other post to "prove a point" about downvoting?). I'm not going to even try to justify to you what I said in this post or that one because I honestly don't think you care.

It wasn't you (you claim), but it could have been and it still might be you on a separate account. I have no way of knowing.

All in all, I said what I said. We will not get the benefits of Generative AI if we don't 1. deal with the problems that are coming from it, and 2. Stop trying to shoehorn it into everything. And that's the discussion that's happening here.

because why else would you go to a whole other post to "prove a point" about downvoting?

It wasn't you (you claim)

I do claim. I have an alt, didn't downvote you there either. Was just pointing out that you were also making assumptions. And it's all comments in the same thread, hardly me going to an entirely different post to prove a point.

We will not get the benefits of Generative AI if we don't 1. deal with the problems that are coming from it, and 2. Stop trying to shoehorn it into everything. And that's the discussion that's happening here.

I agree. And while I personally feel like there's already room for it in some people's workflow, it is very clearly problematic in many ways. As I had pointed out in my first comment.

I'm not going to even try to justify to you what I said in this post or that one because I honestly don't think you care.

I do actually! Might be hard to believe, but I reacted the way I did because I felt your first comment was reductive, and intentionally trying to invalidate and derail my comment without actually adding anything to the discussion. That made me angry because I want a discussion. Not because I want to be right, and fuck you for thinking differently.

If you're willing to talk about your views and opinions, I'd be happy to continue talking. If you're just going to assume I don't care, and don't want to hear what other people think...then just block me and move on.

-

No, Brian Eno, there are many open LLMs already. The problem is people like you who have accumulated too much and now control all the markets/platforms/media.

-

We spend energy on the most useless shit, so why are people suddenly using it as an argument against AI? Have you ever seen someone complaining about Pixar wasting energy to render their movies? Or 3D studios rendering TV ads?

-

They're not illegally harvesting anything. Copyright law is all about distribution. As much as everyone loves to think that when you copy something without permission you're breaking the law the truth is that you're not. It's only when you distribute said copy that you're breaking the law (aka violating copyright).

All those old school notices (e.g. "FBI Warning") are 100% bullshit. Same for the warning the NFL spits out before games. You absolutely can record it! You just can't share it (or show it to more than a handful of people but that's a different set of laws regarding broadcasting).

I download AI (image generation) models all the time. They range in size from 2GB to 12GB. You cannot fit the petabytes of data they used to train the model into that space. No compression algorithm is that good.

The same is true for LLM, RVC (audio models) and similar models/checkpoints. I mean, think about it: If AI is illegally distributing millions of copyrighted works to end users they'd have to be including it all in those files somehow.

Instead of thinking of an AI model like a collection of copyrighted works think of it more like a rough sketch of a mashup of copyrighted works. Like if you asked a person to make a Godzilla-themed My Little Pony and what you got was that person's interpretation of what Godzilla combined with MLP would look like. Every artist would draw it differently. Every author would describe it differently. Every voice actor would voice it differently.

Those differences are the equivalent of the random seed provided to AI models. If you throw something at a random number generator enough times you could--in theory--get the works of Shakespeare. Especially if you ask it to write something just like Shakespeare. However, that doesn't mean the AI model literally copied his works. It's just making its best guess (it's literally guessing! That's how it works!).

This is an interesting argument that I've never heard before. Isn't the question more about whether AI-generated art counts as a "derivative work", though? I don't use AI at all, but from what I've read, they can generate work that includes watermarks from the source data. Would that not strongly imply that these are derivative works?

-

Reading the other comments, it seems there is more than one problem with AI. Probably even some perks as well.

Shucks, another one of these complex issues, huh. Weird how everything you learn something about turns out to have these nuances to it.

-

Idk if it’s the biggest problem, but it’s probably top three.

Other problems could include:

- Power usage

- Adding noise to our communication channels

- AGI fears if you buy that (I don’t personally)

Power usage

I'm generally a huge eco guy, but on power usage in particular I view this largely as a government failure. We have had incredible energy resources that the government has chosen not to implement or has effectively dismantled.

It reminds me a lot of how recycling has been pushed so hard onto the general public instead of any government laws on plastic usage and waste disposal.

It's always easier to wave your hands and blame "society" than it is to hold the actual wealthy and powerful accountable.

-

I see the "AI is using up massive amounts of water" claim being made everywhere lately, but I do not understand it. Do you have a source?

My understanding is this probably stems from people misunderstanding data center cooling systems. Most of these systems are closed loop so everything will be reused. It makes no sense to "burn off" water for cooling.

-

wrong. it's that it's not intelligent. if it's not intelligent, nothing it says is of value. and it has no thoughts, feelings or intent. therefore it can't be artistic. nothing it "makes" is of value either.

-

No, Brian Eno, there are many open LLMs already. The problem is people like you who have accumulated too much and now control all the markets/platforms/media.

Totally right that there are already super impressive open source AI projects.

But Eno doesn't control diddly, and it's odd that you think he does. And I assume he is decently well off, but I doubt he is super rich by most people's standards.