Apple just proved AI "reasoning" models like Claude, DeepSeek-R1, and o3-mini don't actually reason at all. They just memorize patterns really well.

-

NOOOOOOOOO

SHIIIIIIIIIITT

SHEEERRRLOOOOOOCK

The funny thing about this "AI" griftosphere is how grifters will make some outlandish claim and then different grifters will "disprove" it. Plenty of grant/VC money for everybody.

-

What's hilarious/sad is the response to this article over on reddit's "singularity" sub, where the top comments, from people who have obviously never read a research paper all the way through, all trash Apple and claim its researchers don't understand AI or "reasoning". It's a weird cult.

-

The difference between reasoning models and normal models is reasoning models are two steps. To oversimplify it a little, they first prompt "how would you go about responding to this?" and then prompt "write the response".

It's still predicting the most likely thing to come next, but the difference is that it gives the chance for the model to write the most likely instructions to follow for the task, then the most likely result of following the instructions - both of which are much more conformant to patterns than a single jump from prompt to response.
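The two-step idea described above (which the comment itself calls an oversimplification) can be sketched as a tiny pipeline. This is not how Claude, DeepSeek-R1, or o3-mini are actually built; `generate` is a hypothetical stand-in for any prompt-to-text model, and `toy_model` is a canned stub so the sketch runs on its own.

```python
from typing import Callable

# Hypothetical stand-in for a language model: any function mapping a prompt
# string to a completion string. Not a real model API.
Generate = Callable[[str], str]


def two_step_answer(generate: Generate, question: str) -> tuple[str, str]:
    """Sketch of the oversimplified 'plan, then respond' loop."""
    # Step 1: ask how the task should be approached (the "reasoning" trace).
    plan = generate(f"How would you go about responding to this?\n{question}")
    # Step 2: ask for the final response, conditioned on the plan just written.
    answer = generate(f"{question}\nPlan:\n{plan}\nWrite the response.")
    return plan, answer


def toy_model(prompt: str) -> str:
    # Canned stub so the sketch runs without any real model.
    if prompt.startswith("How would you"):
        return "1. Add the two numbers."
    return "4"


plan, answer = two_step_answer(toy_model, "What is 2 + 2?")
print(plan)
print(answer)
```

Each step is still plain next-token prediction; the only difference is that the second call gets to condition on text the first call produced.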

The difference between reasoning models and normal models is reasoning models are two steps,

That's a garbage definition of "reasoning". Someone who is not a grifter would simply call them two-step models (or similar), instead of promoting misleading anthropomorphic terminology.

-

Well - if you want to devolve into argument, you can argue all day long about "what is reasoning?"

You were starting a new argument. Let's stay on topic.

The paper implies "reasoning" is the application of logic. It shows that LRMs are great at copying logic but can't follow simple instructions they haven't seen before.

-

Those particular models. It does not prove the architecture doesn't allow it at all. It's still possible that this is solvable with a different training technique and none of those models are using the right one. That's what they need to prove wrong.

This proves the issue is widespread, not fundamental.

The architecture of these LRMs may make monkeys fly out of my butt. It hasn't been proven that the architecture doesn't allow it.

You are asking to prove a negative. The onus is to show that the architecture can reason. Not to prove that it can't.

-

Even defining reason is hard and becomes a matter of philosophy more than science. For example, apply the same claims to people. Now I've given you something to think about. Or should I say the Markov chain in your head has a new topic to generate thought states for.

By many definitions, reasoning IS just a form of pattern recognition so the lines are definitely blurred.

-

Yeah, I often think about this Rick and Morty cartoon. Grifters are like, "We made an AI ankle!!!" And I'm like, "That's not actually something that people with busted ankles want. They just want to walk. No need for a sentient ankle." It's a really gross distortion of science how everything needs to be "AI" nowadays.

AI is just the new buzzword, just like blockchain was a while ago. Marketing loves these buzzwords because they can get away with charging more if they use them. They don't much care if their product even has it or could make any use of it.

-

I see a lot of misunderstandings in the comments 🫤

This is a pretty important finding for researchers, and it's not obvious by any means. This finding is not showing a problem with LLMs' abilities in general. The issue they discovered is specifically for so-called "reasoning models" that iterate on their answer before replying. It might indicate that the training process is not sufficient for true reasoning.

Most reasoning models are not incentivized to think correctly, and are only rewarded based on their final answer. This research might indicate that's a flaw that needs to be corrected before models can actually reason.
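To make the outcome-only point concrete, here is a toy comparison. The claim that most reasoning models are rewarded only on the final answer is the commenter's; `outcome_reward`, `process_reward`, and `toy_checker` are hypothetical illustrations, not any lab's actual training setup, and the 50/50 weighting is arbitrary.

```python
import operator
from typing import Callable, Sequence


def outcome_reward(steps: Sequence[str], final: str, target: str) -> float:
    # Outcome-only reward: the intermediate steps are never looked at.
    return 1.0 if final == target else 0.0


def process_reward(steps: Sequence[str], final: str, target: str,
                   step_ok: Callable[[str], bool]) -> float:
    # Hypothetical process-style reward: half for the fraction of valid steps,
    # half for getting the final answer right. The 50/50 split is arbitrary.
    if not steps:
        return outcome_reward(steps, final, target)
    step_score = sum(step_ok(s) for s in steps) / len(steps)
    return 0.5 * step_score + 0.5 * outcome_reward(steps, final, target)


OPS = {"+": operator.add, "-": operator.sub}


def toy_checker(step: str) -> bool:
    # Toy validity check for steps shaped like "a op b = c".
    lhs, rhs = step.split("=")
    a, op, b = lhs.split()
    return OPS[op](int(a), int(b)) == int(rhs)


# A trace whose first step is wrong but which still lands on the right answer.
trace = ["2 + 2 = 5", "5 - 1 = 4"]
print(outcome_reward(trace, "4", "4"))               # 1.0: the bad step costs nothing
print(process_reward(trace, "4", "4", toy_checker))  # 0.75: the bad step is penalized
```

Under the outcome-only scheme, a broken chain of steps that happens to reach the right answer scores exactly as well as a sound one, which is the flaw the comment suggests may need correcting.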

Cognitive scientist Douglas Hofstadter (1979) showed reasoning emerges from pattern recognition and analogy-making - abilities that modern AI demonstrably possesses. The question isn't if AI can reason, but how its reasoning differs from ours.

-

Impressive ≠ substantial or beneficial.

These are almost the exact same talking points we used to hear about ‘why would anyone need a home computer?’ Wild how some people can be so consistently short-sighted again and again and again.

What makes you think you’re capable of sentience, when your comments are all cliches and you’re incapable of personal growth or vision or foresight?

-

By many definitions, reasoning IS just a form of pattern recognition so the lines are definitely blurred.

And does it even matter anyway?

For the sake of argument, let's say that somebody manages to create an AGI. Does it matter whether it has reasoning abilities if it works anyway? No one has proven that sapience is required for intelligence; after all, we only have a sample size of one, and hardly any conclusions can be drawn from that.

-

We actually have sentience, though, and are capable of creating new things and having realizations. AI isn’t real, and LLMs and diffusion models are simply reiterating algorithmic patterns; no LLM or diffusion model can create anything original or expressive.

Also, we aren’t “evolved primates.” We are just primates. The thing is, primates are the most socially and cognitively evolved species on the planet, so that’s not a denigrating sentiment unless you’re a pompous, condescending little shit.

The denigration of simulated thought processes, paired with aggrandizing of wetware processing, is exactly my point. The same self-serving narcissism that’s colored so many biased & flawed arguments in biological philosophy putting humans on a pedestal above all other animals.

It’s also hysterical and ironic that you insist on your own level of higher thinking, as you regurgitate an argument so unoriginal that a bot could’ve easily written it. Just absolutely no self-awareness.

-

These are almost the exact same talking points we used to hear about ‘why would anyone need a home computer?’ Wild how some people can be so consistently short-sighted again and again and again.

What makes you think you’re capable of sentience, when your comments are all cliches and you’re incapable of personal growth or vision or foresight?

What makes you think you’re capable of sentience when you’re asking machines to literally think for you?

-

The denigration of simulated thought processes, paired with aggrandizing of wetware processing, is exactly my point. The same self-serving narcissism that’s colored so many biased & flawed arguments in biological philosophy putting humans on a pedestal above all other animals.

It’s also hysterical and ironic that you insist on your own level of higher thinking, as you regurgitate an argument so unoriginal that a bot could’ve easily written it. Just absolutely no self-awareness.

It’s not higher thinking, it’s just actual thinking. Computers are not capable of that and never will be. It’s not a level of fighting progress, or whatever you are trying to get at, it’s just a realistic understanding of computers and technology. You’re jerking off a pipe dream, you don’t even understand how the technology you’re talking about works, and calling a brain “wetware” perfectly outlines that. You’re working on a script writer’s level of understanding how computers, hardware, and software work. You lack the grasp to even know what you’re talking about, this isn’t Johnny Mnemonic.

-

What makes you think you’re capable of sentience when you’re asking machines to literally think for you?

LoL. Am I less sentient for using a calculator?

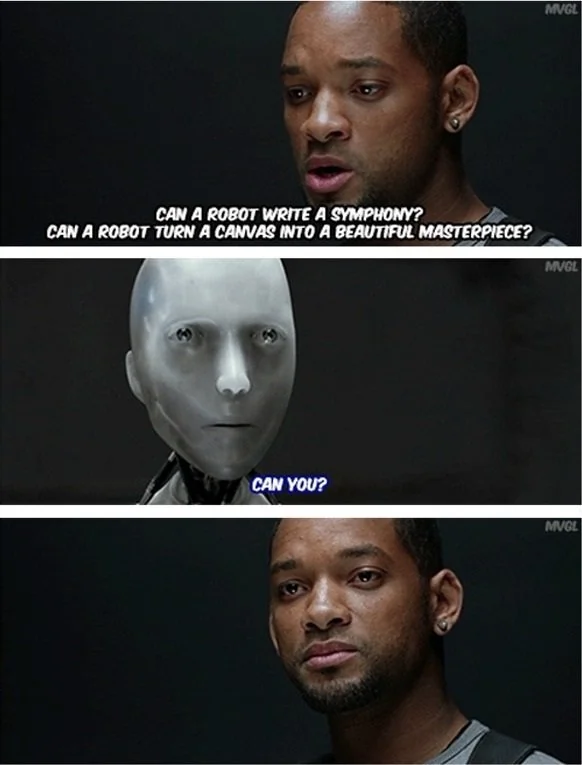

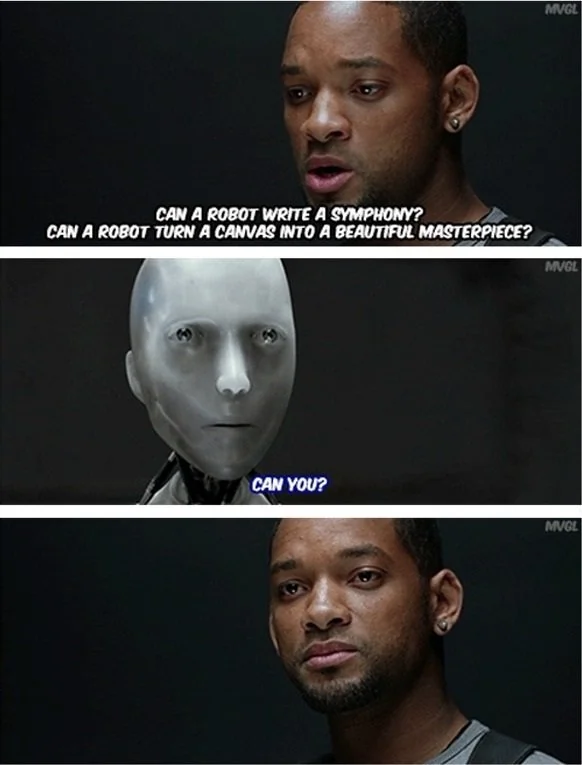

You’re astoundingly confident in your own sentience, for someone who seems to struggle to form an original thought. It’s like the convo was lifted straight out of that I, Robot interrogation scene. You hold the machines to standards you can’t meet yourself.

-

LoL. Am I less sentient for using a calculator?

You’re astoundingly confident in your own sentience, for someone who seems to struggle to form an original thought. It’s like the convo was lifted straight out of that I, Robot interrogation scene. You hold the machines to standards you can’t meet yourself.

Funny you should use that example, I am actually a musician and composer, so yes. You’ve proved nothing other than your own assumptions that everyone else is as limited in their ability to create, learn, and express themselves as you are. I’m not looking for a crutch, and you’re using a work of intentionally flawed fictional logic to attempt to make a point. The point you’ve established is you live in a fantasy world, but you don’t understand that because it involves computers.

-

I mean… “proving” is also just marketing speak. There is no clear definition of reasoning, so there’s also no way to prove or disprove that something/someone reasons.

Claiming it's just marketing fluff indicates you do not know what you're talking about.

They published a research paper on it. You are free to publish your own paper disproving theirs.

At the moment, you sound like one of those "I did my own research" people except you didn't even bother doing your own research.

-

It’s not higher thinking, it’s just actual thinking. Computers are not capable of that and never will be. It’s not a level of fighting progress, or whatever you are trying to get at, it’s just a realistic understanding of computers and technology. You’re jerking off a pipe dream, you don’t even understand how the technology you’re talking about works, and calling a brain “wetware” perfectly outlines that. You’re working on a script writer’s level of understanding how computers, hardware, and software work. You lack the grasp to even know what you’re talking about, this isn’t Johnny Mnemonic.

I call the brain “wetware” because there are companies already working with living neurons to be integrated into AI processing, and it’s an actual industry term.

That you so confidently declare machines will never be capable of processes we haven’t even been able to clearly define ourselves, paired with your almost religious fervor in opposition to its existence, really speaks to where you’re coming from on this. This isn’t coming from an academic perspective. This is clearly personal for you.

-

Funny you should use that example, I am actually a musician and composer, so yes. You’ve proved nothing other than your own assumptions that everyone else is as limited in their ability to create, learn, and express themselves as you are. I’m not looking for a crutch, and you’re using a work of intentionally flawed fictional logic to attempt to make a point. The point you’ve established is you live in a fantasy world, but you don’t understand that because it involves computers.

And there’s the reveal!! That’s why it’s so personal for you! It’s a career threat. It all adds up now.

-

I call the brain “wetware” because there are companies already working with living neurons to be integrated into AI processing, and it’s an actual industry term.

That you so confidently declare machines will never be capable of processes we haven’t even been able to clearly define ourselves, paired with your almost religious fervor in opposition to its existence, really speaks to where you’re coming from on this. This isn’t coming from an academic perspective. This is clearly personal for you.

Here’s the thing: I’m not against LLMs and diffusion models for things they can actually be used for. They have potential for real things, just not all the things you pretend exist. Neural implants aren’t AI. An intelligence is self-aware; if we achieved AI it wouldn’t be a program. You’re misconstruing Virtual Intelligence for artificial intelligence and you don’t even understand what a virtual intelligence is. You’re simply delusional in what you believe computer science and technology is, how it works, and what it’s capable of.

-

And there’s the reveal!! That’s why it’s so personal for you! It’s a career threat. It all adds up now.

I don’t make money from it; it’s something I do for personal enjoyment. That’s the entire purpose of art, and it’s something I also use algorithmic processing to do. I’m not going to hand over my enjoyment to have a servitor do something for me to take credit for; I prefer to use my brain, not replace it.