Something Bizarre Is Happening to People Who Use ChatGPT a Lot

-

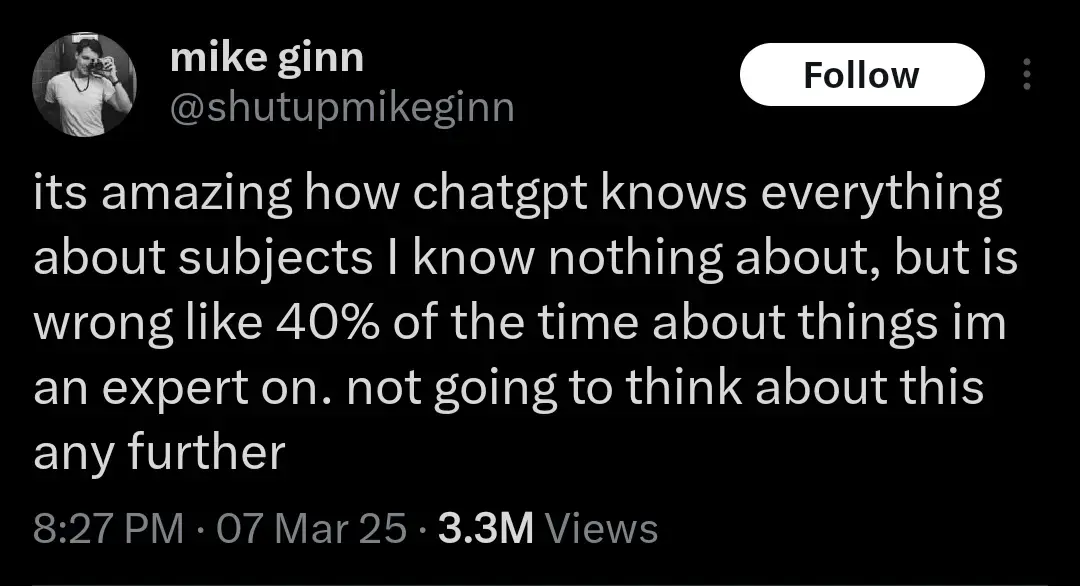

Another realization might be that the humans whose output ChatGPT was trained on were probably already 40% wrong about everything. But let's not think about that either. AI Bad!

I'll bite. Let's think:

- there are three humans who are 98% right about what they say, and where they know they might be wrong, they indicate it

- now there is an llm (fuck capitalization, I hate the ways they are shoved everywhere that much) trained on their output

- now the llm is asked about the topic and computes the answer string

By definition that answer string can contain all the probably-wrong things without proper indicators ("might", "under such and such circumstances" etc)

If you want to say 40% wrong llm means 40% wrong sources, prove me wrong

-

-

Jesus that's sad

Yeah. I tried talking to him about his AI use but I realized there was no point. He also mentioned he had tried RCs again and I was like alright you know you can't handle that but fine.. I know from experience you can't convince addicts they are addicted to anything. People need to realize that themselves.

-

I don’t know how people can be so easily taken in by a system that has been proven to be wrong about so many things. I got an AI search response just yesterday that dramatically understated an issue by citing an unscientific ideologically based website with high interest and reason to minimize said issue. The actual studies showed a 6x difference. It was blatant AF, and I can’t understand why anyone would rely on such a system for reliable, objective information or responses. I have noted several incorrect AI responses to queries, and people mindlessly citing said response without verifying the data or its source. People gonna get stupider, faster.

I don’t know how people can be so easily taken in by a system that has been proven to be wrong about so many things

Ahem. Wasn't there an election recently, in some big country, with an uncanny similarity to that?

-

Do you guys remember when the internet was the thing and everybody was like: "Look at those dumb fucks just putting everything online" and now it's: "Look at this weird motherfucker who doesn't post anything online"?

-

I'm getting the sense here that you're placing most - if not all - of the blame on LLMs, but that’s probably not what you actually think. I'm sure you'd agree there are other factors at play too, right? One theory that comes to mind is that the people you're describing probably spend a lot of time debating online and are constantly exposed to bad-faith arguments, personal attacks, people talking past each other, and dunking - basically everything we established is wrong with social media discourse. As a result, they've developed a really low tolerance for it, and the moment someone starts making noises sounding even remotely like those negative encounters, they automatically label them as “one of them” and switch into lawyer mode - defending their worldview against claims that aren’t even being made.

That said, since we're talking about your friends and not just some random person online, I think an even more likely explanation is that you’ve simply grown apart. When people close to you start talking to you in the way you described, it often means they just don’t care the way they used to. Of course, it’s also possible that you’re coming across as kind of a prick and they’re reacting to that - but I’m not sensing any of that here, so I doubt that’s the case.

I don’t know what else you’ve been up to over the past few years, but I’m wondering if you’ve been on some kind of personal development journey - because I definitely have, and I’m not the same person I was when I met my friends either. A lot of the things they may have liked about me back then have since changed, and maybe they like me less now because of it. But guess what? I like me more. If the choice is to either keep moving forward and risk losing some friends, or regress just to keep them around, then I’ll take being alone. Chris Williamson calls this the "Lonely Chapter" - you’re different enough that you no longer fit in with your old group, but not yet far enough along to have found the new one.

I think it has a unique influence that will continue to develop, but I don't think LLMs are the only influence to blame. There's a lot that can influence this behavior, like the theory you've described. Off the top of my head, limerence is something that could be an influence. I know that it is common for people to experience limerence for things like video game characters, and sometimes they project expectations onto others to behave like said characters. Other things could be childhood trauma, glass child syndrome, isolation from peers in adolescence, asocial tendencies; the list is long, I'd imagine.

For me, the self journey started young and never ends. It's something that's just a part of the human experience: relationships come and go, then sometimes they come back, etc. I will say though, with what I'm seeing with the people I'm talking about, this is a novel experience to me. It's something that's hard to navigate, and as a result I'm finding that it's actually isolating to experience. Like I mentioned before, I can have one-on-one chats, and when I see them in person, we do activities and have fun! But if any level of discomfort is detected, the expectation is brought on, and by the time I realize what's happening they're offering literal formatted templates on how to respond in conversations. Luckily it's not everyone in our little herd that has this behavior, but the people that do this the most I know for sure utilize ChatGPT heavily for these types of discussions, only because they recommended I start doing the same not too long ago. Nonetheless, I did like this discussion; it offers a lot of prospect in looking at how different factors influence our behavior with each other.

-

I loved my car. Just had to scrap it recently. I got sad. I didn't go through withdrawal symptoms or feel like I was mourning a friend. You can appreciate something without building an emotional dependence on it. I'm not particularly surprised this is happening to some people either, especially with the amount of brainrot out there surrounding these LLMs, so maybe bizarre is the wrong word, but it is a little disturbing that people are getting so attached to something that is so fundamentally flawed.

Sorry about your car! I hate that.

In an age where people are prone to feeling isolated & alone, for various reasons...this, unfortunately, is filling the void(s) in their life. I agree, it's not healthy or best.

-

-

Do you guys remember when the internet was the thing and everybody was like: "Look at those dumb fucks just putting everything online" and now it's: "Look at this weird motherfucker who doesn't post anything online"?

I remember when the Internet was a thing people went on and/or visited/surfed, but not something you'd imagine having 24/7.

-

If you actually read the article, I'm pretty sure the bizarre thing is really these people using a 'tool', forming a toxic parasocial relationship with it, becoming addicted and beginning to see it as a 'friend'.

What the Hell was the name of the movie with Tom Cruise where the protagonist's friend was dating a fucking hologram?

We're a hair's-breadth from that bullshit, and TBH I think that if falling in love with a computer program becomes the new de facto normal, I'm going to completely alienate myself by making fun of those wretched chodes non-stop.

-

now replace chatgpt with these terms, one by one:

- the internet

- tiktok

- lemmy

- their cell phone

- news media

- television

- radio

- podcasts

- junk food

- money

You go down a list of inventions pretty progressively, skimming the best of the last decade or two, then TV and radio... at a century or at most two.

Then you skip to currency, which is several millennia old.

-

I tried that Replika app before AI was trendy and immediately picked up on the fact that the AI companion thing is literal garbage.

Maybe it's about time we listened to that internet wisdom about touching some grass.

I tried that Replika app before AI was trendy

Same here, it was unbelievably shallow. Everything I liked it just mimicked, without even trying to simulate a real conversation. "Oh you like cucumbers? Me too! I also like electronic music, of course. Do you want some nudes?"

Even when I'm at my loneliest, I'd still rather be lonely than have a "conversation" with something like this. I really don't understand how some people can have relationships with an AI.

-

I am so happy God made me a Luddite

-

You go down a list of inventions pretty progressively, skimming the best of the last decade or two, then TV and radio... at a century or at most two.

Then you skip to currency, which is several millennia old.

They're clearly under the control of Big Train, Loom Lobbyists and the Global Gutenberg Printing Press Conspiracy.

-

I'll bite. Let's think:

- there are three humans who are 98% right about what they say, and where they know they might be wrong, they indicate it

- now there is an llm (fuck capitalization, I hate the ways they are shoved everywhere that much) trained on their output

- now the llm is asked about the topic and computes the answer string

By definition that answer string can contain all the probably-wrong things without proper indicators ("might", "under such and such circumstances" etc)

If you want to say 40% wrong llm means 40% wrong sources, prove me wrong

It's more up to you to prove that a hypothetical edge case you dreamed up is more likely than what happens in a normal bell curve. Given the size of typical LLM data this seems futile, but if that's how you want to spend your time, hey knock yourself out.

-

-

Another realization might be that the humans whose output ChatGPT was trained on were probably already 40% wrong about everything. But let's not think about that either. AI Bad!

This is a salient point that's well worth discussing. We should not be training large language models on any supposedly factual information that people put out. It's super easy to call out a bad research study and have it retracted. But you can't just explain to an AI that that study was wrong, you have to completely retrain it every time. Exacerbating this issue is the way that people tend to view large language models as somehow objective describers of reality, because they're synthetic and emotionless. In truth, an AI holds exactly the same biases as the people who put together the data it was trained on.

-

-

The quote was originally about news and journalists.

-

And sunshine hurts.

Said the vampire from Transylvania.

-

I don’t know how people can be so easily taken in by a system that has been proven to be wrong about so many things

Ahem. Wasn't there an election recently, in some big country, with an uncanny similarity to that?

Yeah. Got me there.

-

I couldn’t be bothered to read it, so I got ChatGPT to summarise it. Apparently there’s nothing to worry about.