Something Bizarre Is Happening to People Who Use ChatGPT a Lot

-

You go down a list of inventions pretty progressively, skimming the best of the last decade or two, then TV and radio... which are a century old, or at most two.

Then you skip to currency, which is several millennia old.

They're clearly under the control of Big Train, Loom Lobbyists and the Global Gutenberg Printing Press Conspiracy.

-

I'll bite. Let's think:

- There are three humans who are 98% right about what they say, and where they know they might be wrong, they indicate it.
- Now there is an llm (fuck capitalization, I hate how they're shoved everywhere that much) trained on their output.
- Now the llm is asked about the topic and computes the answer string.

By definition that answer string can contain all the probably-wrong things without proper indicators ("might", "under such and such circumstances" etc)

If you want to say a 40%-wrong llm means 40%-wrong sources, prove me wrong.

It's more up to you to prove that a hypothetical edge case you dreamed up is more likely than what happens in a normal bell curve. Given the size of typical LLM data this seems futile, but if that's how you want to spend your time, hey knock yourself out.

-

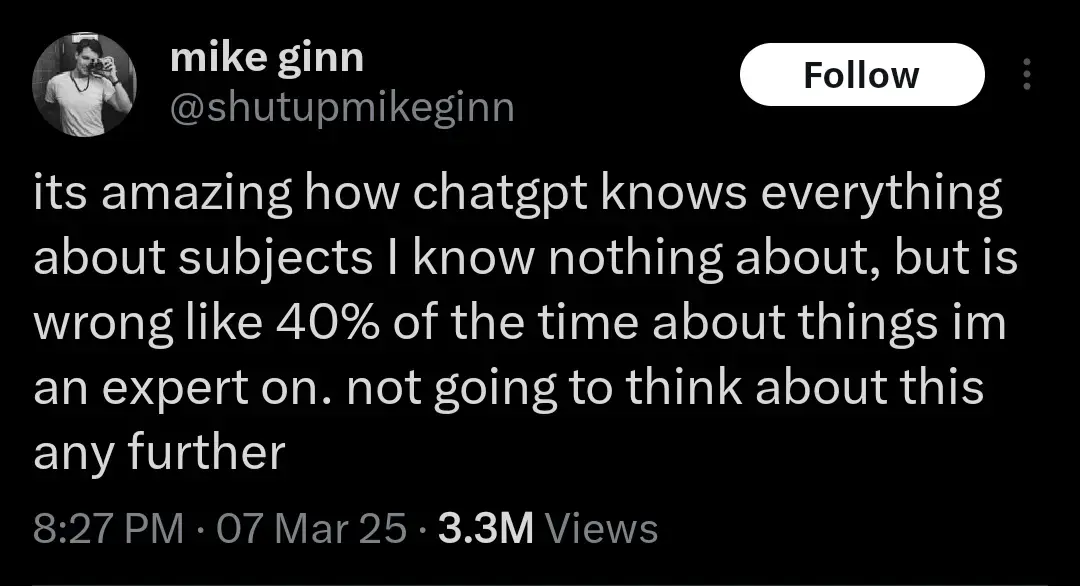

Another realization might be that the humans whose output ChatGPT was trained on were probably already 40% wrong about everything. But let's not think about that either. AI Bad!

This is a salient point that's well worth discussing. We should not be training large language models on any supposedly factual information that people put out. It's super easy to call out a bad research study and have it retracted. But you can't just explain to an AI that that study was wrong, you have to completely retrain it every time. Exacerbating this issue is the way that people tend to view large language models as somehow objective describers of reality, because they're synthetic and emotionless. In truth, an AI holds exactly the same biases as the people who put together the data it was trained on.

-

The quote was originally on news and journalists.

-

And sunshine hurts.

Said the vampire from Transylvania.

-

I don’t know how people can be so easily taken in by a system that has been proven to be wrong about so many things

Ahem. Wasn't there an election recently, in some big country, with an uncanny similarity to that?

Yeah. Got me there.

-

I couldn’t be bothered to read it, so I got ChatGPT to summarise it. Apparently there’s nothing to worry about.

-

Andrej Karpathy (one of the founders of OpenAI, where he worked from 2015 to 2017; he then worked at Tesla from 2017 to 2022, returned to OpenAI for a while, and is now working on his startup "Eureka Labs - we are building a new kind of school that is AI native") posted a tweet defining the term:

There's a new kind of coding I call "vibe coding", where you fully give in to the vibes, embrace exponentials, and forget that the code even exists. It's possible because the LLMs (e.g. Cursor Composer w Sonnet) are getting too good. Also I just talk to Composer with SuperWhisper so I barely even touch the keyboard. I ask for the dumbest things like "decrease the padding on the sidebar by half" because I'm too lazy to find it. I "Accept All" always, I don't read the diffs anymore. When I get error messages I just copy paste them in with no comment, usually that fixes it. The code grows beyond my usual comprehension, I'd have to really read through it for a while. Sometimes the LLMs can't fix a bug so I just work around it or ask for random changes until it goes away. It's not too bad for throwaway weekend projects, but still quite amusing. I'm building a project or webapp, but it's not really coding - I just see stuff, say stuff, run stuff, and copy paste stuff, and it mostly works.

People ignore the "It's not too bad for throwaway weekend projects" part and try to use this style of coding to create "production-grade" code... Let's just say it's not going well.

source (xcancel link)

The amount of damage a newbie programmer without a tight leash can do to a code base/product is immense. Once something is out in production, it's something you have to deal with forever. That temporary fix they push will still be in use a decade from now, and if you break it, you have to explain to the customer why the thing that's been working for them for years is now broken and what you plan to do to remedy the situation.

-

Do you guys remember when the internet was the new thing and everybody was like: "Look at those dumb fucks, just putting everything online", and now it's: "Look at this weird motherfucker who doesn't post anything online"?

Remember when people used to say and believe "Don't believe everything you read on the internet"?

I miss those days.

-

Not a lot of meat on this article, but yeah, I think it's pretty obvious that those who seek automated tools to define their own thoughts and feelings become dependent. If one is so incapable of mapping out one's thoughts and putting them into written words, it's natural they'd seek the ease and comfort of the "good enough" (fucking shitty as hell) output of a bot.

-

This makes a lot of sense, because what we have been seeing over the last decade or so is that digital-only socialization isn't a replacement for in-person socialization. Increased social media usage shows increased loneliness, not a decrease. It makes sense that something even more fake, like ChatGPT, would make it worse.

I don't want to sound like a Luddite, but relying too heavily on digital communication for all interactions is a poor substitute for in-person interaction. I know I have to prioritize seeing people in the real world because I work from home, and spending time on Lemmy during the day doesn't fill that need.

In person socialization? Is that like VR chat?

-

I remember when the Internet was a thing people went on and/or visited/surfed, but not something you'd imagine having 24/7.

I was there from the start; you must have never BBS'd or IRC'd. Shit was amazing in the early days.

I mean, honestly, nothing has really changed: we are still at our terminals looking at text. The only real innovation has been inline pics, videos and audio. 30+ years ago, one had to click a link to see that stuff.

-

Presuming you're writing in Python: Check out https://docs.astral.sh/ruff/

It's an all-in-one tool that combines several older (pre-existing) tools. Very fast, very cool.

-

If you actually read the article, I'm pretty sure the bizarre thing is really these people using a 'tool', forming a toxic parasocial relationship with it, becoming addicted and beginning to see it as a 'friend'.

You never viewed a tool as a friend? Pretty sure there are some guys that like their cars more than most friends. Bonding with objects isn't that weird, especially one that can talk to you like it's human.

-

People addicted to tech omg who could've guessed. Shocked I tell you.

-

I am so happy God made me a Luddite

Yeah look at all this technology you can't use! It's so empowering.

-

I don’t know how people can be so easily taken in by a system that has been proven to be wrong about so many things. I got an AI search response just yesterday that dramatically understated an issue by citing an unscientific, ideologically based website with a strong interest in, and reason for, minimizing said issue. The actual studies showed a 6x difference. It was blatant AF, and I can’t understand why anyone would rely on such a system for reliable, objective information or responses. I have noted several incorrect AI responses to queries, and people mindlessly citing said responses without verifying the data or its source. People gonna get stupider, faster.

That's why I only use it as a starting point. It spits out "keywords" and a fuzzy gist of what I need, then I can verify or experiment on my own. It's just a good place to start or a reminder of things you once knew.

-

That is peak clickbait, bravo.