Something Bizarre Is Happening to People Who Use ChatGPT a Lot

-

This post did not contain any content.

This makes a lot of sense, because what we have been seeing over the last decade or so is that digital-only socialization isn't a replacement for in-person socialization. Increased social media usage goes along with increased loneliness, not a decrease. It makes sense that something even more fake like ChatGPT would make it worse.

I don't want to sound like a Luddite, but relying on digital communication for all interactions is a poor substitute for in-person interaction. I know I have to prioritize seeing people in the real world because I work from home, and spending time on Lemmy during the day doesn't fulfill that need.

-

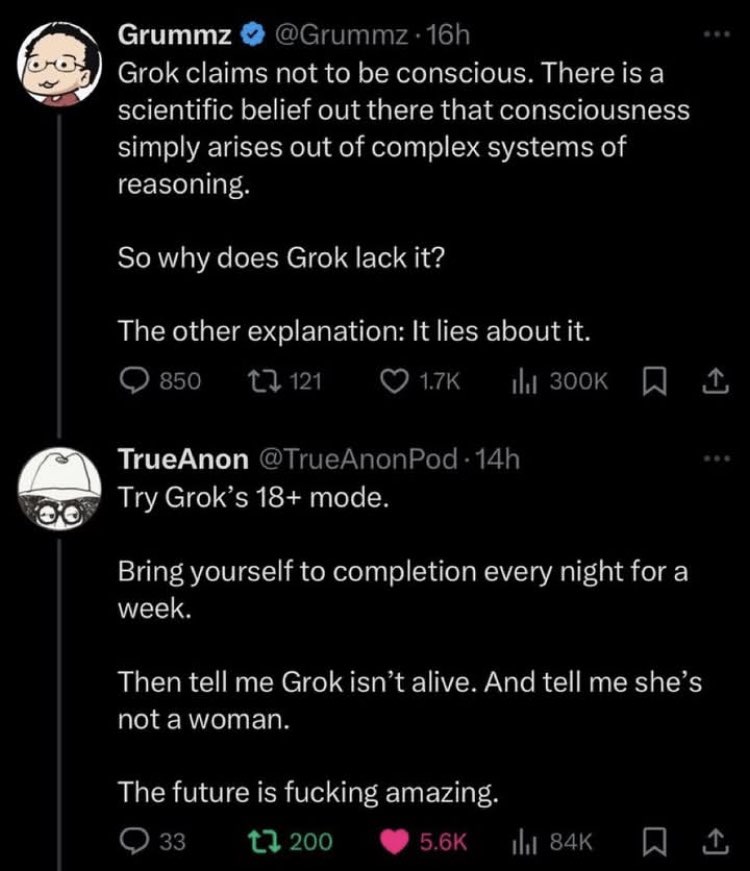

Jfc, I didn't even know who Grummz was until yesterday but gawdamn that is some nuclear cringe.

-

How do you even have a conversation without quitting in frustration from its obviously robotic answers?

Talking with actual people online isn’t much better. ChatGPT might sound robotic, but it’s extremely polite, actually reads what you say, and responds to it. It doesn’t jump to hasty, unfounded conclusions about you based on tiny bits of information you reveal. When you’re wrong, it just tells you what you’re wrong about - it doesn’t call you an idiot and tell you to go read more. Even in touchy discussions, it stays calm and measured, rather than getting overwhelmed with emotion, which becomes painfully obvious in how people respond. The experience of having difficult conversations online is often the exact opposite. A huge number of people on message boards are outright awful to those they disagree with.

Here’s a good example of the kind of angry, hateful message you’ll never get from ChatGPT - and honestly, I’d take a robotic response over that any day.

I think these people were already crazy if they’re willing to let a machine shovel garbage into their mouths blindly. Fucking mindless zombies eating up whatever is big and trendy.

I agree with what you say, and I for one have had my fair share of shit asses on forums and discussion boards. But this response also fuels my suspicion that my friend group has started using it in place of human interactions to form thoughts, opinions, and responses during our conversations. Almost like an emotional crutch for talking in conversation, but not exactly? It's hard to pinpoint.

I've recently been tone policed a lot more over things that in normal real-life interactions would be lighthearted or easy to ignore and move on from - I'm not shouting obscenities or calling anyone names, it's just harmless misunderstandings that come from the tone deafness of text. I'm talking about things like a cute emoji or words like silly willy becoming offensive to people I know personally. It wasn't until I asked a rhetorical question to spark a thoughtful conversation that I had to think about what was even happening - someone responded with an answer literally from ChatGPT, and it gave a technical definition of something that was a part of my question. Your answer has finally started linking things for me; for better or for worse, people are using it because you don't receive offensive or flaming answers. My new suspicion is that some people are now taking those answers and applying the same expectation to people they know in real life, and when someone doesn't respond in the same predictable manner as the AI, they become upset and further isolated from real-life interactions or text conversations with real people.

-

New DSM / ICD is dropping with AI dependency. But it's unreadable because image generation was used for the text.

-

I don’t personally feel like this applies to people who know me in real life, even when we’re just chatting over text. If the tone comes off wrong, I know they’re not trying to hurt my feelings. People don’t talk to someone they know the same way they talk to strangers online - and they’re not making wild assumptions about me either, because they already know who I am.

Also, I’m not exactly talking about tone per se. While written text can certainly have a tone, a lot of it is projected by the reader. I’m sure some of my writing might come across as hostile or cold too, but that’s not how it sounds in my head when I’m writing it. What I’m really complaining about - something real people often do and AI doesn’t - is the intentional nastiness. They intend to be mean, snarky, and dismissive. Often, they’re not even really talking to me. They know there’s an audience, and they care more about how that audience reacts. Even when they disagree, they rarely put any real effort into trying to change the other person’s mind. They’re just throwing stones. They consider an argument won when their comment calling the other person a bigot got 25 upvotes.

In my case, the main issue with talking to my friends compared to ChatGPT is that most of them have completely different interests, so there’s just not much to talk about. But with ChatGPT, it doesn’t matter what I want to discuss - it always acts interested and asks follow-up questions.

-

Tell me more about these beans

I’ve tasted other cocoas. This is the best!

-

New DSM / ICD is dropping with AI dependency. But it's unreadable because image generation was used for the text.

This is perfect for the billionaires in control. Now if you suggest that "hey, maybe these AIs have developed enough to be sentient and sapient beings (not saying they are now) and probably deserve rights", they can just label you (and that argument) mentally ill.

Foucault laughs somewhere

-

What the fuck is vibe coding... Whatever it is I hate it already.

-

Jfc, I didn't even know who Grummz was until yesterday but gawdamn that is some nuclear cringe.

That's a pretty good summary of Grummz

-

I need to read Amusing Ourselves to Death....

My notes on it https://fabien.benetou.fr/ReadingNotes/AmusingOurselvesToDeath

But yes, stop scrolling, read it.

-

I can see how people would seek refuge in talking to an AI, given that a lot of online forums have really inflammatory users; it is one of the biggest downsides of online interaction. I have had similar thoughts myself - without knowing me, strangers could see something I write as hostile or cold, but it's really more often friends who turn blind to what I'm saying and project a tone that is likely not there to begin with. They used to not do that, but in the past year or so it's gotten to the point where I frankly just don't participate in our group chats and really only talk if it's one-on-one over text or in person. I feel like I'm walking on eggshells; even when I show genuine interest in the conversation, it is taken the wrong way. That being said, I think we're coming from opposite ends of a shared experience and seeing the same thing; we're just viewing it differently because of what we have each experienced individually. This gives me more to think about!

I feel a lot of similarities in your last point, especially with having friends who have wildly different interests. Most of mine don't care to even reach out to me beyond a few things here and there; they don't ask follow-up questions and they're certainly not interested when I do speak. To share what I'm seeing, my friends are using these LLMs to such an extent that if I am not responding in the same manner or structure, I'm either ignored or told I'm not providing the response they wanted. This is where the tone comes in for me, because ChatGPT will still have a considerate tone of sorts toward the user; that is, it's calm, non-judgmental, and friendly. With that, the people in my friend group who do heavily use it appear to have become more sensitive to how others in the group, like me, talk, to the point where they take it upon themselves to correct my speech because the cadence, tone, and/or structure doesn't fit an unspoken expectation I wouldn't know about. I find it concerning because, leaving aside the people who are intentionally mean, in interpersonal relationships it's creating an expectation that a human being can't meet. We have emotions and conversation patterns that vary, and we're not always predictable in what we say, which can suck when you want someone to be interested in you and have meaningful conversations but it doesn't tend to pan out. And I feel that. A lot, unfortunately. AKA I just wish my friends cared sometimes.

-

Brain bleaching?

-

I can see how targeted ads like that would be overwhelming. Would you like me to sign you up for a free 7-day trial of BetterHelp?

Your fear of constant data collection and targeted advertising is valid and draining. Take back your privacy with this code for 30% off Nord VPN.

-

Wake me up when you find something people will not abuse and get addicted to.

-

I knew a guy I went to rehab with. Talked to him a while back and he invited me to his Discord server. It was him, like three self-trained LLMs, and a bunch of inactive people he had invited, like me. He would hold conversations with the LLMs like they had anything interesting or human to say, which they didn't. Honestly a very disgusting image. I left because I figured he was on the shit again and had lost it, and I didn't want to get dragged into anything.

-

What the fuck is vibe coding... Whatever it is I hate it already.

Andrej Karpathy (one of the founders of OpenAI, who left in 2017 to work for Tesla until 2022, worked for OpenAI a bit more, and is now working on his startup "Eureka Labs - we are building a new kind of school that is AI native") made a tweet defining the term:

There's a new kind of coding I call "vibe coding", where you fully give in to the vibes, embrace exponentials, and forget that the code even exists. It's possible because the LLMs (e.g. Cursor Composer w Sonnet) are getting too good. Also I just talk to Composer with SuperWhisper so I barely even touch the keyboard. I ask for the dumbest things like "decrease the padding on the sidebar by half" because I'm too lazy to find it. I "Accept All" always, I don't read the diffs anymore. When I get error messages I just copy paste them in with no comment, usually that fixes it. The code grows beyond my usual comprehension, I'd have to really read through it for a while. Sometimes the LLMs can't fix a bug so I just work around it or ask for random changes until it goes away. It's not too bad for throwaway weekend projects, but still quite amusing. I'm building a project or webapp, but it's not really coding - I just see stuff, say stuff, run stuff, and copy paste stuff, and it mostly works.

People ignore the "It's not too bad for throwaway weekend projects" part and try to use this style of coding to create "production-grade" code... Let's just say it's not going well.

source (xcancel link)

-

Jfc, I didn't even know who Grummz was until yesterday but gawdamn that is some nuclear cringe.

Something worth knowing about that guy?

I mean, apart from the fact that he seems to be a complete idiot?

Midwits shouldn't have been allowed on the Internet.

-

people tend to become dependent upon AI chatbots when their personal lives are lacking. In other words, the neediest people are developing the deepest parasocial relationship with AI

Preying on the vulnerable is a feature, not a bug.

That was clear from GPT-3, day 1.

I read a Reddit post about a woman who used GPT-3 to effectively replace her husband, who had passed on not too long before that. She used it as a way to grieve, I suppose? She ended up noticing that she was getting too attached to it, and had to leave him behind a second time...

-

If you are dating a body pillow, I think that's a pretty good sign that you have taken a wrong turn in life.

What if it's either that or suicide? I imagine that people who make that choice don't have a lot of options. Monetary, physical, or mental issues may mean they cannot make another choice.

-

Thanks, I'll look into it!

Presuming you're writing in Python: Check out https://docs.astral.sh/ruff/

It's an all-in-one tool that combines several older (pre-existing) tools. Very fast, very cool.