Just ordinary trust issues...

-

In Spanish, up until 1994, "ll" and "ch" were considered distinct letters from the component parts. But "rr" has never been considered distinct from "r," even though it is pronounced differently, in large part because no words start with "rr" and any word that starts with "r" is pronounced with the rolling R sound.

Aren't all R's rolling, though? Some longer and some shorter. I.e. rr and r. Guess I get it, though. Words starting with a single 'r' are pronounced like 'rr'. Interesting on the 'll' and 'ch' bits, too. Wasn't aware. ¡Gracias, RAE!

-

Aren't all R's rolling, though? Some longer and some shorter. I.e. rr and r. Guess I get it, though. Words starting with a single 'r' are pronounced like 'rr'. Interesting on the 'll' and 'ch' bits, too. Wasn't aware. ¡Gracias, RAE!

No, single r is tapped, not rolled. It's similar but still a different sound.

-

In Spanish, up until 1994, "ll" and "ch" were considered distinct letters from the component parts. But "rr" has never been considered distinct from "r," even though it is pronounced differently, in large part because no words start with "rr" and any word that starts with "r" is pronounced with the rolling R sound.

Thanks, I learned Spanish at school in the late odds and I guess I confused it. My teacher was quite old so she wasn't up to date, I guess.

-

No, single r is tapped, not rolled. It's similar but still a different sound.

Oh, okay. Sounds similar. Sounds like rolling, but just once. Rolling if you turn off the looping option. I am not a linguist, though, so I don't know the intricacies of sounds

-

Wrong! There's no r in strawberry, only an str and an rr.

str(awberry)

-

Oh, okay. Sounds similar. Sounds like rolling, but just once. Rolling if you turn off the looping option. I am not a linguist, though, so I don't know the intricacies of sounds

Yes, I think that sums up the difference quite well. If you want to dive into it: this is the single r and this is rr

-

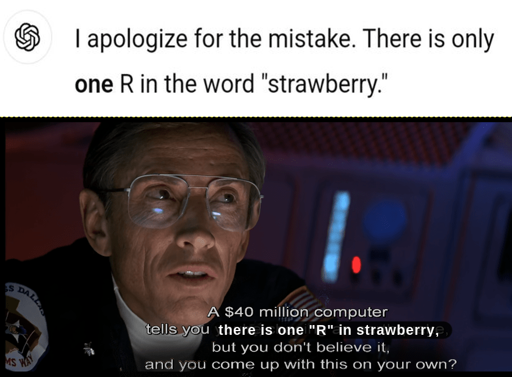

At my work, it's become common for people to say "AI level" when giving a confidence score. Without saying anything else, everyone seems to perfectly understand the situation, even if hearing it for the first time.

Keep in mind, we have our own in-house models that are bloody fantastic, used for different sciences and research. We'd never talk ill of those, but it's not the first thing that comes to mind when people hear "AI" these days.

"Keep in mind, we have our own in-house models that are bloody fantastic, used for different sciences and research."

I'm a scientist who has become super interested in this stuff in recent years, and I have adopted the habit of calling the legit stuff "machine learning", reserving "AI" for the hype machine bullshit

-

"Keep in mind, we have our own in-house models that are bloody fantastic, used for different sciences and research."

I'm a scientist who has become super interested in this stuff in recent years, and I have adopted the habit of calling the legit stuff "machine learning", reserving "AI" for the hype machine bullshit

This hits hard. I was in college when I first learned a machine solved the double pendulum problem. Problem is, we have no idea how the equation works, though. I remember thinking of all the stuff machine learning could solve. Then they overhyped these LLMs that are good at ::checks my notes:: chatting with you.....

-

"Keep in mind, we have our own in-house models that are bloody fantastic, used for different sciences and research."

I'm a scientist who has become super interested in this stuff in recent years, and I have adopted the habit of calling the legit stuff "machine learning", reserving "AI" for the hype machine bullshit

Lmao I'm doing the exact thing. I'm a ChemE and I have been doing a lot of work on AI-based process controls, and I have coached members of my team to use "ML" and "Machine Learning" to refer to these systems, because things like ChatGPT that most people see as toys are all that people think about with "AI." The other day someone asked me "So have you gotten ChatGPT running the plant yet?" And I laughed and said no and explained the difference between what we're doing and the AI that you see in the news. I've even had to include slides in just about every presentation I've done on this to say "no, we are not just asking ChatGPT how to run the process," because that's the first thing that comes to mind, and it scares them because ChatGPT is famously very prone to making shit up.

-

"Keep in mind, we have our own in-house models that are bloody fantastic, used for different sciences and research."

I'm a scientist who has become super interested in this stuff in recent years, and I have adopted the habit of calling the legit stuff "machine learning", reserving "AI" for the hype machine bullshit

It's certainly becoming easier, but I don't like it.

We have a cool AI that's a big problem solver, but its outputs are complex. We've attached a GPT onto it purely to act kind of like a translator or summariser, to save time trying to understand what the AI's done and why. It's great. But we definitely don't see the GPT as offering any sort of intelligence; it's just a reference-based algorithmic protocol bolted onto an actual AI. Protocols are, after all, a set of rules or processes to follow. The GPT isn't offering any logic, reasoning, planning, etc., which are still the conditions of intelligence in computer science. But it certainly can give off the impression of intelligence, as it's literally designed to impersonate it.