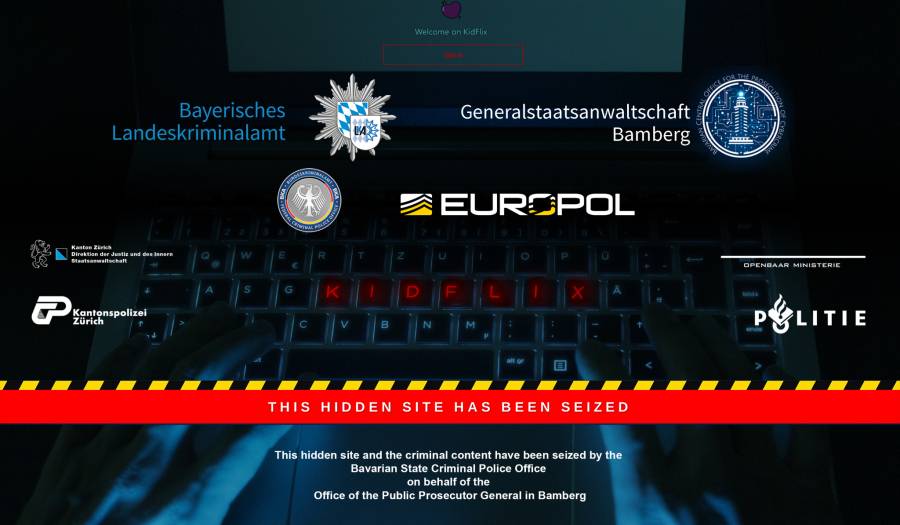

European police say KidFlix, "one of the largest pedophile platforms in the world," busted in joint operation.

-

What the fuck, I've never run into anyone like that. Don't even wanna know where you do this regularly.

-

From a month ago:

https://lemmy.world/post/26165823/15375845

Gross. Probably the reason they got banned from Reddit for the same thing: promoting or soliciting CSAM.

I don't really think Reddit minds that, actually, given that u/spez was the lead mod of r/jailbait until he got caught and hid who the mods were.

-

I feel like what he’s trying to say is that it shouldn’t be the end of the world if a kid sees a sex scene in a movie, and that it should be OK for them to know it exists. But the way he phrases it is questionable at best.

When I was a kid I was forced to leave the room when any intimate scenes were in a movie and I honestly do feel like it fucked with my perception of sex a bit. Like it’s this taboo thing that should be hidden away and never discussed.

-

I don't know about that.

I spot most of it while looking for out-of-print books about growing orchids on the typical file-sharing networks. The term "blue orchid" seems to be frequently used in file names of things that are in no way related to gardening. The eMule network is especially bad.

When I was looking into messaging clients a couple years ago, to figure out what I wanted to use, I checked out a public user directory for the Tox messaging network and it was maybe 90% people openly trying to find, or offering, custom made CP. On the open internet, not an onion page or anything.

Then maybe last year, I joined openSUSE's official Matrix channels, and some random person (who, to be clear, did not seem connected to the distro) invited me to join a room called openSUSE Child Porn, with a room logo that appeared to be an actual photo of a small girl being violated by a grown man.

I hope to god these are all cops, because I have no idea how there can be so many pedos just openly doing their thing without being caught.

-

Search "AI woman porn miniskirt," and tell me you don't see questionable results in the first 2 pages, of women who at least appear possibly younger than 18.

Fuck, the head guy of Reddit, u/spez, was the main mod of r/jailbait before he changed the design of Reddit so he could hide mod names. Also, look into the u/MaxwellHill / Ghislaine Maxwell conspiracy on Reddit.

Search “AI woman porn miniskirt,”

Did it with SafeSearch off and got a bunch of women clearly in their late teens or 20s. Plus, I don't want to derail my main point, but I think we should acknowledge the difference between a picture of a real child actively being harmed and a 100% fake image. I didn't find any AI CP, but even if I did, a real image is in an entirely different universe of morally bad.

r/jailbait

That was, what, fifteen years ago? It's why I said "in the last decade".

-

Most definitely not clean lmao, you're just not actively searching for it or stumbling onto it.

-



with a catchy name clearly thought up by a marketing person

A marketing person? They took "Netflix" and changed the first three letters lol

-

Well, some pedophiles have argued that AI-generated child porn should be allowed so that real humans are not harmed and exploited.

I'm conflicted on that. Naturally, I'm disgusted and repulsed.

But if no real child is harmed...

I don't want to think about it anymore.

Issue is, AI is often trained on real children, sometimes even on real CSAM (allegedly), which makes the "no real children were harmed" part not necessarily 100% true.

Also since AI can generate photorealistic imagery, it also muddies the water for the real thing.

-

During the investigation, Europol’s analysts from the European Cybercrime Centre (EC3) provided intensive operational support to national authorities by analysing thousands of videos.

I don't know how you can do this job and not get sick, because looking away is not an option.

You do get sick, and I would be most surprised if they didn't allow people to look away and take breaks or get support as needed.

-

It's not a strawman if they repeat your own logic back at you. You just had a shit take.

My take was that everyone should be illiterate. Good work.

-


Kidflix sounds like a feature on Nickelodeon. The world is disgusting.

Or a Netflix for children/video editing app for primary schoolers in the early 2000s/late 1900s.

-

And it didn't even require sacrificing encryption huh!

Basically, the only reason I read the article was to find out whether they needed a "backdoor" in encryption. Guess they don't need it, just like everyone with a little bit of IT knowledge always told them.

-

You do get sick, and I would be most surprised if they didn't allow people to look away and take breaks or get support as needed.

Indeed, and in my country psychological support is even mandatory. I also know there have been pilots using ML to go through the videos. When the system detects explicit material, an officer has to confirm it, but it spares them from going through every single video all day, every day. I think Microsoft has also been working on a database of hashes, supplied by law enforcement, to automatically detect material that has already been identified. All in all, a gruesome job, but fortunately technology is alleviating the harshest parts bit by bit.

-

I worked in customer service for a long time. No one was trained on how to be law enforcement, and no one was paid enough to be entrusted with public safety beyond the common sense everyday people have about these things. I reported every instance of child abuse I've seen, and that's maybe 4 times in two decades. I have no problem with training and reporting, but you have to accept that the service staff aren't going to police hotels.

-

I imagine it's easier to catch uploaders than viewers.

It's also probably more impactful to go for the big "power producers" simultaneously and quickly before word gets out and people start locking things down.

Yeah, I suspect they didn't go after any viewers, only uploaders.

-


Indeed, and in my country psychological support is even mandatory. I also know there have been pilots using ML to go through the videos. When the system detects explicit material, an officer has to confirm it, but it spares them from going through every single video all day, every day. I think Microsoft has also been working on a database of hashes, supplied by law enforcement, to automatically detect material that has already been identified. All in all, a gruesome job, but fortunately technology is alleviating the harshest parts bit by bit.

And this is for law-enforcement-level personnel. Meta and friends just outsource content moderation to low-wage countries and let the poors deal with the PTSD themselves.

Let’s hope that’s what AI can help with, instead of techbrocracy.