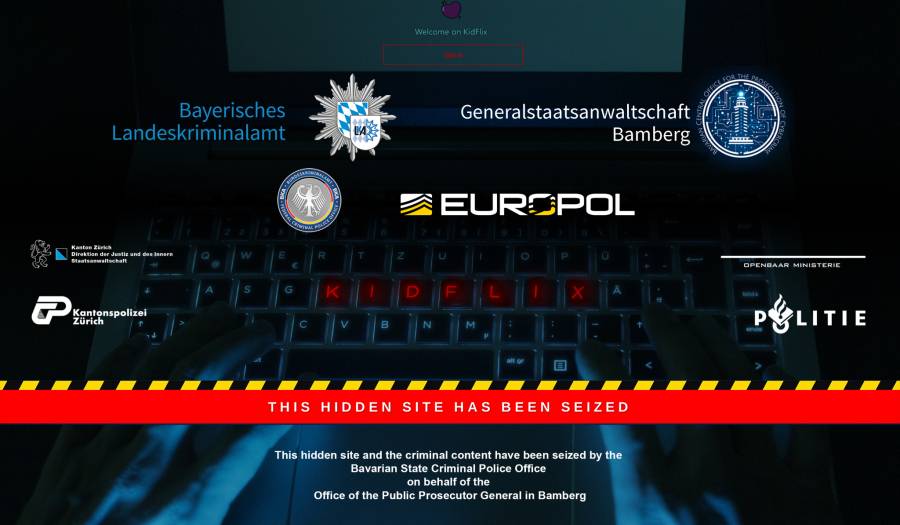

European police say KidFlix, "one of the largest pedophile platforms in the world," busted in joint operation.

-

You do get sick, and I would be most surprised if they didn't allow people to look away and take breaks/get support as needed.

Indeed, but in my country the psychological support is even mandatory. Furthermore, I know there have been pilots using ML to go through the videos. When the system detects explicit material, an officer has to confirm it. But it prevents them going through it all day every day for each video. I think Microsoft has also been working on a database with hashes that LEO provides to automatically detect materials that have already been identified. All in all, a gruesome job, but fortunately technology is alleviating the harshest activities bit by bit.

-

I worked in customer service a long time. No one was trained on how to be law enforcement and no one was paid enough to be entrusted with public safety beyond the common sense everyday people have about these things. I reported every instance of child abuse I've seen, and that's maybe 4 times in two decades. I have no problem with training and reporting, but you have to accept that the service staff aren't going to police hotels.

-

I imagine it's easier to catch uploaders than viewers.

It's also probably more impactful to go for the big "power producers" simultaneously and quickly before word gets out and people start locking things down.

Yeah, I don't suspect they went after any viewers, only uploaders.

-

Well, some pedophiles have argued that AI generated child porn should be allowed, so that real humans are not harmed and exploited.

I'm conflicted on that. Naturally, I'm disgusted and repulsed.

But if no real child is harmed...

I don't want to think about it anymore.

-

Indeed, but in my country the psychological support is even mandatory. Furthermore, I know there have been pilots using ML to go through the videos. When the system detects explicit material, an officer has to confirm it. But it prevents them going through it all day every day for each video. I think Microsoft has also been working on a database with hashes that LEO provides to automatically detect materials that have already been identified. All in all, a gruesome job, but fortunately technology is alleviating the harshest activities bit by bit.

And this is for law enforcement level of personnel. Meta and friends just outsource content moderation to low-wage countries and let the poors deal with the PTSD themselves.

Let’s hope that’s what AI can help with, instead of techbrocracy

-

Somehow I doubt allowing it actually meaningfully helps the situation. It sounds like an alcoholic arguing that a glass of wine actually helps them not drink.

-

You do get sick, and I would be most surprised if they didn't allow people to look away and take breaks/get support as needed.

Yes, my wife used to work in the ER. She still tells the same stories over and over again 15 years later, because the memories of the horrible shit she saw don't go away.

-

qualifying that as advocating for pedophilia is crazy. all that said is they don't know about studies regarding it so they said probably instead of making a definitive statement. your response is extremely over the top and hostile to someone who didn't advocate for what you're saying they advocate for.

It's none of my business what you do with your time here but if I were you I'd be more cool headed about this because this is giving qanon.

-

I'd be surprised if many "producers" are caught. From what I have heard, most uploads on those sites are reuploads because it's orders of magnitude easier.

Of the 1400 people caught, I'd say maybe 10 were site administrators and the rest passive "consumers" who didn't use Tor. I wouldn't get my hopes up too much that anyone who was caught ever committed child abuse themselves.

I mean, 1400 identified out of 1.8 million really isn't a whole lot to begin with.

If most are reuploads anyway that kills the whole argument that deleting things works though.

-

From a month ago:

https://lemmy.world/post/26165823/15375845

As said before, that person was not advocating for anything. He made a qualified statement, which you answered with examples of kids in cults, and then you flipped out, calling him all kinds of nasty things.

-

I imagine it's easier to catch uploaders than viewers.

It's also probably more impactful to go for the big "power producers" simultaneously and quickly before word gets out and people start locking things down.

It also likely gives you the best $ spent/children protected rate, because you know the producers have children they are abusing which may or may not be the case for a viewer.

-

If most are reuploads anyway that kills the whole argument that deleting things works though.

Not quite. Reuploading is at the very least an annoying process.

Uploading anything over Tor is a gruelling process. Downloading already takes a long time, uploading even more so. Most consumer internet plans aren't symmetric either, with significantly lower upload than download speeds. Plus, you need to find a direct-download provider which doesn't block Tor exit nodes and where uploading/downloading is free.

Taking something down is quick. A script scraping these forums which automatically reports the download links (any direct-download site quickly removes reported CSAM, by the way; no one wants to host this legal nightmare) can take down thousands of uploads per day.

Making the experience horrible leads to a slow death of those sites. Imagine if 95% of videos on [generic legal porn site] led to a "Sorry! This content has been taken down." message. How much traffic would the site lose? I'd argue quite a lot.

-

qualifying that as advocating for pedophilia is crazy. all that said is they don't know about studies regarding it so they said probably instead of making a definitive statement. your response is extremely over the top and hostile to someone who didn't advocate for what you're saying they advocate for.

It's none of my business what you do with your time here but if I were you I'd be more cool headed about this because this is giving qanon.

Even then, a common bit you'll hear from people actually defending pedophilia is that the damage caused is a result of how society reacts to it or the way it's done because of the taboo against it rather than something inherent to the act itself, which would be even harder to do research on than researching pedophilia outside a criminal context already is to begin with. For starters, you'd need to find some culture that openly engaged in adult sex with children in some social context and was willing to be examined to see if the same (or different or any) damages show themselves.

And that's before you get into the question of defining where exactly you draw the age line before it "counts" as child sexual abuse, which doesn't have a single, coherent answer. The US alone has at least three different answers to how old someone has to be before having sex with them is not illegal based on their age alone (16-18, with 16 being most common), with many states having exceptions that go lower (exceptions where the partners are close "enough" in age are pretty common). For example, in my state the age of consent is 16, with an exception if the parties are less than 4 years apart in age. In California, by comparison, if two 17 year olds have sex they've both committed a misdemeanor unless they are married.

-

it says "this hidden site", meaning it was a site on the dark web. It probably took them a while to figure out where the site was located so they could shut it down.

it says “this hidden site”, meaning it was a site on the dark web.

Not just on the dark web (which technically is anything not indexed by search engines) but hidden sites are specifically a Tor thing (though Freenet/Hyphanet has something similar but it's called something else). Usually a Tor hidden site has a URL that ends in .onion and the Tor protocol has a structure for routing .onion addresses.

-

If that's the actual splash screen that pops up when you try to access it (no, I'm not going to go to it and check, I don't want to be on a new and exciting list) then kudos to the person who put that together. Shit goes hard. So do all the agency logos.

-

That’s unfortunately (not really sure) probably the fault of Germany's approach to that.

The approach is usually not to take these websites down but to try to find the people behind them and catch them. The argument is: they will just use a backup and start a “KidFlix 2” or something like that.

Some investigations show that this is not the case and that deleting is very effective. Also, the German approach completely ignores the victim side. They have to deal with old men masturbating to them getting raped online. Very disturbing…

They have to deal with old men masturbating to them getting raped online.

The moment it was posted to wherever they were going to have to deal with that forever. It's not like they can ever know for certain that every copy of it ever made has been deleted.

-

that is still CP, and distributing CP still harms children. eventually they want to move on to the real thing, as porn is not satisfying them anymore.

eventually they want to move on to the real thing, as porn is not satisfying them anymore.

Isn't this basically the same argument as arguing violent media creates killers?