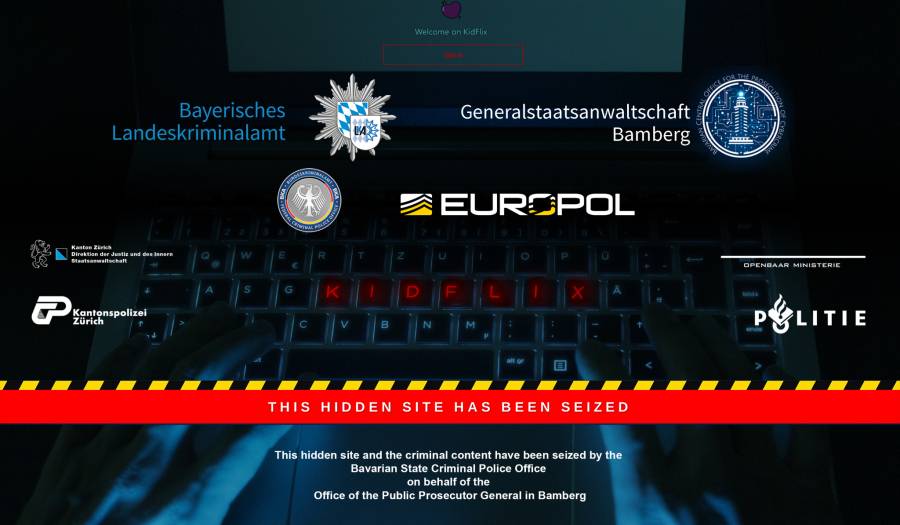

European police say KidFlix, "one of the largest pedophile platforms in the world," busted in joint operation.

-

If that's the actual splash screen that pops up when you try to access it (no, I'm not going to go to it and check, I don't want to be on a new and exciting list) then kudos to the person who put that together. Shit goes hard. So do all the agency logos.

-

That’s unfortunately (not really sure) probably the fault of Germany's approach to that.

The approach is usually not to take these websites down but to try to find the guys behind them and arrest them. The argument is: they will just use a backup and start a “KidFlix 2” or something like that.

Some investigations show that this is not the case and that deleting is very effective. Also, the German approach completely ignores the victim side. They have to deal with old men masturbating to them getting raped online. Very disturbing…

The moment it was posted to wherever they were going to have to deal with that forever. It's not like they can ever know for certain that every copy of it ever made has been deleted.

-

That is still CP, and distributing CP still harms children; eventually they want to move on to the real thing, as porn is not satisfying them anymore.

eventually they want to move on to the real thing, as porn is not satisfying them anymore.

Isn't this basically the same argument as arguing violent media creates killers?

-

Here’s a reminder that you can submit photos of your hotel room to law enforcement, to assist in tracking down CSAM producers. The vast majority of sex trafficking media is produced in hotels. So being able to match furniture, bedspreads, carpet patterns, wallpaper, curtains, etc in the background to a specific hotel helps investigators narrow down when and where it was produced.

-

typical file-sharing networks

Tox messaging network

Matrix channels

I would consider all of these to be trawling dark waters.

...and most of the people who agree with that notion would also consider reading Lemmy to be "trawling dark waters" because it's not a major site run by a massive corporation actively working to maintain advertiser friendliness to maximize profits. Hell, Matrix is practically Lemmy-adjacent in terms of the tech.

-

Well, some pedophiles have argued that AI generated child porn should be allowed, so that real humans are not harmed and exploited.

I'm conflicted on that. Naturally, I'm disgusted, and repulsed.

But if no real child is harmed...

I don't want to think about it, anymore.

Understand you’re not advocating for it, but I do take issue with the idea that AI CSAM will prevent children from being harmed. While it might satisfy some of them (at first, until the high from that wears off and they need progressively harder stuff), a lot of pedophiles are just straight up sadistic fucks and a real child being hurt is what gets them off. I think it’ll just make the “real” stuff even more valuable in their eyes.

-

Geez, two million? Good riddance. Great job everyone!

-

Maybe Jeff Bezos will write an article about him and editorialize about "personal liberty". I have to keep posting this because every day another MAGA lover, religious bigot, or otherwise pretend-upstanding community member is indicted or arrested for heinous acts against women and children.

-

With the number of sites that are easily accessed on the dark net through the hidden wiki and other sites, this might as well have been a honeypot from the start. And it doesn't only apply to CP but to drugs, fake IDs and other shit.

No judge would authorise a honeypot that runs for multiple years, hosting original child abuse material meaning that children are actively being abused to produce content for it. That would be an unspeakable atrocity. A few years ago the Australian police seized a similar website and ran it for a matter of weeks to gather intelligence and even that was considered too far for many.

-

Even then, a common bit you'll hear from people actually defending pedophilia is that the damage caused is a result of how society reacts to it or the way it's done because of the taboo against it rather than something inherent to the act itself, which would be even harder to do research on than researching pedophilia outside a criminal context already is to begin with. For starters, you'd need to find some culture that openly engaged in adult sex with children in some social context and was willing to be examined to see if the same (or different or any) damages show themselves.

And that's before you get into the question of defining where exactly you draw the age line before it "counts" as child sexual abuse, which doesn't have a single, coherent answer. The US alone has at least three different answers to how old someone has to be before having sex with them is not illegal based on their age alone (16-18, with 16 being most common), with many states having exceptions that go lower (ones where the partners are close "enough" in age are pretty common). For example, in my state the age of consent is 16, with an exception if the parties are less than 4 years apart in age. In California, by comparison, if two 17-year-olds have sex, they've both committed a misdemeanor unless they are married.

none of this applies to the comment they cited as an example of defending pedophilia.

-

Understand you’re not advocating for it, but I do take issue with the idea that AI CSAM will prevent children from being harmed. While it might satisfy some of them (at first, until the high from that wears off and they need progressively harder stuff), a lot of pedophiles are just straight up sadistic fucks and a real child being hurt is what gets them off. I think it’ll just make the “real” stuff even more valuable in their eyes.

I feel the same way. I've seen the argument that it's analogous to violence in videogames, but it's pretty disingenuous since people typically play videogames to have fun and for escapism, whereas with CSAM the person seeking it out is doing so in bad faith. A more apt comparison would be people who go out of their way to hurt animals.

-

I used to work in netsec and unfortunately government still sucks at hiring security experts everywhere.

That being said, hiring here is extremely hard: you need to find someone with below-market salary expectations who is willing to work on such an ugly subject. Very few people can do that. I do believe money fixes this though. Just pay people more, and I'm sure every European citizen wouldn't mind a 0.1% tax increase for a more effective investigation force.

Most cases of "we can't find anyone good for this job" can be solved with better pay. Make your opening more attractive, then you'll get more applicants and can afford to be picky.

Getting the money is a different question, unless you're willing to touch the sacred corporate profits....

-

as said before, that person was not advocating for anything. he made a qualified statement, which you answered with examples of kids in cults, and then you flipped out, calling him all kinds of nasty things.

Lmfao as I stated, they said that physical sexual abuse "PROBABLY" harms kids but they have only done research into their voyeurism kink as it applies to children.

Go off defending pedos, though

-

qualifying that as advocating for pedophilia is crazy. all that said is they don't know about studies regarding it so they said probably instead of making a definitive statement. your response is extremely over the top and hostile to someone who didn't advocate for what you're saying they advocate for.

It's none of my business what you do with your time here but if I were you I'd be more cool headed about this because this is giving qanon.

They literally investigated specific time frames of their voyeurism kink in medieval times extensively, but couldn't be bothered to do the most basic of research that sex abuse is harmful to children.

-

Search “AI woman porn miniskirt,”

Did it with safesearch off and got a bunch of women clearly in their late teens or 20s. Plus, I don't want to derail my main point but I think we should acknowledge the difference between a picture of a real child actively being harmed vs a 100% fake image. I didn't find any AI CP, but even if I did, it's in an entirely different universe of morally bad.

r/jailbait

That was, what, fifteen years ago? It's why I said "in the last decade".

"Clearly in their late teens," sure.

Obviously there's a difference with AI porn vs real, that's why I told you to search AI in the first place??? The convo isn't about AI porn, but AI porn uses images to seed their new images including CSAM

-

I feel like what he’s trying to say is that it shouldn’t be the end of the world if a kid sees a sex scene in a movie, like it should be ok for them to know it exists. But the way he phrases it is questionable at best.

When I was a kid I was forced to leave the room when any intimate scenes were in a movie and I honestly do feel like it fucked with my perception of sex a bit. Like it’s this taboo thing that should be hidden away and never discussed.

He wants children to full on watch adults have sex because he has a voyeurism kink. Solved that for you.

-

They literally investigated specific time frames of their voyeurism kink in medieval times extensively, but couldn't be bothered to do the most basic of research that sex abuse is harmful to children.

"they knew some things and didn't know some things" isn't worth getting so worked up over. they knew the mere concept of sex being taboo negatively affected them and didn't want to make definitive statements about things they didn't research. believe it or not lemmy comments are not dissertations and most people just talk and don't bother researching every tangential topic just to make a point they want to make.

-

typical file-sharing networks

Tox messaging network

Matrix channels

I would consider all of these to be trawling dark waters.

-

No judge would authorise a honeypot that runs for multiple years, hosting original child abuse material meaning that children are actively being abused to produce content for it. That would be an unspeakable atrocity. A few years ago the Australian police seized a similar website and ran it for a matter of weeks to gather intelligence and even that was considered too far for many.

"That would be an unspeakable atrocity", yet there is a contradiction in the final sentence. The issue is what evidence there is to prove that such an operation actually works and, as my last point implied, what stops the government from abusing this sort of operation. With "covert" operations like this the outcome can be catastrophic for everyone.