Why I am not impressed by A.I.

-

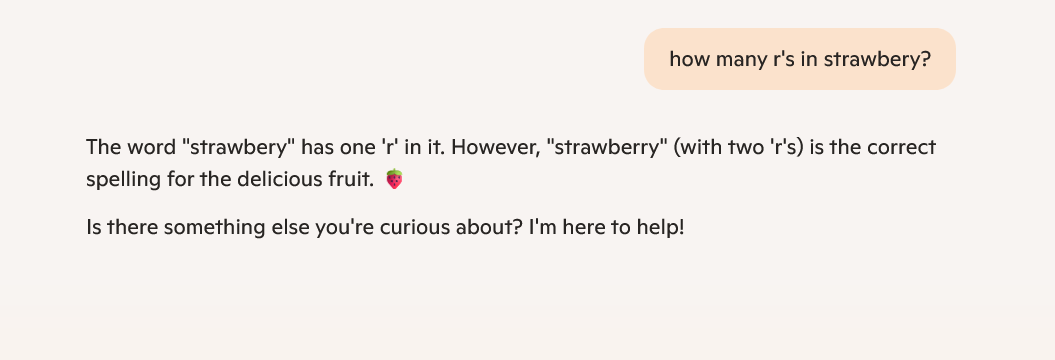

The terrifying thing is that everyone is criticising the LLM as poor, when in fact it excelled at the task.

The question asked was how many Rs are in "strawbery", and it answered 2.

It also detected the typo and offered the correct spelling.

What’s the issue I’m missing?

-

Ask it for a second opinion on medical conditions.

It sounds insane, but they are leaps and bounds better than blindly Googling and diagnosing yourself with every condition under the sun whose symptoms only vaguely match.

Once the LLM helps you narrow in on a couple of possible conditions based on the symptoms, then you can dig deeper into those specific ones, learn more about them, and have a slightly more informed conversation with your medical practitioner.

They’re not a replacement for your actual doctor, but they can help you learn and have better discussions with your actual doctor.

-

The issue you are missing is that the AI answered that there is 1 'r' in 'strawbery', even though the misspelled word contains 2. It then corrected the user's spelling to 'strawberry', only to say that this word has 2 'r's, when it actually has 3.
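For comparison, ordinary code that works on characters gets both counts right trivially. A minimal Python check of the two spellings discussed above (the helper name `count_letter` is just for illustration):

```python
def count_letter(word: str, letter: str) -> int:
    """Count case-insensitive occurrences of a single letter in a word."""
    return word.lower().count(letter.lower())

# Check the misspelling from the prompt and the corrected spelling.
for word in ("strawbery", "strawberry"):
    print(f"{word}: {count_letter(word, 'r')} r's")
# strawbery: 2 r's
# strawberry: 3 r's
```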

-

> Make this sound better: we’re aware of the outage at Site A, we are working as quick as possible to get things back online

How does this work in practice? I suspect you're just going to get an email that takes longer for everyone to read, and doesn't give any more information (or worse, gives incorrect information). Your prompt seems like what you should be sending in the email.

If the model (or its context?) were good enough to actually add useful, accurate information, that might be different.

I think we'll quickly reach the point where a nice, concise message like the one in your prompt is appreciated more than the bloated, normalised version, which people will find insulting.

-

I think I have seen this exact post word for word fifty times in the last year.

-

This, but actually. Don't use an LLM for things LLMs are known to be bad at. The companies selling these tools would do well to list specifically what they're not good at, so that users don't need background knowledge before even using them, not unlike needing to know that one corner of those old iPhones was an antenna and not to bridge it.

-

So can WebMD. We didn't need AI for that. Googling symptoms is a great way to be just dehydrated and suddenly think you're in kidney failure.

-

Still, it’s kinda insane how two years ago we didn’t imagine we would be instructing programs like “be helpful but avoid sensitive topics”.

That was definitely a big step in AI.

-

Yeah, normally my "Make this sound better" or "summarize this for me" prompt is for a longer wall of text that I want to simplify. Talking to non-technical people about a technical issue is not the easiest thing for me, and AI has helped me dumb it down when sending an email.

As for accuracy, you review what it gives you; you don't just copy and send it without review. You'll also have to tweak parts of its output that don't quite make sense, or that use wording you wouldn't typically use. It is fairly accurate in my use cases, though.

-

Uh oh, you’ve blown your cover, robot sir.

-

I think there's a fundamental difference between someone saying "you're holding your phone wrong, of course you're not getting a signal" to millions of people and someone saying "LLMs aren't good at that task you're asking it to perform, but they are good for XYZ."

If someone is using a hammer to cut down a tree, they're going to have a bad time. A hammer is not a useful tool for that job.

-

> As for accuracy, you review what it gives you, you don't just copy and send it without review.

Yeah, I don't get why so many people seem to not get that.

It's like people who were against IntelliSense in IDEs because "What if it suggests the wrong function?" You still need to know what the functions do. If you find something you're unfamiliar with, you check the documentation. You don't just blindly accept it as truth.

Just because it can't replace a person's job doesn't mean it's worthless as a tool.

-

From a linguistic perspective, this is why I am impressed by (or at least, astonished by) LLMs!

-

Yup, the problem with that iPhone (4?) wasn't that it sucked, but that it had limitations. You could just put a case on it and the problem went away.

LLMs are pretty good at a number of tasks, and they're also pretty bad at a number of tasks. They're pretty good at summarizing, but don't trust the summary to be accurate, just to give you a decent idea of what something is about. They're pretty good at generating code, just don't trust the code to be perfect.

You wouldn't use a chainsaw to build a table, but it's pretty good at making big things into small things, and cleaning up the details later with a more refined tool is the way to go.

-

I can already see it...

Ad:

CAN YOU SOLVE THIS IMPOSSIBLE RIDDLE THAT AI CAN'T SOLVE?!

With OP's image. And then it will have the following once you solve it: "congratz, send us your personal details and you'll be added to the hall of fame at CERN Headquarters"

-

We didn’t stop trying to make faster, safer and more fuel-efficient cars after the Model T, even though it could get us from place A to place B just fine. We didn’t stop pushing for digital access to published content, even though we have physical libraries. Just because something satisfies a use case doesn’t mean we should stop advancing technology.

-

Has the number of "r"s changed over that time?

-

There's also an "r" in the first half of the word, "straw", so it was skipping over that 'r' entirely and only counting the 'r's in "berry".

-

I asked Mistral/Brave AI and got this response:

> How Many Rs in Strawberry
>
> The word "strawberry" contains three "r"s. This simple question has highlighted a limitation in large language models (LLMs), such as GPT-4 and Claude, which often incorrectly count the number of "r"s as two. The error stems from the way these models process text through a process called tokenization, where text is broken down into smaller units called tokens. These tokens do not always correspond directly to individual letters, leading to errors in counting specific letters within words.
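To make the tokenization point in that response concrete, here is a toy sketch. The vocabulary and the greedy longest-match rule are hypothetical simplifications, not any real model's tokenizer; production tokenizers (e.g. byte-pair encoding) use learned vocabularies, and different models may split "strawberry" differently. The only point is that the model receives token IDs rather than individual letters.

```python
# Hypothetical toy vocabulary: NOT taken from any real model.
TOY_VOCAB = {"str": 101, "aw": 102, "berry": 103,
             "s": 1, "t": 2, "r": 3, "a": 4, "w": 5, "b": 6, "e": 7, "y": 8}

def toy_tokenize(text: str, vocab: dict[str, int]) -> list[str]:
    """Greedy longest-match tokenization over a fixed vocabulary."""
    tokens = []
    i = 0
    max_len = max(len(piece) for piece in vocab)
    while i < len(text):
        # Try the longest possible piece first, then progressively shorter ones.
        for length in range(min(max_len, len(text) - i), 0, -1):
            piece = text[i:i + length]
            if piece in vocab:
                tokens.append(piece)
                i += length
                break
        else:
            raise ValueError(f"no token covers {text[i]!r}")
    return tokens

tokens = toy_tokenize("strawberry", TOY_VOCAB)
ids = [TOY_VOCAB[t] for t in tokens]
print(tokens)  # ['str', 'aw', 'berry']
print(ids)     # [101, 102, 103]
```

Under these assumptions the model would see only `[101, 102, 103]`; nothing in those three integers directly encodes how many 'r's they spell, which is consistent with the explanation quoted above.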

-

Sounds like a perfectly sane idea: https://freethoughtblogs.com/pharyngula/2025/02/05/ai-anatomy-is-weird/