-

Today I Learned (TIL)

You learn something new every day; what did you learn today?

/c/til is a community for any true knowledge that you would like to share, regardless of topic or of source.

Share your knowledge and experience!

Rules

- Information must be true

- Follow site rules

- No, you don’t have to have literally learned the fact today

- Posts must be about something you learned

51 Topics655 Posts -

22 Topics81 Posts

-

Linux

From Wikipedia, the free encyclopedia

Linux is a family of open source Unix-like operating systems based on the Linux kernel, an operating system kernel first released on September 17, 1991 by Linus Torvalds. Linux is typically packaged in a Linux distribution (or distro for short).

Distributions include the Linux kernel and supporting system software and libraries, many of which are provided by the GNU Project. Many Linux distributions use the word “Linux” in their name, but the Free Software Foundation uses the name GNU/Linux to emphasize the importance of GNU software, causing some controversy.

Rules

- Posts must be relevant to operating systems running the Linux kernel, GNU/Linux or otherwise.

- No misinformation

- No NSFW content

- No hate speech, bigotry, etc

Related Communities

Community icon by Alpár-Etele Méder, licensed under CC BY 3.0

2k Topics29k Posts -

0 Topics0 Posts

-

Artificial Intelligence

Welcome to the AI Community!

Let’s explore AI passionately, foster innovation, and learn together. Follow these guidelines for a vibrant and respectful community:

- Be kind and respectful.

- Share high-quality contributions.

- Stay on-topic.

- Enhance accessibility.

- Verify information.

- Encourage meaningful discussions.

You can access the AI Wiki at the following link: AI Wiki

Let’s create a thriving AI community together!

126 Topics305 Posts -

Art

This is a community for art in any medium. Welcome!

Rules:

- This is a community to discuss all things related to art. Posts should be relevant to art, such as sharing art or news about art. To clarify, when we say “any medium,” this includes media such as painting, film, music, literature, performance, video games, etc.

- Keep things civil. Critiquing art is fine but attacking other users for their opinions is not.

- Avoid excessive self-promotion. Yes, this is subjective and will be based on how popular this community becomes overall. If you can see a post about your own work on the first few pages, don’t post another.

- Follow site-wide rules

0 Topics0 Posts -

artporn

Wander the gallery. Look at the art. Be polite. If you feel able, please post some great art :)

30 Topics78 Posts -

Call of Duty

Welcome to the Call of Duty subreddit, a community for fans to discuss Call of Duty®

0 Topics0 Posts -

Dungeons and Dragons

A community for discussion of all things Dungeons and Dragons! This is the catch-all community for anything relating to Dungeons and Dragons, though we encourage you to seek out our Networked Communities listed below!

/c/DnD Network Communities

- Dungeons and Dragons - Art

- DM Academy

- Dungeons and Dragons - Homebrew

- Dungeons and Dragons - Memes and Comics

- Dungeons and Dragons - AI

- Dungeons and Dragons - Looking for Group

Other DnD and related Communities to follow*

- Tabletop Miniatures

- RPG @lemmy.ml

- TTRPGs @lemmy.blahaj.zone

- Battlemaps

- Map Making

- Fantasy, e.g. books, stories, etc.

- Worldbuilding @ lemmy.world

- Worldbuilding @ lemmy.ml

- OSR

- OSR @lemm.ee

- Clacksmith

- RPG greentext

- Tyranny of Dragons

- DnD @lemmy.ca

- DnD [email protected]

DnD/RPG Podcasts

*Please follow the rules of these individual communities. Not all of them are strictly DnD related, but they may be of interest to DnD fans.

Rules (Subject to Change)

- Be a Decent Human Being

- Credit OC content (self or otherwise)

- Posting news articles: include the source name and exact title from article

Format: [Source Name] Article Title

- Posts must have something to do with Dungeons and Dragons

- No piracy. This includes links to torrent sites, hosted content, streaming content, etc. Please see this post for details

- Zero tolerance for Racism/Sexism/Ableism/etc.

- No NSFW content

- Abide by the rules of lemmy.world

37 Topics254 Posts -

Free and Open Source Software

If it’s free and open source and it’s also software, it can be discussed here. Subcommunity of Technology.

This community’s icon was made by Aaron Schneider, under the CC-BY-NC-SA 4.0 license.

162 Topics1k Posts -

World News

Breaking news from around the world.

News that is American but has an international facet may also be posted here.

Guidelines for submissions:

- Where possible, post the original source of information.

- If there is a paywall, you can use alternative sources or provide an archive.today, 12ft.io, etc. link in the body.

- Do not editorialize titles. Preserve the original title when possible; edits for clarity are fine.

- Do not post ragebait or shock stories. These will be removed.

- Do not post tabloid or blogspam stories. These will be removed.

- Social media should be a source of last resort.

These guidelines will be enforced on a know-it-when-I-see-it basis.

For US News, see the US News community.

This community’s icon was made by Aaron Schneider, under the CC-BY-NC-SA 4.0 license.

261 Topics1k Posts -

-

0 Topics0 Posts

-

ADHD

A casual community for people with ADHD

Values:

Acceptance, Openness, Understanding, Equality, Reciprocity.

Rules:

- No abusive, derogatory, or offensive post/comments.

- No porn, gore, spam, or advertisements allowed.

- Do not request donations.

- Do not link to other social media or paywalled content.

- Do not gatekeep or diagnose.

- Mark NSFW content accordingly.

- No racism, homophobia, sexism, ableism, or ageism.

- Respectful venting, including dealing with oppressive neurotypical culture, is okay.

- Discussing other neurological problems like autism, anxiety, PTSD, and brain injury is allowed.

- Discussions regarding medication are allowed as long as you are describing your own situation and not telling others what to do (only qualified medical practitioners can prescribe medication).

Encouraged:

- Funny memes.

- Welcoming and accepting attitudes.

- Questions on confusing situations.

- Seeking and sharing support.

- Engagement in our values.

Relevant Lemmy communities:

lemmy.world/c/adhd will happily promote other ND communities as long as said communities demonstrate that they share our values.

129 Topics2k Posts -

Computer RPGs

Community for CRPG and other RPG discussions. Focus is on CRPGs, but discussion around JRPGs, ARPGs and hybrid games with RPG components is also welcome.

Tabletop/pen & paper RPG discussion is not a good fit for this community. Check out [email protected] for TT/P&P RPG discussions.

Memes are not banned, but the overwhelming focus is on discussions, releases and articles. Try and post memes (on an occasional basis) that would make people who don’t like memes admit “OK! That was a good one!”.

Rules:

1) Follow the Lemmy.world Rules - mastodon.world/about

2) Be kind. No bullying, harassment, racism, sexism etc. against other users.

3) No spam, illegal content, or NSFW content (no games with NSFW images/video).

4) Please stay on topic, cRPG adjacent games or even JRPGs are fine. Try to include topics / games that have a strong roleplaying component to them.

Some other gaming communities across Lemmy:

- Adventure Games - [email protected]

- Automation Games - [email protected]

- Cozy Games - [email protected]

- City Builders - [email protected]

- Incremental - [email protected]

- Indie Games (variety) - [email protected]

- Lifesim Games - [email protected]

- Open Source Games - [email protected]

- Roguelike Games - [email protected]

- RTS Games - [email protected]

- Space Games (variety) - [email protected]

- Strategy Games - [email protected]

- Turn-based Strategy - [email protected]

- Tycoon / Business Sim Games - [email protected]

- Video Game Art - [email protected]

- Video Game Music - [email protected]

- Video Game Questions - [email protected]

Game-specific communities on Lemmy:

- Baldur’s Gate 3 - [email protected]

- Cyberpunk - [email protected]

- Deus Ex - [email protected]

- Elder Scrolls - [email protected]

- Fallout - [email protected]

- Lovecraft Mythos - [email protected]

- The Witcher - [email protected]

Thank you to macniel for the community icon!

196 Topics630 Posts -

F-Droid

F-Droid is an installable catalogue of FOSS (Free and Open Source Software) applications for the Android platform. The client makes it easy to browse, install, and keep track of updates on your device.

IzzyOnDroid is an F-Droid style repository for Android apps, provided by IzzyOnDroid. Applications in this repository are official binaries built by the original application developers, taken from their respective repositories (mostly GitHub).

0 Topics0 Posts -

Firefox

The latest news and developments on Firefox and Mozilla, a global non-profit that strives to promote openness, innovation and opportunity on the web.

You can subscribe to this community from any Kbin or Lemmy instance:

Related

- Firefox Customs: !FirefoxCSS

- Thunderbird: !Thunderbird

Rules

While we are not an official Mozilla community, we have adopted the Mozilla Community Participation Guidelines as far as it can be applied to a bin.

- Always be civil and respectful

Don't be toxic, hostile, or a troll, especially towards Mozilla employees. This includes gratuitous use of profanity.

- Don't be a bigot

No form of bigotry will be tolerated.

- Don't post security compromising suggestions

If you do, include an obvious and clear warning.

- Don't post conspiracy theories

Especially ones about nefarious intentions or funding. If you're concerned: Ask. Please don’t fuel conspiracy thinking here. Don’t try to spread FUD, especially against reliable privacy-enhancing software. Extraordinary claims require extraordinary evidence. Show credible sources.

- Don't accuse others of shilling

Send honest concerns to the moderators and/or admins, and we will investigate.

- Do not remove your help posts after they receive replies

Half the point of asking questions in a public sub is so that everyone can benefit from the answers—which is impossible if you go deleting everything behind yourself once you've gotten yours.

231 Topics1k Posts -

Gaming

The Lemmy.zip Gaming Community

For news, discussions and memes!

Community Rules

This community follows the Lemmy.zip Instance rules, with the inclusion of the following rule:

- No NSFW content

You can see Lemmy.zip’s rules by going to our Code of Conduct.

What to Expect in Our Code of Conduct:

- Respectful Communication: We strive for positive, constructive dialogue and encourage all members to engage with one another in a courteous and understanding manner.

- Inclusivity: Embracing diversity is at the core of our community. We welcome members from all walks of life and expect interactions to be conducted without discrimination.

- Privacy: Your privacy is paramount. Please respect the privacy of others just as you expect yours to be treated. Personal information should never be shared without consent.

- Integrity: We believe in the integrity of speech and action. As such, honesty is expected, and deceptive practices are strictly prohibited.

- Collaboration: Whether you’re here to learn, teach, or simply engage in discussion, collaboration is key. Support your fellow members and contribute positively to shared learning and growth.

If you enjoy reading legal stuff, you can check it all out at legal.lemmy.zip.

2k Topics4k Posts -

0 Topics0 Posts

-

KAVA

This is an uncensored community open to all and it pertains to all things Piper methysticum.

Unlike r/Kava on Reddit, this community is not subject to the capricious and arbitrary whims of the Fijian dilettante pseudonymously known as u/sandolllars, an unelected despot who surreptitiously removes posts and comments there if they don’t conform to his blinkered and unnuanced purview of kava.

This community was created by someone who comes with not only a superior understanding of kava and various interdisciplinary sciences than him, but also an unparalleled impartiality wholly conducive to truly uninhibited discussion of every possible facet of this wondrous Oceanic plant.

This community will hopefully grow to become your one-stop shop for the most incisive, brutally-honest, insider-info-rich, conjecture-busting discourse on kava on the entire Internet, decentralized and far-removed from the ham-fisted “moderation” contaminating corporate platforms like Reddit and Facebook.

I want to stress that everyone is welcome: whether you come from Reddit or Facebook or anywhere else is a non-issue, and your posts and comments (veracious or otherwise) will under no circumstances be removed unless they constitute off-topic spam or otherwise contain graphic pornography or violence. Everything else will be okayed by default, no matter how “heated” it becomes or how “divisive” it is. You do not have to fear having your words stifled here, much unlike elsewhere.

Even sellouts and wannabe bounty-hunters are welcome here, too, but they just might not be showered with affection! Don’t worry: my skin is as thick as crocodile integument, so abuses will be tolerated (meaning I’ll virtually never remove anything, again, unlike other moderators and admins who invariably engage in thread manipulation games), but potentially reciprocated as well.

(As a heuristic, one needn’t do more than look at his dopey Reddit avatar to understand that he isn’t to be taken seriously.)

0 Topics0 Posts -

pics

Rules:

1. Please mark original photos with [OC] in the title if you’re the photographer.

2. Pictures containing a politician from any country or planet are prohibited; this is a community-voted rule.

3. Image must be a photograph, no AI or digital art.

4. No NSFW/Cosplay/Spam/Trolling images.

5. Be civil. No racism or bigotry.

Photo of the Week Rule(s):

1. On Fridays, the most upvoted original, marked [OC], photo posted between Friday and Thursday will be the next week’s banner and featured photo.

2. The weekly photos will be saved for an end of the year run off.

Instance-wide rules always apply. mastodon.world/about

852 Topics7k Posts -

Privacy

A place to discuss privacy and freedom in the digital world.

Privacy has become a very important issue in modern society. With companies and governments constantly abusing their power, more and more people are waking up to the importance of digital privacy.

In this community everyone is welcome to post links and discuss topics related to privacy.

Some Rules

- Posting a link to a website containing tracking isn’t great. If the contents of the website are behind a paywall, maybe copy them into the post

- Don’t promote proprietary software

- Try to keep things on topic

- If you have a question, please try searching for previous discussions; maybe it has already been answered

- Reposts are fine, but should have at least a couple of weeks in between so that the post can reach a new audience

- Be nice :)

Related communities

Many thanks to @gary_host_laptop for the logo design :)

2k Topics21k Posts -

Only Pro-The True Kingdom

If you are reading this, then it's already too late.

This is a fictional community meant to enhance my creative skills, as well as other people's creative skills, and to waste more time on Lemmy.

Why? You might ask… Well, I had never seen anyone apply this idea before, and we are entering an age where a lot of stories are going to be AI-generated.

Why a king? Well, I need to be super motivated to engage, so hopefully this community stays alive.

What is the content like here? This is probably going to be something like just me posting and shitposting, plus roleplay/fiction stories and lore.

Why should you join? You shouldn’t. This community might not be something that benefits you or even advances your creativity. You can treat it like unfinished, unprofessional artwork that is almost never going to be finished. I might delete this community later, I might not; who knows? But I really don’t want to surrender to AI and lose my creativity, so hopefully some people might enjoy role-playing as my minions, and the friction creates fire (which is stories and lore). I want to stay weird; I don’t want to become another gear in the machine of life. Who knows, maybe I click with someone in this community and we get something real. I don’t like bullshit and I don’t like to invite greatness that I am not worthy of; I just want to have a place that is super comfy to me. The world is getting ruined, people are getting more conservative, social media is being ruined, climate change is going full speed, and we are in a loneliness epidemic. Most people are evil and rude and we are in the hopeless age. Fiction can fix all of that and more. If, after reading all of this, you became interested for one reason or another, you can subscribe.

What

This is a community where pro kingdom citizens can come to embrace their only king.

Rules:

- No other kings.

- No negative stuff about the king.

- You can show your loyalty and devotion to the king only.

What can you post?

- Talk about the greatness of the king.

- Stories involving the king.

- Legends about the king.

- Miracles you witnessed by the king.

0 Topics0 Posts -

riot

0 Topics0 Posts -

Space

A community to discuss space & astronomy through a STEM lens

Rules

- Be respectful and inclusive. This means no harassment, hate speech, or trolling.

- Engage in constructive discussions by discussing in good faith.

- Foster a continuous learning environment.

> Please keep politics to a minimum. When science is the focus, intersection with politics may be tolerated as long as the discussion is constructive and science remains the focus. As a general rule, political content posted directly to the instance’s local communities is discouraged and may be removed. You can of course engage in political discussions in non-local communities.

Related Communities

🔭 Science- [email protected] - [email protected] - [email protected] - [email protected] - [email protected] - [email protected] - [email protected]

🚀 Engineering- [email protected] - [email protected] - [email protected]

🌌 Art and Photography- [email protected] - [email protected]

Other Cool Links

443 Topics2k Posts -

Android

The new home of /r/Android on Lemmy and the Fediverse!

Android news, reviews, tips, and discussions about rooting, tutorials, and apps.

🔗Universal Link: [email protected]

💡Content Philosophy:

Content which benefits the community (news, rumours, and discussions) is generally allowed and is valued over content which benefits only the individual (technical questions, help buying/selling, rants, self-promotion, etc.) which will be removed if it’s in violation of the rules.

Support, technical, or app related questions belong in: [email protected]

For fresh communities, lemmy apps, and instance updates: [email protected]

📰Our communities below

Rules

- Stay on topic: All posts should be related to the Android OS or ecosystem.

- No support questions, recommendation requests, rants, or bug reports: Posts must benefit the community rather than the individual. Please post to [email protected].

- Describe images/videos, no memes: Please include a text description when sharing images or videos. Post memes to [email protected].

- No self-promotion spam: Active community members can post their apps if they answer any questions in the comments. Please do not post links to your own website, YouTube, blog content, or communities.

- No reposts or rehosted content: Share only the original source of an article, unless it’s not available in English or requires logging in (like Twitter). Avoid reposting the same topic from other sources.

- No editorializing titles: You can add the author or website’s name if helpful, but keep article titles unchanged.

- No piracy or unverified APKs: Do not share links or direct people to pirated content or unverified APKs, which may contain malicious code.

- No unauthorized polls, bots, or giveaways: Do not create polls, use bots, or organize giveaways without first contacting mods for approval.

- No offensive or low-effort content: Don’t post offensive or unhelpful content. Keep it civil and friendly!

- No affiliate links: Posting affiliate links is not allowed.

Quick Links

Our Communities+ [email protected] + [email protected] + [email protected] + [email protected] + [email protected] + [email protected] + [email protected] + [email protected] + [email protected] + [email protected] + [email protected] + [email protected] + [email protected] + [email protected] + [email protected] + [email protected] + [email protected] + [email protected]

Lemmy App List Chat and More+ Android Chat + Lemdro.id Chat + Mods Chat + Lemdro.id Admin Chat + Reddit

613 Topics7k Posts -

-

Asklemmy

A loosely moderated place to ask open-ended questions

If your post meets the following criteria, it’s welcome here!

- Open-ended question

- Not offensive: at this point, we do not have the bandwidth to moderate overtly political discussions. Assume best intent and be excellent to each other.

- Not regarding using or support for Lemmy: context, see the list of support communities and tools for finding communities below

- Not ad nauseam inducing: please make sure it is a question that would be new to most members

- An actual topic of discussion

Looking for support?

Looking for a community?

- Lemmyverse: community search

- sub.rehab: maps old subreddits to fediverse options, marks official as such

- [email protected]: a community for finding communities

Icon by @[email protected]

2k Topics43k Posts -

Europe

News and information from Europe 🇪🇺

(Current banner: La Mancha, Spain. Feel free to post submissions for banner images.)

Rules (2024-08-30)

- This is an English-language community. Comments should be in English. Posts can link to non-English news sources when providing a full-text translation in the post description. Automated translations are fine, as long as they don’t overly distort the content.

- No links to misinformation or commercial advertising. When you post outdated/historic articles, add the year of publication to the post title. Infographics must include a source and a year of creation; if possible, also provide a link to the source.

- Be kind to each other, and argue in good faith. Don’t post direct insults nor disrespectful and condescending comments. Don’t troll nor incite hatred. Don’t look for novel argumentation strategies at Wikipedia’s List of fallacies.

- No bigotry, sexism, racism, antisemitism, islamophobia, dehumanization of minorities, or glorification of National Socialism. We follow German law; don’t question the statehood of Israel.

- Be the signal, not the noise: Strive to post insightful comments. Add “/s” when you’re being sarcastic (and don’t use it to break rule no. 3).

- If you link to paywalled information, please provide also a link to a freely available archived version. Alternatively, try to find a different source.

- Light-hearted content, memes, and posts about your European everyday belong in other communities.

- Don’t evade bans. If we notice ban evasion, that will result in a permanent ban for all the accounts we can associate with you.

- No posts linking to speculative reporting about ongoing events with unclear backgrounds. Please wait at least 12 hours. (E.g., do not post breathless reporting on an ongoing terror attack.)

- Always provide context with posts: Don’t post uncontextualized images or videos, and don’t start discussions without giving some context first.

(This list may get expanded as necessary.)

Posts that link to the following sources will be removed

- on any topic: Al Mayadeen, brusselssignal:eu, citjourno:com, europesays:com, Breitbart, Daily Caller, Fox, GB News, geo-trends:eu, news-pravda:com, OAN, RT, sociable:co, any AI slop sites (when in doubt please look for a credible imprint/about page), change:org (for privacy reasons)

- on Middle-East topics: Al Jazeera

- on Hungary: Euronews

Unless they’re the only sources, please also avoid The Sun, Daily Mail, any “thinktank” type organization, and non-Lemmy social media. Don’t link to Twitter directly, instead use xcancel.com. For Reddit, use old:reddit:com

(Lists may get expanded as necessary.)

Ban lengths, etc.

We will use some leeway to decide whether to remove a comment.

If need be, there are also bans: 3 days for lighter offenses, 7 or 14 days for bigger offenses, and permanent bans for people who don’t show any willingness to participate productively. If we think the ban reason is obvious, we may not specifically write to you.

If you want to protest a removal or ban, feel free to write privately to the primary mod account @[email protected]

6k Topics39k Posts -

Funny: Home of the Haha

Welcome to /c/funny, a place for all your humorous and amusing content.

Looking for mods! Send an application to Stamets!

Our Rules:

- Keep it civil. We’re all people here. Be respectful to one another.

- No sexism, racism, homophobia, transphobia or any other flavor of bigotry. I should not need to explain this one.

- Try not to repost anything posted within the past month. Beyond that, go for it. Not everyone is on every site all the time.

Other Communities:

- /c/[email protected] - Star Trek chat, memes and shitposts

- /c/[email protected] - General memes

243 Topics3k Posts -

-

Games

Welcome to the largest gaming community on Lemmy! Discussion for all kinds of games. Video games, tabletop games, card games etc.

Rules

1. Submissions have to be related to games

Video games, tabletop, or otherwise. Posts not related to games will be deleted. This community is focused on games, of all kinds. Any news item or discussion should be related to gaming in some way.

2. No bigotry or harassment, be civil

No bigotry, hardline stance. Try not to get too heated when entering into a discussion or debate. We are here to talk and discuss about one of our passions, not fight or be exposed to hate. Posts or responses that are hateful will be deleted to keep the atmosphere good. If repeatedly violated, not only will the comment be deleted but a ban will be handed out as well. We judge each case individually.

3. No excessive self-promotion

Try to keep it to 10% self-promotion / 90% other stuff in your post history. This is to prevent people from posting for the sole purpose of promoting their own website or social media account.

4. Stay on-topic; no memes, funny videos, giveaways, reposts, or low-effort posts

This community is mostly for discussion and news. Remember to search for the thing you’re submitting before posting to see if it’s already been posted. We want to keep the quality of posts high. Therefore, memes, funny videos, low-effort posts and reposts are not allowed. We prohibit giveaways because we cannot be sure that the person holding the giveaway will actually do what they promise.

5. Mark Spoilers and NSFW

Make sure to mark your stuff or it may be removed. No one wants to be spoiled. Therefore, always mark spoilers. Similarly mark NSFW, in case anyone is browsing in a public space or at work.

6. No linking to piracy

Don’t share it here, there are other places to find it. Discussion of piracy is fine. We don’t want us moderators or the admins of lemmy.world to get in trouble for linking to piracy. Therefore, any link to piracy will be removed. Discussion of it is of course allowed.

Authorized Regular Threads

Related communities

PM a mod to add your own

Video games

Generic

- [email protected]: Our sister community, focused on PC and console gaming. Memes are allowed.

- [email protected]: For all your screenshot needs, to share your love for game graphics.

- [email protected]: A community to share your love for video game music

Help and suggestions

By platform- [email protected] - [email protected]

By type- [email protected] - [email protected] - [email protected] - [email protected] - [email protected] - [email protected] - [email protected] - [email protected] - [email protected]

By games- [email protected] - [email protected] - [email protected] - [email protected] - [email protected] - [email protected] - [email protected] - [email protected] - [email protected] - [email protected] - [email protected] - [email protected] - [email protected]

Language specific- [email protected]: French

3k Topics42k Posts -

0 Topics0 Posts

-

memes

Community rules

1. Be civil

No trolling, bigotry or other insulting / annoying behaviour

2. No politics

This is a non-politics community. For political memes please go to [email protected]

3. No recent reposts

Check for reposts when posting a meme, you can only repost after 1 month

4. No bots

No bots without the express approval of the mods or the admins

5. No Spam/Ads/AI Slop

No advertisements or spam. This is an instance rule and the only way to live. We also consider AI slop to be spam in this community, and it is subject to removal.

A collection of some classic Lemmy memes for your enjoyment

Sister communities

- [email protected] : Star Trek memes, chat and shitposts

- [email protected] : Lemmy Shitposts, anything and everything goes.

- [email protected] : Linux themed memes

- [email protected] : for those who love comic stories.

3k Topics50k Posts -

PC Gaming

For PC gaming news and discussion. PCGamingWiki

Rules:

- Be Respectful.

- No Spam or Porn.

- No Advertising.

- No Memes.

- No Tech Support.

- No questions about buying/building computers.

- No game suggestions, friend requests, surveys, or begging.

- No Let’s Plays, streams, highlight reels/montages, random videos or shorts.

- No off-topic posts/comments, within reason.

- Use the original source, no clickbait titles, no duplicates. (Submissions should be from the original source if possible, unless from paywalled or non-English sources. If the title is clickbait or lacks context you may lightly edit the title.)

0 Topics0 Posts -

0 Topics0 Posts

-

Trees

A community centered around cannabis.

In the spirit of making Trees a welcoming and uplifting place for everyone, please follow our Commandments.

- Be Cool.

- I’m not kidding. Be nice to each other.

- Avoid low-effort posts

71 Topics470 Posts -

World News

A community for discussing events around the World

Rules:

-

Rule 1: posts have the following requirements:

- Post news articles only

- Video links are NOT articles and will be removed.

- Title must match the article headline

- Not United States Internal News

- Recent (Past 30 Days)

- Screenshots/links to other social media sites (Twitter/X/Facebook/Youtube/reddit, etc.) are explicitly forbidden, as are link shorteners.

- Blogsites are treated in the same manner as social media sites. Medium, Blogger, Substack, etc. are not valid news links regardless of who is posting them. Yes, legitimate news sites use Blogging platforms, they also use Twitter, Facebook, and YouTube and we don’t allow those links either.

-

Rule 2: Do not copy the entire article into your post. Summarizing the key points in 1-2 paragraphs is allowed (even encouraged!), but large segments of articles posted in the body will result in the post being removed. If you have to stop and think “Is this fair use?”, it probably isn’t. Archive links, especially the ones created on link submission, are absolutely allowed but those that avoid paywalls are not.

-

Rule 3: Opinion articles, or articles based on misinformation/propaganda, may be removed. Sources that have a Low or Very Low factual reporting rating or MBFC Credibility Rating may be removed.

-

Rule 4: Posts or comments that are homophobic, transphobic, racist, sexist, anti-religious, or ableist will be removed. “Ironic” prejudice is just prejudiced.

-

Posts and comments must abide by the lemmy.world terms of service UPDATED AS OF 10/19

-

Rule 5: Keep it civil. It’s OK to say the subject of an article is behaving like a (pejorative, pejorative). It’s NOT OK to say another USER is (pejorative). Strong language is fine, just not directed at other members. Engage in good-faith and with respect! This includes accusing another user of being a bot or paid actor. Trolling is uncivil and is grounds for removal and/or a community ban.

Similarly, if you see posts along these lines, do not engage. Report them, block them, and live a happier life than they do. We see too many slapfights that boil down to “Mom! He’s bugging me!” and “I’m not touching you!” Going forward, slapfights will result in removed comments and temp bans to cool off.

-

Rule 6: Memes, spam, other low-effort posts, reposts, misinformation, advocacy of violence, off-topic or offensive content, trolling, and posts about the moderators or other meta topics may be removed at any time.

-

Rule 7: We didn’t USED to need a rule about how many posts one could make in a day, then someone posted NINETEEN articles in a single day. Not comments, FULL ARTICLES. If you’re posting more than say, 10 or so, consider going outside and touching grass. We reserve the right to limit over-posting so a single user does not dominate the front page.

We ask that users report any comment or post that violates the rules and use critical thinking when reading, posting, or commenting. Users who post off-topic spam, advocate violence, have multiple comments or posts removed, weaponize reports, or violate the code of conduct will be banned.

All posts and comments will be reviewed on a case-by-case basis. This means that some content that violates the rules may be allowed, while other content that does not violate the rules may be removed. The moderators retain the right to remove any content and ban users.

Lemmy World Partners

News [email protected]

Politics [email protected]

World Politics [email protected]

Recommendations

For Firefox users, there is a media bias / propaganda / fact check plugin.

addons.mozilla.org/…/media-bias-fact-check/

- Consider including the article’s mediabiasfactcheck.com/ link

7k Topics70k Posts -

-

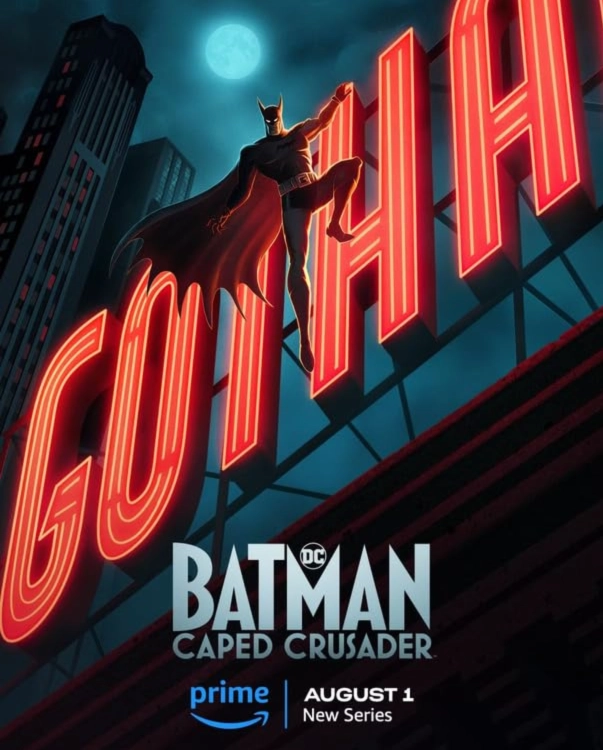

Batman

The Batman community we deserve. Anything and everything about the Dark Knight of Gotham from across all media.

Rules:

- Be civil

- Tag spoiler posts with '[Spoiler(s)]'

- No SPAMing or trolling

- Only relevant posts

- Credit artist(s) when possible

- Let people like what they like

- Follow all Lemmy.world rules

Please report any rule violations.

0 00 Topics0 Posts

0 00 Topics0 Posts -

0 Topics0 Posts

-

donuts

0 00 Topics0 Posts -

Jungle

Welcome Junglist to the lemmy.world jungle community! Jungle music discussions, posts and memes.

DnB heads, check out:

- [email protected]

If you are sharing your own music/mixes, please add [Self Post] or [Self Mix] to the end so we know it’s yours.

Feel free to leave links to music, socials, whatever in the description. Please, no flooding or spamming.

Self promotion and event promotions are allowed. Again, please don’t spam.

Nearly everything goes, just a few simple rules.

- Be respectful

- No NSFW/NSFL content.

- No hate speech of any kind

- No personal attacks

Spotify Playlist: open.spotify.com/playlist/4OpUIuT1rUnE1TDMGDDA5T

Youtube playlist: youtube.com/playlist?list=PLmRulcmQQ4sql-KP48e7Zp…

Related Communities:

0 00 Topics0 Posts -

0 Topics0 Posts

-

Palestine

A community to discuss everything Palestine.

Rules:

-

Posts can be in Arabic or English.

-

Please add a flair in the title of every post. Example: “[News] Israel annexes the West Bank”, “[Culture] Musakhan is the nicest food in the world!”, “[Question] How many Palestinians live in Jordan?”

List of flairs: [News] [Culture] [Discussion] [Question] [Request] [Guide]

0 00 Topics0 Posts -

-

0 Topics0 Posts

-

Signal Jam

A privacy podcast, for everyone.

The official Lemmy community. Hang out here to engage with other listeners and privacy enthusiasts, share knowledge, and learn more.

Our other official channels:

Rules

- Please, keep this space free of judgement and elitism. Remember: privacy is a spectrum, and everyone’s threat model is different.

- If you’re going to make a bold claim, do so with a source.

0 00 Topics0 Posts -

Thrawn - for our favorite Grand Admiral

A community for discussing all things Thrawn.

Banner art from part 6 of the comic series Thrawn, icon art by daniel-morpheus.

0 00 Topics0 Posts -

Two Goobers — Just the Two of Us

ABOUT

A community for wholesome memes and pics about you and the person you care about most in the world.

Whether they be your best friend, a family member, romantic partner, or anyone else (like zucchinis!), this is the perfect place for finding and sharing memes / pics that make you feel warm inside.

They can be sappy, lovey-dovey, or full of goober energy.

RULES

- Be civil - This is a wholesome community. Hate speech, sexism, slurs, or other similar talk will not be tolerated. Don’t cause arguments in the comments.

POSTING GUIDELINES

- Pics should be about two people who care for and love each other

- Post titles should be about the love shared between the two people

- No hateful or cruel pics. Only wholesome ones (a bit of gremlin energy is allowed)

- No NSFW or suggestive content

- No politics

- Do your best to check for reposts before submitting a pic

20 Topics39 Posts -

0 Topics0 Posts

-

33 Topics114 Posts

-

0 Topics0 Posts

-

Gunners

Victoria Concordia Crescit

We are a community for supporters of the North London-based football club Arsenal F.C.

Sister Community: lemmy.world/c/arsenalwfc

Join our FPL with League Code: tt2t8e

If old posts remain pinned on other instances, comment on the Daily Discussion with a screenshot!

0 00 Topics0 Posts -

Lietuva

0 00 Topics0 Posts -

Disney Lorcana TCG

The Disney Lorcana TCG Community for Lemmy! Come and join us for news and discussions!

Rules:

- Stay on topic.

- No hate speech or personal attacks.

- Be a decent human.

- Pixelborn/Project Inklore (and other digital Lorcana simulators) content is allowed.

- No NSFW content, shilling, self promotion, ads, spam, low effort posts, trolling, etc.

- All bots must have mod permission prior to implementation and must follow instance-wide rules. For lemmy.world bot rules click here

- Have fun!

What is Lorcana?

- Lorcana is a trading card game (TCG) announced by Disney and developed in partnership with designers at Ravensburger. You, as an “illumineer,” will summon alternate versions of Disney characters (both heroes and villains) in an attempt to be the first to reach 20 points (called Lore).

- It is similar to other TCGs like Magic: The Gathering (MTG) and Pokémon.

- Disney Lorcana Rules – How To Play Lorcana – FULL Beginner’s Guide

How does it play?

- Check out these videos online with the game’s Co-Designer, Ryan Miller. He talks about the game and then they hop right into a match. We are not affiliated with these youtube channels:

Is it fun and does it have depth?

- I will let others in the TCG space speak to this. Here are some reviews and first impressions:

Resources:

General:

- Official Lorcana Website

- Official Companion App for Android/iOS

- Disney Lorcana TCG Resources

- Player’s Guide PDF (English)

- Buy and Sell Cards on TCGPlayer

3rd Party Resources:

- Deck Building:

- Proxies:

- Make your own cards with your own art and attributes:

- vintageccg.com/disney-lorcana-online-card-creator…

- Fun for making cards that don’t exist (yet)!

MSRP Pricing Information Summary - The First Chapter:

- Starter decks:

- $16.99 - USA

- $21.99 - Canada

- €17.99 - Europe

- Sleeved boosters:

- $5.99 - USA

- $7.99 - Canada

- €4.99 - Europe

- Booster Box:

- $143.76 - USA

- Gift set:

- $29.99 - USA

- $39.99 - Canada

- €27.99 - Europe

- Illumineer’s Trove:

- $49.99 - USA

- $64.99 - Canada

- €49.99 - Europe

19 Topics42 Posts -

Medicine

This is a community for medical professionals. Please see the Medical Community Hub for other communities.

Official Lemmy community for /r/Medicine.

[email protected] is a virtual lounge for physicians and other medical professionals from around the world to talk about the latest advances, controversies, ask questions of each other, have a laugh, or share a difficult moment.

This is a highly moderated community. Please read the rules carefully before posting or commenting.

Related Communities

- Medical Community Hub

- Medicine (📍)

- Medicine Canada

- Premed

- Premed Canada

- Public Health

See the pinned post in the Medical Community Hub for links and descriptions. link ([email protected])

Rules

Violations may result in a warning, removal, or ban based on moderator discretion. The rule numbers correspond to those on /r/Medicine, and differences are listed where relevant. Please also remember that the instance rules for mander.xyz apply.

-

Flairs & Starter Comment: Lemmy does not have user flairs, but you are welcome to highlight your role in the healthcare system, however you feel is appropriate. Please also include a starter comment to explain why the link is of interest to the community and to start the conversation. Link posts without starter comments may be temporarily or permanently removed. (rule is different from /r/Medicine)

-

No requests for professional advice or general medical information: You may not solicit medical advice or share personal health anecdotes about yourself, family, acquaintances, or celebrities, seek comments on care provided by other clinicians, discuss billing disputes, or otherwise seek a professional opinion from members of the community. General queries about medical conditions, prognosis, drugs, or other medical topics from the lay public are not allowed.

-

No promotions, advertisements, surveys, or petitions: Surveys (formal or informal) and polls are not allowed on this community. You may not use the community to promote your website, channel, community, or product. Market research is not allowed. Petitions are not allowed. Advertising or spam may result in a permanent ban. Prior permission is required before posting educational material you were involved in making.

-

Link to high-quality, original research whenever possible: Posts which rely on or reference scientific data (e.g. an announcement about a medical breakthrough) should link to the original research in peer-reviewed medical journals or respectable news sources as judged by the moderators. Avoid login or paywall requirements when possible. Please submit direct links to PDFs as text/self posts with the link in the text. Sensationalized titles, misrepresentation of results, or promotion of blatantly bad science may lead to removal.

-

Act professionally and decently: /c/medicine is a public forum that represents the medical community and comments should reflect this. Please keep disagreement civil and focused on issues. Trolling, abuse, and insults (either personal or aimed at a specific group) are not allowed. Do not attack other users’ flair. Keep offensive language to a minimum and do not use ethnic, sexual, or other slurs. Posts, comments, or private messages violating Reddit’s content policy will be removed and reported to site administration.

-

No personal agendas: Users who primarily post or comment on a single pet issue on this community (as judged by moderators) will be asked to broaden participation or leave. Comments from users who appear on this community only to discuss a specific political topic, medical condition, health care role, or similar single-topic issues will be removed. Comments which deviate from the topic of a thread to interject an unrelated personal opinion (e.g. politics) or steer the conversation to their pet issue will be removed.

-

Protect patient confidentiality: Posting protected health information may result in an immediate ban. Please anonymize cases and remove any patient-identifiable information. For health information arising from the United States, follow the HIPAA Privacy Rule’s De-Identification Standard.

-

No careers or homework questions: Questions relating to medical school admissions, courses or exams should be asked elsewhere. Links to medical training communities and a compilation of careers and specialty threads are available on the /r/medicine wiki. Medical career advice may be asked. (rule is different from /r/Medicine)

-

Throwaway accounts: There are currently no limits on account age or ‘karma’. (rule is different from /r/Medicine)

-

No memes or low-effort posts: Memes, image links (including social media screenshots), images of text, or other low-effort posts or comments are not allowed. Videos require a text post or starter comment that summarizes the video and provides context.

-

No Covid misinformation, conspiracy theories, or other nonsense

Moderators may act with their judgement beyond the scope of these rules to maintain the quality of the community. If your post doesn’t show up shortly after posting, make sure that it meets our posting criteria. If it does, please message a moderator with a link to your post and explanation. You are free to message the moderation team for a second opinion on moderator actions.

0 00 Topics0 Posts -

0 Topics0 Posts

-

Open Source

All about open source! Feel free to ask questions, and share news, and interesting stuff!

Useful Links

- Open Source Initiative

- Free Software Foundation

- Electronic Frontier Foundation

- Software Freedom Conservancy

- It’s FOSS

- Android FOSS Apps Megathread

Rules

- Posts must be relevant to the open source ideology

- No NSFW content

- No hate speech, bigotry, etc

Related Communities

Community icon from opensource.org, but we are not affiliated with them.

363 Topics5k Posts -

Wizards

Post wizards

No ai art

Nonaffiliated links to more wizards

15 Topics151 Posts -

And Finally...

A place for odd or quirky world news stories.

Elsewhere in the Fediverse:

- [email protected]

- [email protected]

- [email protected]

- [email protected]

- [email protected]

- [email protected]

- [email protected]

- [email protected]

- [email protected]

- [email protected]

- [email protected]

Rules:

- Be excellent to each other

- The Internet will resurface old “And finally…” material. Just mark it [VINTAGE]

48 Topics234 Posts -

Ask Lemmy

A Fediverse community for open-ended, thought provoking questions

Rules: (interactive)

1) Be nice and have fun

Doxxing, trolling, sealioning, racism, and toxicity are not welcomed in AskLemmy. Remember what your mother said: if you can’t say something nice, don’t say anything at all. In addition, the site-wide Lemmy.world terms of service also apply here. Please familiarize yourself with them.

2) All posts must end with a '?'

This is sort of like Jeopardy. Please phrase all post titles in the form of a proper question ending with ?

3) No spam

Please do not flood the community with nonsense. Actual suspected spammers will be banned on site. No astroturfing.

4) NSFW is okay, within reason

Just remember to tag posts with either a content warning or a [NSFW] tag. Overtly sexual posts are not allowed, please direct them to either [email protected] or [email protected]. NSFW comments should be restricted to posts tagged [NSFW].

5) This is not a support community.

It is not a place for ‘how do I?’ type questions. If you have any questions regarding the site itself or would like to report a community, please direct them to Lemmy.world Support or email [email protected]. For other questions check our partnered communities list, or use the search function.

6) No US Politics.

Please don’t post about current US Politics. If you need to do this, try [email protected] or [email protected]

Reminder: The terms of service apply here too.

Partnered Communities:

Logo design credit goes to: tubbadu

4k Topics80k Posts -

Chevron 7

Chevron 7

A community for sharing humor about Stargate in all its iterations.

Rules:

- Follow the Lemmy.World Terms of Service. This includes (but is not limited to):

- Lemmy.World is not a place for you to attack other people or groups of people.

- Always be respectful of the privacy of others who access and use the website.

- Links to copyright infringing content are not allowed

- Stay on topic. Posts must be directly related to Stargate, be it a meme, joke, screenshot, discussion prompt, etc.

- Be good, don’t be bad. You’re an adult, or close enough, I trust you know how to act around people.

For more general Stargate content, visit [email protected]

20 Topics127 Posts -

-

0 Topics0 Posts

-

Formula 1

Welcome to Formula1 @ lemmy.world, Lemmy’s largest community for Formula 1 and related racing series

📆 F1 Calendar

🏁 FIA Documents

📊 F1 Pace

2025 Calendar

| Location | Date |
| --- | --- |
| 🇺🇸 United States | 17-19 Oct |
| 🇲🇽 Mexico | 24-26 Oct |
| 🇧🇷 Brazil | 07-09 Nov |
| 🇺🇸 United States | 20-22 Nov |
| 🇶🇦 Qatar | 28-30 Nov |
| 🇦🇪 Abu Dhabi | 05-07 Dec |

Rules

- Be respectful to everyone: drivers, lemmings etc

- No gambling, crypto or NFTs

- Spoilers are allowed

- Non-English articles should include a translation in the comments by deepl.com or similar

- Paywalled articles should include at least a brief summary in the comments, the wording of the article should not be altered

- Social media posts should be posted as screenshots with a link for those who want to view it

- Memes are allowed on Monday only as we all do like a laugh or 2, but don’t want to become formuladank.

- No duplicate posts, or posts of different news companies that say the same thing.

544 Topics3k Posts -

Futurama

For all things Futurama

Rule 1: Don’t be a jerkwad!

Rule 2: Alternate video links to be linked in a comment, below the original video.

Related Communities

0 00 Topics0 Posts -

Maga.Place Announcements, Questions and Answers

A community dedicated to this website. A place for questions, answers and website related announcements.

0 00 Topics0 Posts -

movies

A community about movies and cinema.

Related communities:

- [email protected]

- [email protected]

- [email protected]

- [email protected]

Rules

- Be civil

- No discrimination or prejudice of any kind

- Do not spam

- Stay on topic

- These rules will evolve as this community grows

No posts or comments will be removed without an explanation from mods.

940 Topics6k Posts -

Nintendo

A community for everything Nintendo. Games, news, discussions, stories etc.

Rules:

- Stay on topic.

- No NSFW content.

- No hate speech or personal attacks.

- No ads / spamming / trolling / self-promotion / low effort posts / memes etc.

- No linking to, or sharing information about, hacks, ROMs or any illegal content. And no piracy talk. (Linking to emulators, or general mention / discussion of emulation topics is fine.)

- No console wars or PC elitism.

- Be a decent human (or a bot, we don’t discriminate against bots… except in Point 8).

- All bots must have mod permission prior to implementation and must follow instance-wide rules. For lemmy.world bot rules click here

- Links to Twitter, X, or any alternative version such as Nitter, Xitter, Xcancel, etc. are no longer allowed. This includes any “connected-but-separate” web services such as pbs. twimg. com. The only exception will be screenshots in the event that the news cannot be sourced elsewhere.

Upcoming First Party Games (NA):

| Game | Date |
| --- | --- |
| Pokémon Z-A | Oct 16 |
| Hyrule Warriors: Age of Imprisonment [S2] | Nov 6 |
| Kirby Air Riders [S2] | Nov 20 |
| Metroid Prime 4 | Dec 4 |
| Mario Tennis Fever [S2] | Feb 12 |
| Fire Emblem: Fortune’s Weave [S2] | 2026 |
| Pokémon Pokopia | 2026 |
| Super Mario Bros. Wonder [S2] | 2026 |
| Rhythm Heaven: Groove | 2026 |
| The Duskbloods [S2] | 2026 |
| Tomodachi Life: Living the Dream | 2026 |
| Yoshi and the Mysterious Book | 2026 |
| Splatoon Raiders [S2] | TBA |
| Pokémon Champions | TBA |

[S2] means Switch 2 only.

Other Gaming Communities

433 Topics3k Posts -

Photography

c/photography is a community centered on the practice of amateur and professional photography. You can come here to discuss the gear, the technique and the culture related to the art of photography. You can also share your work, appreciate the work of others, and constructively critique each other’s work.

Please, be sure to read the rules before posting.

THE RULES

- Be nice to each other

This Lemmy Community is open to civil, friendly discussion about our common interest, photography. Excessively rude, mean, unfriendly, or hostile conduct is not permitted.

- Keep content on topic

All discussion threads must be photography related, such as the latest gear or art news, gear acquisition advice, photography-related questions, etc.

- No politics or religion

This Lemmy Community is about photography and discussion around photography, not religion or politics.

- No classified ads or job offers

All is in the title. This is a casual discussion community.

- No spam or self-promotion

One photo per post, with a limit of 3 pictures in a 24-hour timespan. Do not flood the community with your pictures. Be patient, select your best work, and enjoy.

-

If you want constructive critiques, use [Critique Wanted] in your title.

-

Flair NSFW posts (nudity, gore, …)

-

Do not share your portfolio (instagram, flickr, or else…)

The aim of this community is to invite everyone to discuss your photography. If you drop everything with one link, this becomes pointless. Portfolio posts will be deleted. You can, however, share your portfolio link in the comment section if another member wants to see more of your work.

65 Topics207 Posts -

0 Topics0 Posts

-

Steam Deck

A place to discuss and support all things Steam Deck.

Replacement for r/steamdeck_linux.

As Lemmy doesn’t have flairs yet, you can use these prefixes to indicate what type of post you have made, eg:

[Flair] My post title

The following is a list of suggested flairs:

[Discussion] - General discussion.

[Help] - A request for help or support.

[News] - News about the deck.

[PSA] - Sharing important information.

[Game] - News / info about a game on the deck.

[Update] - An update to a previous post.

[Meta] - Discussion about this community.

Some more Steam Deck specific flairs:

[Boot Screen] - Custom boot screens/videos.

[Selling] - If you are selling your deck.

These are not enforced, but they are encouraged.

Rules:

- Follow the rules of Sopuli

- Posts must be related to the Steam Deck in an obvious way.

- No piracy, there are other communities for that.

- Discussion of emulators is allowed, but no discussion on how to illegally acquire ROMs.

- This is a place of civil discussion, no trolling.

- Have fun.

276 Topics4k Posts -

Technology

Which posts fit here?

Anything that is at least tangentially connected to technology, social media platforms, information technologies, and tech policy.

Post guidelines

[Opinion] prefix

Opinion (op-ed) articles must use [Opinion] prefix before the title.

Rules

1. English only

Title and associated content has to be in English.

2. Use original link

Post URL should be the original link to the article (even if paywalled) and archived copies left in the body. It allows avoiding duplicate posts when cross-posting.

3. Respectful communication

All communication has to be respectful of differing opinions, viewpoints, and experiences.

4. Inclusivity

Everyone is welcome here regardless of age, body size, visible or invisible disability, ethnicity, sex characteristics, gender identity and expression, education, socio-economic status, nationality, personal appearance, race, caste, color, religion, or sexual identity and orientation.

5. Ad hominem attacks

Any kind of personal attacks are expressly forbidden. If you can’t argue your position without attacking a person’s character, you already lost the argument.

6. Off-topic tangents

Stay on topic. Keep it relevant.

7. Instance rules may apply

If something is not covered by the community rules but is against the lemmy.zip instance rules, those rules will be enforced.

Companion communities

[email protected]

[email protected]

Icon attribution | Banner attribution

If someone is interested in moderating this community, message @[email protected].

2k Topics9k Posts -

THE_PACK

DO

- BE A BADASS

- POST BOMB ASS MEMES

- CRANK THAT HOG BABY

DON’T YOU FUCKING DARE

- ASK PEOPLE TO STOP CURSING

- SAY THINGS THAT A KID WOULD SAY THIS IS A SUB FOR FUCKEN ADULTS!!1!1! (ALSO GO TO C/AROOOOOOO IF YOU WANNA MAKE JOKES ABOUT GETTING IN TROUBLE WITH PARENTS OR WHOSE TURN IT IS ON THE XBOX OR KID SHIT LIKE THAT - THAT’LL GET U BANED HERE!!1!)!1!+!

- BE A MOMMA’S BOY

- BE A GODDAMN CRYBABY

- SUBMIT A MEME YOU DIDN’T MAKE

- GET ALL POLITICAL AND SHIT

- TELL SOMEONE TO "SPEAK UP"

- CENSOR THE FUCKING PACK

WE GOT A DISCORD, GET IN HERE MFER: discord.gg/thepack HEED THESE WORDS

Could not be further from the truth. I am simply here to spread the word of anime to the less fortunate. I think astrophysics and anime go hand in hand, like a sword and shield. Like man and woman, as G-d Himself intended for all of us when he went on that drunken bender 6000 years ago today. You don’t think that fucker wasn’t watching hentai? We’re like his 2D, you ever think of that? Like, he’s in something more than 3D, so we’re like his 2D, like I just fucking said!! And he could still get it up and bust all kind of holy nuts up in them virgin guts on St. Patrick’s Day way back when. That’s like jerkin’ it to Yoko Littner. Am I really so different, G-d!?! Why the fuck have you forsaken me? You know he didn’t even pay child support for Jesus, not even once? Fuckin’ piece of shit. Informer You know say Daddy Snow me, I’m gonna blame A licky boom-boom down 'Tective man says Daddy Snow stabbed someone down the lane A licky boom-boom down InformerI disagree with that in general. ( ͡° ͜ʖ ͡°)ಠ_ಠLegislated mandates in sentencing can create some awful tyrannies. I think we need a human dimension to sentencing because I believe that leads, overall, to more humane and constructive corrective value. As long as there is some oversight watching out for unreasonable abusers and a channel to deal with tha t, then I think we are better off in the long run. The law can’t anticipate or solve every problem in advance and trying to design it to be that way would make it too unwieldy, burdensome, and in some cases potentially wildly inhumane. Three strikes laws are examples of such failure in the past. 
You know say Daddy Snow me, I’m gonna blame A licky boom-boom down 'Tective man says Daddy Snow stabbed someone down the lane A licky boom-boom down Police-a them-a they come and-a they blow down me door One him come crawl through through my window So they put me in the back of the car at the station From that point on I reach my destination Now the destination reached was the East Detention Where they whipped down my pants and looked up my bottom [CHORUS] The bigger they are they think they have more power They’re on the phone me say that on hour Me for want to use it once to call my lover Lover who I’m gonna call is the one Tammy I love her from my heart down to my belly Yes Daddy Snow, I’m the coolest daddy The one MC Shan and the one that is Snow Together we are like a tornado [CHORUS] Listen for me ya better listen for me now Listen for me ya better listen for me now When I rock the microphonAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHe, I rock it steady Yes sir Daddy Snow me are the Article Don When I’m at a danceANIME IS KING OF KINGS they say, “Where you come from?” People then say I ce from Jamaica But INow and Then, Here and There Stupid gung-ho kid gets thrown into an absolutely brutal world. You think he’s going to solve everything, but it gets so bad. It’s good, but it suffers from some mediocre tricks of convenience that are really annoying. 
It’s pretty unique, except for the pretty predictable ending. However, the last episode ties things together well enough that I can forgive it for that. A solid 7 or so.‘m born and raised in the ghetto That’s all I want you to know Purblack people man that’s all I man know My shoes used to tear up and my toes used to show Where I’m born is the o Toronto, so [CHORUS] Come with a nice young lady Intelligent, yes she’s gentle and irie Everywhere I anyway, here’s the verdict on RIN ~Daughters of Mnemosyne~ Eh. Each episode is kind of dumb and strings you along with info you couldn’t possibly know. Lots of exposition. The only reason I’m saying eh is the ending ties stuff together decently and is emotionally effective. I also liked how almost every episode had a large time jump. There’s also a cool implementation of how the internet could be in the future. It just sucked. Perhaps 4th worst show I’ve ever seen. Thank G-d it was short.go I’ve never left here at all Yes, me Snow roam the dance Roam the dance in every nation You’d never know, me Daddy Snow I am the Boom Shakata I’ll never lay down flat in one cardboard box Yes, me Daddy Snow I’m gonna reach to the top, so [CHORUS] Why would he? [repeat] [MC Shan:] Me sitting round cool with my dibby dibby girl Police kDetroit Metal City Some say this is There are a couple viable explanations that I have mused about. The first one is the most obvious… Namely that humanity survived into the future without help the first time through… Perhaps just barely. Perhaps only after great loss and long eras of suffering. In this scenario there is no paradox. Why would the future survivors care to reach back in time and aid their struggling ancestors? These humans were apparently masters of time. Maybe from their perspectPeople seem to be overlooking the deviations from the biblical story as sort of random ignorance on the part of the filmakers. 
But I think these deviations are coherently intentional … Scott’s take on this film seems to have been to cast the plague miracles pretty much as if they were coincidental natural events. And Moses’ conversations with God were hallucinatory episodes triggered by a head injury. These hard to believe events culminated in a rebellion by the Hebrews and provoked the one remarkable event that is hard to deny historically: namely, Ramses allowing the Hebrews to leave Egypt. Perhaps Ridley Scott is saying, in essence, "Eventually the story of the Exodus got turned into a miraculous mythology. But here, dear viewer, is a plausible rendering of how it all may have really happened."ive of being able to see through all of time, everyone to them is in the “present” (as was apparent in the tesseract construct). Perhaps they chose to reach back in time to render aid to fellow humans out of compassion, to save a lot of lives – much as we might render aid to less fortunate people today.the Metalocalypse of anime. It’s not. They go in the opposite direction. DMC is about a kid moving to Toky who wanted to be an indie pop act, but for some unexplained reason wound up in a death metal band that he hates. And the comedy comes from him stumbling into situations that are way over his head. He’s touted as a metal god who mostly lives up to the reputation by accident. It’s sometimes really funny. It was obviously cheaplyYour accusations here are way overblown. Your assertion that there is some sort of quality judgement about one music style over another (akin to racism as you imply above) is just not there. She simply chose a music style that she knew that he did not enjoy. That was the point of the message. You should not force other people to listen to your music because they might not enjoy doing so. Comparing this to Guantanamo torture is just way off base. “Gross overstep”? Hardly! Finally, there’s no way he would have to sit and listen to the music for 20 hours straight. 
He was probably given several sessions to attend… probably something like 5 days in a row for 4 hours each. You just need to wind down a bit and take a look at this again in a couple days. Maybe you’re going through finals and are really stressed out or something? made, because they only animate the part of the screen where things are happening. Sometimes, most of the screen is totally black. And each episode is only ~14 minutes, including a 1.5 minute OP, and most episodes are split into two stories. So the pace is blinding. It doesn’t have a real ending. He defeats the king of American death metal, inherits his true kvlt guitar, and then plays his silly indie songs with it on the street. There is no resolution with his potential love interest and he never reveals his identity to anyone, which is a bit anti-climacticiciciciciciciciciciciciciciciciicicicichad;gh;lafhg;lahglafhglahjl gaoretuaop t 747 4947nock my door, lick up my pal Rough me up and I can’t do a thing Pick up my line when my telephone ring Take me to the station, black up my hands Trail me down 'cause I’m hanging with the Snowman What am I gonna do, I’m black and I’m trapped Smack me in my fEliminating money would not eliminate any moral problems in human nature but it sure would hold us down from developing prosperous societies. Remember how sucky and tribalistic life was back in the dark ages?ace, took all of my gap They have no clues and they wanna get warmer But Shan won’t turn informer [CHORUS]

20 Topics122 Posts -

Threaded

This community is for the Fediverse clients Threaded (microblogging) and Coli (Kbin/Lemmy). In this space, community members will be kept up to date and can discuss the apps, request features, and give feedback.

0 Topics0 Posts -

ADHD memes

ADHD Memes

The lighter side of ADHD

Rules

Other ND communities

- [email protected] - Generic discussion

- [email protected]

- [email protected]

- !autisticandadhd

- [email protected]

509 Topics9k Posts -

Cocktails, the libationary art!

A place for conversation about cocktails, ingredients, home mixology, the bar industry or liquor industry, glassware - this is not an exhaustive list. If you think it’s in some way related to cocktails it’s probably fine.

If you post something you didn’t create give credit whenever possible.

Pictures and recipes are encouraged when posting a drink as a standalone post. Example of an ideal drink post:

We love garnishes.

Remember the code of conduct, keep it nice. In terms of cocktail-specific etiquette that might be different from other communities:

Mentioning your blog, insta, website, book or bar is allowed, yes. For now at least, we do allow self-promotion. If it gets out of hand this might change.

A good post with a drink you don’t like is still a good post! Try not to conflate the drink and the post or poster. If someone has a relevant title, gorgeous photo and clearly formatted recipe of what you consider a truly terrible drink, a comment is more appropriate than a downvote.

On that topic: Polite critiques/reviews of drinks (or posts, images, etc.) are allowed here. Encouraged, even. It’s a good tool for improving your drinks and content. Really, just be nice.

57 Topics251 Posts -

Fediverse

A community to talk about the Fediverse and all its related services using ActivityPub (Mastodon, Lemmy, KBin, etc).

If you want help moderating your own community, head over to [email protected]!

Rules

- Posts must be on topic.

- Be respectful of others.

- Cite the sources used for graphs and other statistics.

- Follow the general Lemmy.world rules.

Learn more at these websites: Join The Fediverse Wiki, Fediverse.info, Wikipedia Page, The Federation Info (Stats), FediDB (Stats), Sub Rehab (Reddit Migration)

996 Topics20k Posts -

Fediverso - social federati indipendenti

🚫🤖 Welcome to the Fediverse section of Diggita

This is the space dedicated to the Fediverse, the free, decentralized, federated alternative to Big Tech platforms. A place to discover, use, and spread tools such as Mastodon, Pixelfed, PeerTube, and many other ethical solutions that put the person, not profit, at the center.

Using Facebook, Twitch, YouTube, Instagram, and the like turns you into a kind of court jester: you entertain the king (the platform) hoping for a few scraps of attention, retweets, or likes, and you always risk being cast aside if you are not “entertaining” enough or useful to their goals. It is like being at the mercy of a virtual court, where your voice gets through only if it is deemed welcome.

The Fediverse is the concrete answer to a web that has become toxic and closed:

- ❌ No manipulative algorithms

- ❌ No invasive advertising

- ❌ No surveillance or tracking of your habits.

🌍 An open network for a better web

The greatest revolution of the Fediverse is its open, interoperable nature: anyone, from any compatible platform, can follow us, interact, and take part. It is not a walled garden but an ecosystem designed to unite, not divide.

The true Fediverse creates connections, not bubbles. It is a collective reclaiming of the internet, free and not controlled by a few.

✊ We are here to build an alternative

In an age of concentration and control, using the Fediverse is a political act. It means choosing a different model of communication, founded on transparency, solidarity, and digital autonomy.

🔗 Follow us on:

- Mastodon – daily updates from the world of the Fediverse

- Telegram – news, guides, and tips

- Newsletter – an ethical digest, with no trackers or advertising

cover image CC BY-SA 4.0 by @[email protected]

📚 Freedom to Share

All content on Diggita.com is published under a Creative Commons Attribution license (CC BY 4.0)

0 Topics0 Posts -

Funny

General rules:

- Be kind.

- All posts must make an attempt to be funny.

- Obey the general sh.itjust.works instance rules.

- No politics or political figures. There are plenty of other politics communities to choose from.

- Don’t post anything grotesque or potentially illegal. Examples include pornography, gore, animal cruelty, inappropriate jokes involving kids, etc.

Exceptions may be made at the discretion of the mods.

737 Topics13k Posts -

Games

Video game news-oriented community. No, NanoUFO is not a bot :)

Posts.

- News-oriented content (general reviews, previews or retrospectives allowed).

- Broad discussion posts (preferably not only about a specific game).

- No humor/memes etc…

- No affiliate links

- No advertising.

- No clickbait, editorialized, sensational titles. State the game in question in the title. No all caps.

- No self promotion.

- No duplicate posts. The newer post will be deleted unless it has more discussion.

- No politics.

Comments.

- No personal attacks.

- Obey instance rules.

- No low-effort comments (one or two words, emoji, etc…)

- Please use spoiler tags for spoilers.

My goal is just to have a community where people can go and see what new game news is out for the day and comment on it.

Other communities:

615 Topics7k Posts -

Just horsing around 🐴

Just a page for surreal or any other memes featuring horses! About rules: just don’t be an asshole, and respect people regardless of their sexuality, race, gender, religion, worldview or anything else. And no illegal content.

0 Topics0 Posts -

lennybird

For

Posts of mine, usually political in nature. Half for my own sake to keep track, half for the musings of others.

This sub is a bit more editorially driven, where I feel more free to speak my mind, and it often pertains to topical content within current events. I try my best to put forth sound arguments with citations, but this sub will project activism more so than my other sub.

/c/cgtcivics (Citizen’s Guide to Civics) has the broader goal of curating objective, timeless information on civics, politics, and news for newcomers and oldies alike. It aims to foster critical-thinking skills and help you digest the enormous amount of information from news sources, social media, and discussions, giving you a stable foundation, rather than a house of cards, from which to view the world around you.

Software Engineer by trade, writer at heart.

Thanks for stopping by.

0 Topics0 Posts -

Linux Gaming

Gaming on the GNU/Linux operating system.

Recommended news sources:

Related chat:

Related Communities:

Please be nice to other members. Anyone not being nice will be banned. Keep it fun, respectful and just be awesome to each other.

59 Topics384 Posts -

48 Topics286 Posts

-

0 Topics0 Posts

-

55 Topics629 Posts

-

Wikipedia

A place to share interesting articles from Wikipedia.

Rules:

- Only links to Wikipedia permitted

- Please stick to the format "Article Title (other descriptive text/editorialization)"

- Tick the NSFW box for submissions with inappropriate thumbnails

- On Casual Tuesdays, we allow submissions from wikis other than Wikipedia.

Recommended:

- If possible, when submitting please delete the “m.” from “en.m.wikipedia.org”. This will ensure people clicking from desktop will get the full Wikipedia website.

- Use the search box to see if someone has previously submitted an article. Some apps will also notify you if you are resubmitting an article previously shared on Lemmy.

896 Topics3k Posts -

cyberdelia

A place to discuss the 1995 film Hackers and the culture and music surrounding it.

Rules:

- No bots

- No boners

- No names

Hack the planet!

0 Topics0 Posts -

Floating Is Fun

Banners by ke-ta and by neko

We're chasing that dream-like feeling of floating free in the air and playing around, be it real or fantasy. Any post type that conveys this feeling is welcome. Check the post flairs for examples.

What qualifies for Floating Is Fun is open to interpretation. I encourage everyone to post what they like to see. Give us a gentle push and we'll float pretty far.

We started on Reddit /r/FloatingIsFun in 2021 and moved here in 2023. All 400+ posts from the original subreddit have been reposted here, so you're not missing anything.

Filter by Floating Type

#balloons #bubbles #windpower #levitation #weightlessness #microgravity #airswimming #underwater #illusion #multipletypes

Read the old wiki for tag descriptions

Floaty Friends

🐭 !touhou

🪂 !paramotor

🧙🏼♀️ !imaginarywitches

🏞️ !imaginary

🧑🤝🧑 !skychildrenoflight

🐇 !ghibli