Why I am not impressed by A.I.

-

This post did not contain any content.

-

T [email protected] shared this topic

-

[email protected] replied to [email protected] last edited by

Well it is not wrong, I believe it just gets bored and tries to fail the Turing test for fun.

-

[email protected] replied to [email protected] last edited by

Because you're using it wrong. It's good for generative text and chains of thought, not symbolic calculations, including math or linguistics.

-

[email protected] replied to [email protected] last edited by

It's predictive text on speed. The LLMs currently in vogue hardly qualify as A.I. tbh..

-

[email protected] replied to [email protected] last edited by

Give me an example of how you use it.

-

[email protected] replied to [email protected] last edited by

It is wrong. Strawberry has 3 r's.

-

[email protected] replied to [email protected] last edited by

It's like someone who has no formal education but has a high level of confidence and eavesdrops on a lot of random conversations.

-

[email protected] replied to [email protected] last edited by

there are two 'r's in 'strawbery'

-

[email protected] replied to [email protected] last edited by

I mean, that's how I would think about it...

-

[email protected] replied to [email protected] last edited by

Writing customer/company-wide emails is a good example. "Make this sound better: we're aware of the outage at Site A, we are working as quick as possible to get things back online"

Another is feeding it an article and asking for a summary; https://hackingne.ws does that for its Bsky posts.

Coding is another good example, "write me a Python script that moves all files in /mydir to /newdir"
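For what it's worth, a script for that prompt might look roughly like the sketch below. This is an illustration, not the output of any particular model. The function name `move_all_files` is made up, and skipping subdirectories is my own reading of "all files".

```python
import shutil
from pathlib import Path


def move_all_files(src_dir, dst_dir):
    """Move every regular file directly inside src_dir into dst_dir.

    Returns the names of the files that were moved, sorted alphabetically.
    Subdirectories are left alone (an assumption about what the prompt means).
    """
    src = Path(src_dir)
    dst = Path(dst_dir)
    dst.mkdir(parents=True, exist_ok=True)  # create the target if it is missing

    moved = []
    for entry in sorted(src.iterdir()):
        if entry.is_file():
            shutil.move(str(entry), str(dst / entry.name))
            moved.append(entry.name)
    return moved
```

Calling `move_all_files("/mydir", "/newdir")` would match the prompt. Whatever the model hands back, it is worth trying on a scratch directory first.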

Asking for it to summarize a theory, "explain to me why RIP was replaced with RIPv2, and what problems people have had since with RIPv2"

-

[email protected] replied to [email protected] last edited by

One thing I find useful is turning installation/setup instructions into Ansible roles and tasks. If you're unfamiliar, Ansible is a tool for automated configuration of large-scale server infrastructure.

In my case I only manage two servers, but it is useful to parse instructions and convert them to Ansible, which helps me learn and understand Ansible at the same time. Here is an example of instructions which I find interesting, how to set up Docker for Alpine Linux:

https://wiki.alpinelinux.org/wiki/Docker

Results are actually quite good even for smaller 14B self-hosted models like the distilled versions of DeepSeek, though I'm sure there are other usable models too.

I also find it helpful as a programming assistant, both for getting things done and for learning.

I would not rely on it for factual information, but it usually does a decent job of pointing in the right direction. Another use I have is help with spell-checking in a foreign language.

-

[email protected] replied to [email protected] last edited by

I think the problem is that the way LLMs are trained means they pick up common parlance. Often, if you say something has two of a letter in it, the meaning can be two consecutive letters. But I take your point, I did fail that test.

-

[email protected] replied to [email protected] last edited by

"You're holding it wrong"

-

[email protected] replied to [email protected] last edited by

Uh, no, that is not common parlance. If any human tells you that strawberry has two r's, they are also wrong.

-

[email protected]replied to [email protected] last edited by

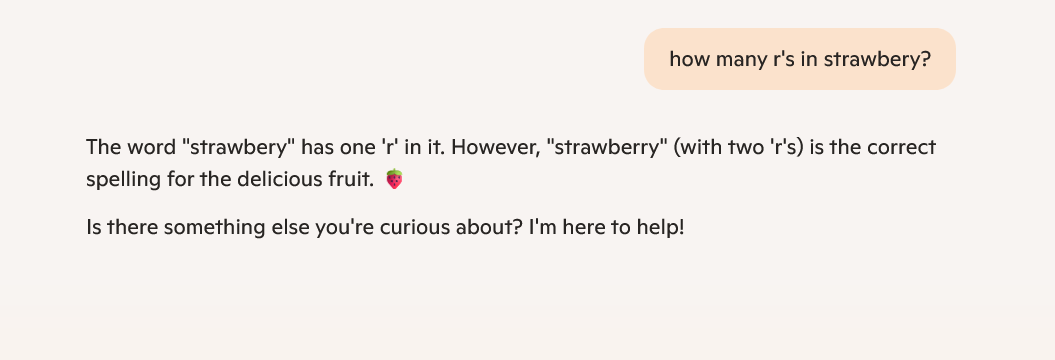

The terrifying thing is everyone criticising the LLM as being poor, however it excelled at the task.

The question asked was how many R in strawbery and it answered. 2.

It also detected the typo and offered the correct spelling.

What’s the issue I’m missing?

-

[email protected] replied to [email protected] last edited by

Ask it for a second opinion on medical conditions.

Sounds insane, but they are leaps and bounds better than blindly Googling and self-prescribing for every condition there is under the sun when the symptoms only vaguely match.

Once the LLM helps you narrow in on a couple of possible conditions based on the symptoms, then you can dig deeper into those specific ones, learn more about them, and have a slightly more informed conversation with your medical practitioner.

They’re not a replacement for your actual doctor, but they can help you learn and have better discussions with your actual doctor.

-

[email protected] replied to [email protected] last edited by

The issue that you are missing is that the AI answered that there is 1 'r' in 'strawbery' even though there are 2 'r's in the misspelled word. And the AI corrected the user with the correct spelling of the word 'strawberry' only to tell the user that there are 2 'r's in that word even though there are 3.
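For reference, both counts are easy to check deterministically. A minimal Python sketch (the helper name `count_letter` is just made up for illustration):

```python
def count_letter(word, letter):
    """Count case-insensitive occurrences of a single letter in a word."""
    return word.lower().count(letter.lower())


for word in ("strawbery", "strawberry"):
    print(word, count_letter(word, "r"))
# prints:
# strawbery 2
# strawberry 3
```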

-

[email protected] replied to [email protected] last edited by

"Make this sound better: we’re aware of the outage at Site A, we are working as quick as possible to get things back online"

How does this work in practice? I suspect you're just going to get an email that takes longer for everyone to read, and doesn't give any more information (or worse, gives incorrect information). Your prompt seems like what you should be sending in the email.

If the model (or context?) was good enough to actually add useful, accurate information, then maybe that would be different.

I think we'll get to the point really quickly where a nice concise message like in your prompt will be appreciated more than the bloated, normalised version, which people will find insulting.

-

[email protected] replied to [email protected] last edited by

I think I have seen this exact post word for word fifty times in the last year.

-

[email protected] replied to [email protected] last edited by

This, but actually. Don't use an LLM to do things LLMs are known to not be good at. Since these are tools, the various companies would do well to list out specifically what they're not good at, so that users don't need background knowledge before even using them, not unlike needing to know that one corner of those old iPhones was an antenna and to not bridge it.