DeepSeek's AI breakthrough bypasses industry-standard CUDA, uses assembly-like PTX programming instead

-

[email protected]replied to [email protected] last edited by

It certainly does.

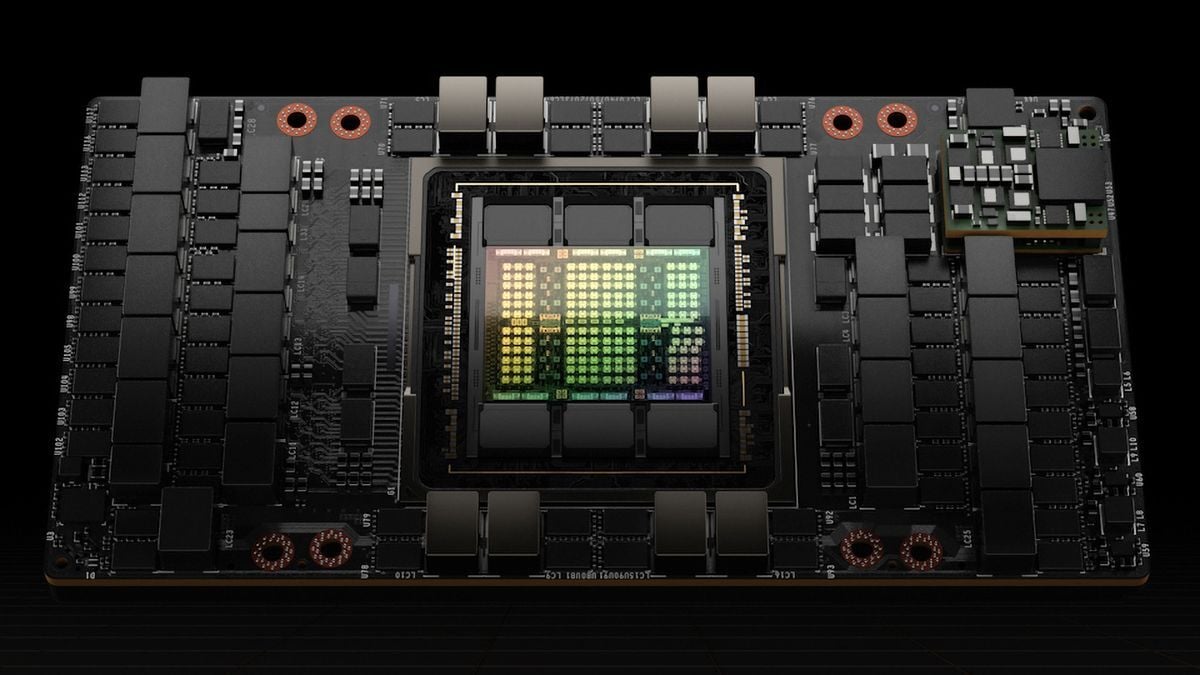

Until last week, you absolutely NEEDED an NVidia GPU equipped with CUDA to run all AI models.

Today, that is simply not true. (Watch the video at the end of this comment.)

I watched this video, and my initial reaction to this news was validated and then some: it made me even more bearish on NVDA.

-

[email protected]replied to [email protected] last edited by

This specific tech is, yes, NVIDIA-dependent. The game changer is that a team was able to beat the big players with less than 10 million dollars. They did it by operating at a low level of NVIDIA's stack, practically machine code. What this team has done, another could do. Building for the AMD GPU ISA would be tough but not impossible.

-

[email protected]replied to [email protected] last edited by

Mate, that means they are using PTX directly. If anything, they are more dependent on NVIDIA and the CUDA platform than anyone else.

-

[email protected]replied to [email protected] last edited by

you absolutely NEEDED an NVidia GPU equipped with CUDA

-

[email protected]replied to [email protected] last edited by

Ahh. Thanks for this insight.

-

[email protected]replied to [email protected] last edited by

Thanks for the corrections.

-

[email protected]replied to [email protected] last edited by

I thought everyone liked to hate on Metal.

-

[email protected]replied to [email protected] last edited by

It’s written in PTX, NVIDIA's instruction set, which is part of the CUDA ecosystem.

Hardly going to affect NVIDIA.

-

[email protected]replied to [email protected] last edited by

I thought CUDA was NVIDIA-specific too, and for a general version you had to use OpenACC or something.

-

[email protected]replied to [email protected] last edited by

CUDA is proprietary to NVIDIA, but they may be open to licensing it? I think?

-

[email protected]replied to [email protected] last edited by

The big win I see here is the amount of optimisation they achieved by moving from the high-level CUDA to lower-level PTX. This suggests that developing these models going forward can be made a lot more energy-efficient, something I hope can be extended to their execution as well. As it stands currently, "AI" (read: LLMs and image generation models) consumes way too many resources to be sustainable.

-

[email protected]replied to [email protected] last edited by

It's already happening. This article takes a long look at many of the rising threats to NVIDIA. Some highlights:

- Google has been running on their own homemade TPUs (tensor processing units) for years, and say they are on the 6th generation of those.
- Some AI researchers are building an entirely AMD-based stack from scratch, essentially writing their own drivers and utilities to make it happen.
- Cerebras.ai is creating their own AI chips using a unique whole-die system. They make an AI chip the size of an entire silicon wafer (30cm square) with 900,000 micro-cores.

So yeah, it's not just "China AI bad" but that the entire market is catching up and innovating around NVIDIA's monopoly.

-

[email protected]replied to [email protected] last edited by

Yeah I'd like to see size comparisons too. The cuda stack is massive.

-

PTX also removes NVIDIA lock-in.

-

[email protected]replied to [email protected] last edited by

Kind of the opposite actually. PTX is in essence NVIDIA-specific assembly, just like how ARM or x86_64 assembly is tied to ARM and x86_64.

At least with CUDA there are efforts like ZLUDA. CUDA is more like Objective-C was on the Mac: basically tied to the platform, but at least you could write a compiler for another target in theory.
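
To make the "NVIDIA-specific assembly" point concrete, here is a minimal sketch of what dropping down to PTX from CUDA C++ can look like. It is only an illustration, not anything from DeepSeek's code: the `add_ptx` helper and the `kernel` name are made up for this example, and the single `add.s32` instruction stands in for the far more involved hand-tuning the article describes.

```cuda
#include <cstdio>
#include <cuda_runtime.h>

// Hypothetical helper: adds two 32-bit integers with an inline PTX
// instruction instead of the C++ `+` operator.
__device__ int add_ptx(int a, int b) {
    int out;
    // "r" constrains each operand to a 32-bit integer register.
    asm volatile("add.s32 %0, %1, %2;" : "=r"(out) : "r"(a), "r"(b));
    return out;
}

// Hypothetical kernel: a single thread writes the sum to device memory.
__global__ void kernel(int *result) {
    *result = add_ptx(40, 2);
}

int main() {
    int *d_result = nullptr;
    int h_result = 0;

    cudaMalloc(&d_result, sizeof(int));
    kernel<<<1, 1>>>(d_result);
    // cudaMemcpy on the default stream waits for the kernel to finish.
    cudaMemcpy(&h_result, d_result, sizeof(int), cudaMemcpyDeviceToHost);
    cudaFree(d_result);

    printf("%d\n", h_result);
    return 0;
}
```

Built with `nvcc`, the `asm` string is passed through into the PTX that NVIDIA's toolchain then lowers to machine code for a particular GPU, which is why code like this doesn't port to AMD or Intel hardware as-is.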

-

[email protected]replied to [email protected] last edited by

Eh, even for many console games it's not optimised that much.

Check out Kaze Emanuar's (& co) rewrite of the N64's Super Mario 64 engine. He's now building an entirely new game on top of that engine, and it looks considerably better than SM64 did and runs at twice the FPS on original hardware.

But you're probably right that today it happens even less than before.

-

[email protected]replied to [email protected] last edited by

Ah, I hoped it was cross-platform, more like OpenCL. Thinking about it, a lower-level language would be more platform-specific.

-

[email protected]replied to [email protected] last edited by

That disregards the massive advancement in technology, hindsight, tooling and theory they can make use of now. There is a world of difference there even with the same hardware. So not comparable imo, it wasn't for a lack of effort on Nintendo's part.

-

[email protected]replied to [email protected] last edited by

IIRC ZLUDA does support compiling PTX. My understanding is that this is part of why Intel and AMD eventually didn't want to support it: it's not a great idea to tie yourself to someone else's architecture that you have no control over or license to.

OTOH, CUDA itself is just a set of APIs and their implementations on NVIDIA GPUs. Other companies can re-implement them. AMD has already done this with HIP.

-

[email protected]replied to [email protected] last edited by

Wtf, this is literally the opposite of true. PTX is NVIDIA-only.