OpenAI Furious DeepSeek Might Have Stolen All the Data OpenAI Stole From Us

-

[email protected]replied to [email protected] last edited by

That's why "value" is in quotes. It's not that it didn't exist, it's just that it's purely speculative.

Hell, Nvidia's stock plummeted as well, which makes no sense at all, considering DeepSeek needs the same hardware as ChatGPT.

Stock investing is just gambling on whatever is public opinion, which is notoriously difficult because people are largely dumb and irrational.

-

[email protected]replied to [email protected] last edited by

That is true, and running locally is better in that respect. My point was more that privacy was hardly ever an issue until suddenly now.

-

[email protected]replied to [email protected] last edited by

Absolutely! I was just expanding on what you said for others who come across the thread

-

[email protected]replied to [email protected] last edited by

How much for two thousands?

-

[email protected]replied to [email protected] last edited by

I'm an AI/comp-sci novice, so forgive me if this is a dumb question, but does running the program locally allow you to better control the information that it trains on? I'm a college chemistry instructor who has to write lots of curriculum, assignments and lab protocols; if I ran DeepSeek locally and fed it all my chemistry textbooks and previous syllabi and assignments, would I get better results when asking it to write a lab procedure? And could I then train it to cite specific sources when it does so?

-

[email protected]replied to [email protected] last edited by

> Hell Nvidia’s stock plummeted as well, which makes no sense at all, considering Deepseek needs the same hardware as ChatGPT.

Common wisdom said that these models need CUDA to run properly, and DeepSeek doesn't.

-

[email protected]replied to [email protected] last edited by

The EU is well on its way to becoming a group of dictators with billionaires up their asses as well.

-

[email protected]replied to [email protected] last edited by

Good that 404 are unafraid of tackling issues, but tbh I find the "hahaha" unprofessional and would dispense with the informal tone in news.

-

[email protected]replied to [email protected] last edited by

Sure, but Nvidia still makes the GPUs needed to run them. And AMD is not really competitive in the commercial GPU market.

-

[email protected]replied to [email protected] last edited by

AMD apparently has the 7900 XTX outperforming the 4090 in Deepseek.

-

[email protected]replied to [email protected] last edited by

Those aren't commercial GPUs though. These are:

-

[email protected]replied to [email protected] last edited by

I definitely understand that reaction. It does give off a whiff of unprofessionalism, but their reporting is so consistently solid that I’m willing to give them the space to be a little more human than other journalists. If it ever got in the way of their actual journalism I’d say they should quit it, but that hasn’t happened so far.

-

[email protected]replied to [email protected] last edited by

Tree fiddy 🦕

-

[email protected]replied to [email protected] last edited by

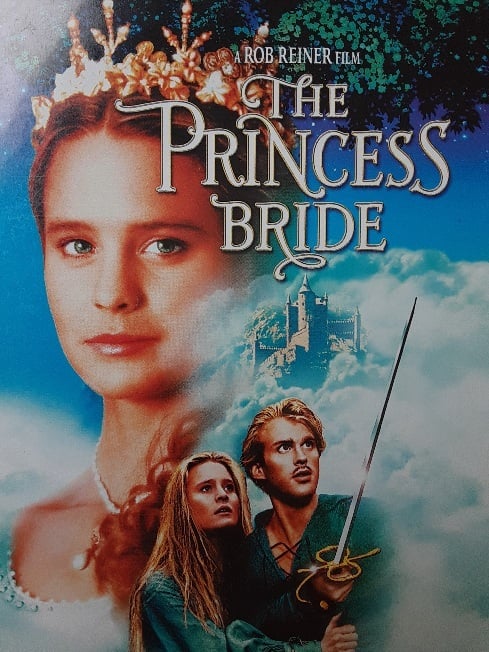

I always thought Rob Reiner had a similar sense of humor to Mel Brooks. And I liked Billy Crystal in it, it kept that section of the movie from feeling too heavy, though I get it's not everyone's thing.

For anyone who hasn't read it, the book is fantastic as well, and helped me appreciate the movie even more (it's probably one of the best film adaptations of a book ever, IMO). The humor and wit of William Goldman was captured expertly in the movie.

-

[email protected]replied to [email protected] last edited by

The battle of the plagiarism machines has begun

-

[email protected]replied to [email protected] last edited by

> Hell Nvidia's stock plummeted as well, which makes no sense at all, considering Deepseek needs the same hardware as ChatGPT.

It's the same hardware. The problem for them is that DeepSeek found a way to train its AI much more cheaply, using far fewer than the hundreds of thousands of Nvidia GPUs that OpenAI, Meta, xAI, Anthropic, etc. use.

-

[email protected]replied to [email protected] last edited by

Wasn't Zuck the cuck saying "privacy is dead" a few years ago?