DeepSeek Proves It: Open Source is the Secret to Dominating Tech Markets (and Wall Street has it wrong).

-

The model weights and research paper are open source, which is the accepted terminology nowadays.

It would be nice to have the training corpus and RLHF too.

-

A lot of other AI models can say the same, though. Facebook's is. Xitter's is. Doesn't mean I trust them for shit.

-

-

"China bad"

*sounds legit

"Sounds legit" is what one hears about FUD spread by anglophone media every time the US oligarchy is caught with their pants down.

Snowden: "US is illegally spying on everyone"

Media: Snowden is Russia spy

*Sounds legit

France: US should not unilaterally invade a country

Media: Iraq is full of WMDs

*Sounds legit

DeepSeek: Guys, distillation and mixture of experts is a way to save money and energy, here's a paper on how to do the same.

Media: China bad, DeepSeek must be cheating

*Sounds legit

-

The open paper they published details the algorithms and techniques used to train it, and it’s been replicated by researchers already.

-

This is your brain on Chinese/Russian propaganda.

-

Llama has several restrictions making it quite a bit less open than Grok or DeepSeek.

-

I don’t like this. Everything you’re saying is true, but this argument isn’t persuasive, it’s dehumanizing. Making people feel bad for disagreeing doesn’t convince them to stop disagreeing.

A more enlightened perspective might be “this might be true or it might not be, so I’m keeping an open mind and waiting for more evidence to arrive in the future.”

-

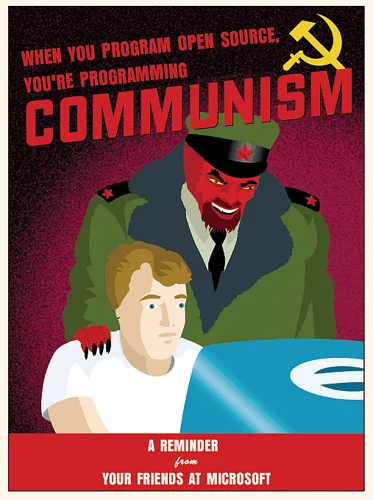

I remember this being some sort of Apple meme at some point. Hence the gum drop iMac.

-

Not the original commenter, but what they're saying stands true. The issue of "sounds legit" is the main driving force in misinformation right now.

The only way to combat it is to truly gain the knowledge yourself. Accepting things at face value has led to massive disagreements on objective information, and allowed anti-science mindsets to flourish.

Podcasts are the medium that I give the most blame to. Just because someone has a camera and a microphone, viewers believe them to be an authority on a subject, and pairing this with the "sounds legit" mindset has set back critical thinking skills for an entire population.

More people need to read Jurassic Park.

-

So are these techniques so novel and such a breakthrough? Will we now have a burst of DeepSeek-like models everywhere? Because that's what absolutely should happen if the whole story is true. I would assume there are dozens or even hundreds of companies in the USA in possession of a similar number of chips, or surely more, than the Chinese folks claimed to have trained their model on.

-

Yup. That's the internet nowadays. Full of comments like this. Can't do much about it.

-

It's just my opinion based on a few sources I saw on the web. Should I attach them as links to the comment? I guess I could. But that's extra time which I'm not sure I want to spend. Imagine a discussion where both sides provide links and sources for everything they say. Would be great? I guess? But at the same time it would be very difficult and time-consuming for both sides. Nobody does that on today's internet. Nobody ever did. Not just on the internet, actually, but in real life too. Providing evidence is generally for court talk.

-

Well, if they really are and the methodology can be replicated, we are surely about to see some crazy amount of DeepSeek competition, because imagine how many US companies in the AI and finance sectors are in possession of an even larger number of chips.

Although the question arises: if the methodology is so novel, why would these folks make it open source? Why would they share the results of years of their work with the public, losing their edge over the competition? I don't understand.

Can somebody who actually knows how to read a machine learning codebase tell us something about DeepSeek after reading their code?

-

Hugging Face already reproduced DeepSeek R1 (called Open R1) and open-sourced the entire pipeline.

-

Did they? According to their repo it's still WIP.

https://github.com/huggingface/open-r1

-

They are trying to make it accepted, but it's still contested. Unless the training data is provided, it's not really open.

-

The training corpus of these large models seems to be “the internet YOLO”. Where it’s fine for them to download every book and paper under the sun, but if a normal person does it.

Believe it or not:

-

But then, people would realize that you got copyrighted material and stuff from pirating websites...

-

Sounds legit