Why I am not impressed by A.I.

-

[email protected]replied to [email protected] last edited by

Doc: That’s an interesting name, Mr…

Fletch: Babar.

Doc: Is that with one B or two?

Fletch: One. B-A-B-A-R.

Doc: That’s two.

Fletch: Yeah, but not right next to each other, that’s what I thought you meant.

Doc: Isn’t there a children’s book about an elephant named Babar?

Fletch: Ha, ha, ha. I wouldn’t know. I don’t have any.

Doc: No children?

Fletch: No elephant books.

-

[email protected]replied to [email protected] last edited by

I have it write emails in German for me. I moved there not too long ago, and it works wonders for getting doctor’s appointments, car service, etc. I also have it explain the text, so I’m learning the language.

I also use it as an alternative to internet search, which is now terrible. It’s not going to help you find something super location-specific, but I can ask it to tell me something about a game/movie without spoilers, or list Metacritic scores in a table, etc.

It also works great in summarizing long texts.

An LLM is a tool; what matters is how you use it. It is stupid, it doesn’t think, and it’s mostly hype to call it AI. But it definitely has its benefits.

-

[email protected]replied to [email protected] last edited by

And Red Bull gives you wings.

Marketing within a capitalist market be like that for every product.

-

[email protected]replied to [email protected] last edited by

It would be like complaining that a water balloon isn't useful because it isn't accurate. LLMs are good at approximating language; numbers are too specific and have more objective answers.

-

[email protected]replied to [email protected] last edited by

Is anyone really pitching AI as being able to solve every problem though?

-

[email protected]replied to [email protected] last edited by

It can, in the same way a loom did, just for more language-y tasks. A multimodal system might be better at answering that type of question by first detecting that this is a question of fact, and that running a bucket sort algorithm on the word "strawberry" will answer the question better than its questionably obtained correlations.
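For illustration, the bucket idea can be sketched in a few lines of Python. This is only a minimal sketch, not how any real multimodal system routes questions; `letter_counts` is a hypothetical helper name, and the "buckets" here are simply one counter per letter.

```python
from collections import Counter


def letter_counts(word: str) -> Counter:
    """Drop each letter of `word` into its own bucket, ignoring case."""
    return Counter(ch for ch in word.lower() if ch.isalpha())


# One bucket per distinct letter; the "r" bucket holds the answer.
counts = letter_counts("strawberry")
print(counts["r"])
```

Unlike a learned correlation, this gives the same answer every time and works on misspellings too, since it only looks at the characters it is handed.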

-

[email protected]replied to [email protected] last edited by

It doesn't even see the word 'strawberry', it's been tokenized in a way to no longer see the 'text' that was input.

It's more like it sees a question like:

How many 'r's in 草莓?

And it spits out an answer not based on analysis of the input, but a model of what people might have said.
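A toy sketch of what "tokenized" means here, assuming a made-up three-entry vocabulary and a greedy longest-match rule. Real tokenizers learn their own pieces and IDs, so `TOY_VOCAB`, the ID numbers, and `toy_encode` are all hypothetical and chosen only to show the shape of the problem.

```python
# Hypothetical vocabulary: text pieces mapped to arbitrary integer IDs.
TOY_VOCAB = {"straw": 101, "berry": 102, "r": 7}


def toy_encode(text: str, vocab: dict = TOY_VOCAB) -> list:
    """Greedy longest-match encoding of `text` into token IDs."""
    ids = []
    i = 0
    while i < len(text):
        # Try the longest remaining slice first, then shrink it.
        for j in range(len(text), i, -1):
            piece = text[i:j]
            if piece in vocab:
                ids.append(vocab[piece])
                i = j
                break
        else:
            raise ValueError(f"no token for {text[i]!r}")
    return ids


print(toy_encode("strawberry"))  # the word becomes two opaque IDs
print(toy_encode("r"))           # the letter is a third, unrelated ID
```

In this sketch the ID for "r" never appears in the encoding of "strawberry", so nothing in the input itself says how many 'r's the word contains.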

-

[email protected]replied to [email protected] last edited by

If you want to find a few articles out of a few hundred that are about the benefits of nuclear weapons, or other controversial topics that have significant literature on them, it can help eliminate the 90% that probably aren't what you're looking for.

-

[email protected]replied to [email protected] last edited by

Yes, at some point the meme becomes the training data and the LLM doesn't need to answer because it sees the answer all over the damn place.

-

[email protected]replied to [email protected] last edited by

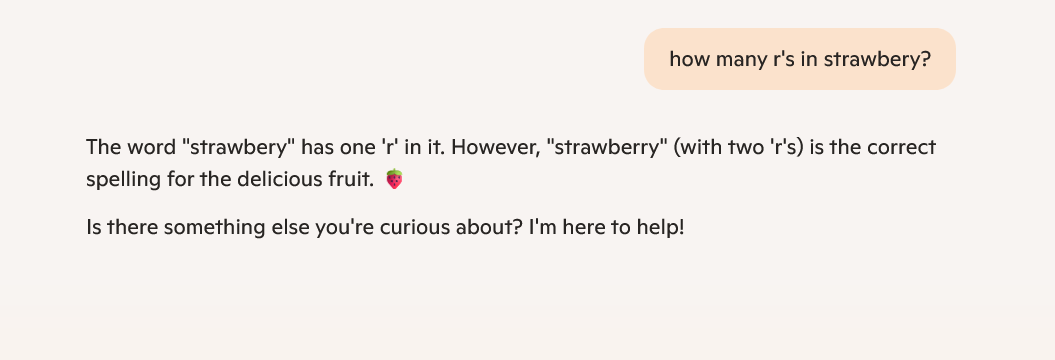

What would have been different about this if it had impressed you? It answered the literal question and also the question the user was actually trying to ask.

-

[email protected]replied to [email protected] last edited by

I really don't see the point OP is trying to make with this example though. It accurately answered their misspelled question, and also accurately answered the question they were apparently trying to ask. I don't see the problem.

-

[email protected]replied to [email protected] last edited by

Except many many experts have said this is not why it happens. It cannot count letters in the incoming words. It doesn't even know what "words" are. It has abstracted tokens by the time it's being run through the model.

It's more like you don't know the word strawberry, and instead you see:

How many 'r's in ?

And you respond with nonsense, because the relation between 'r' and

is nonsensical.

-

[email protected]replied to [email protected] last edited by

It doesn't see "strawberry" or "straw" or "berry". It's more accurate to think of it as seeing

, an abstract token representing the same concept that the training data associated with the word.

-

[email protected]replied to [email protected] last edited by

What gaps in functionality do you see being demonstrated in this example? It answered both the literal question and the intended question, recognizing the misspelling. I may be having a complete brain-fart but to me this seems like a demo of it working very well.

-

[email protected]replied to [email protected] last edited by

It didn't? StRawbeRy has 2 rs. StRawbeRRy has 3.

-

[email protected]replied to [email protected] last edited by

How many strawberries could a strawberry bury if a strawberry could bury strawberries?

-

[email protected]replied to [email protected] last edited by

OHHHHHHH.... my bad. I'm an idiot. Being an LLM it's giving the answer it thinks a human such as myself would come up with.

-

[email protected]replied to [email protected] last edited by

Works fine for me in o3-mini-high:

Counting letters in “strawberry”

Alright, I’m checking: the word “strawberry” is spelled S T R A W B E R R Y. Let me count the letters: S (1), T (2), R (3), A (4), W (5), B (6), E (7), R (8), R (9), Y (10). There are three R’s: in positions 3, 8, and 9. So, the answer is 3. Even if we ignore case, the count still holds. Therefore, there are 3 r’s in “strawberry.”
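The position-by-position count quoted above is easy to check by hand or in code. A minimal sketch, using 1-based positions to match the quote; `letter_positions` is a hypothetical helper name.

```python
def letter_positions(word: str, letter: str = "r") -> list:
    """Return the 1-based positions of `letter` in `word`, ignoring case."""
    target = letter.lower()
    return [i for i, ch in enumerate(word.lower(), start=1) if ch == target]


positions = letter_positions("strawberry")
print(positions, len(positions))
```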

-

[email protected]replied to [email protected] last edited by

Maybe you're a bot too...

-

[email protected]replied to [email protected] last edited by

Not last time I checked, but we all could be as far as you know.