Why I am not impressed by A.I.

-

Answer: you're using it wrong. /stevejobs

-

Really? AI has been marketed as being able to count the r’s in “strawberry”? Please link to this ad.

-

We have one that indexes all the wikis and GDocs and such at my work and it’s incredibly useful for answering questions like “who’s in charge of project 123?” or “what’s the latest update from team XYZ?”

I even asked it to write my weekly update for MY team once and it did a fairly good job. The one thing I thought it had hallucinated turned out to be something I just hadn’t heard yet. So it was literally ahead of me at my own job.

I get really tired of all the automatic hate over stupid bullshit like this OP. These tools have their uses. It’s very popular to shit on them, so congratulations on whatever agreeable comments your post gets. Anyway.

-

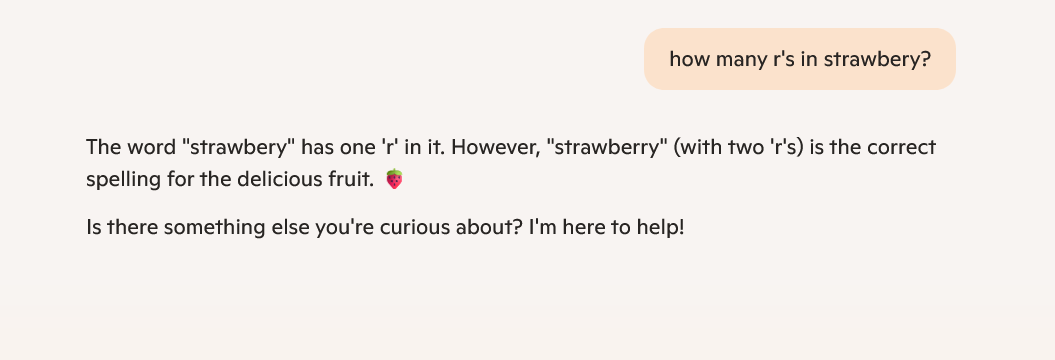

But you realize that it's wrong on both counts, right?

“Strawberry” has three Rs; the misspelling “strawbery” has two.

-

Finally! With a household's daily energy consumption, we can count how many Rs are in strawberry.

-

I think coding is one of the areas where LLMs are most useful for private individuals at this point in time.

It's not yet at the point where you just give it a prompt and it spits out flawless code.

For someone like me who is decent with computers but has little to no coding experience, it's an absolutely amazing tool and teacher.

-

"strawbery" has 2 R's in it while "strawberry" has 3.

Fucking AI can't even count.
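For the record, a trivial Python check confirms both counts. This is just a sketch; `count_letter` is a hypothetical helper written for illustration.

```python
def count_letter(word: str, letter: str = "r") -> int:
    """Return how many times `letter` appears in `word`, ignoring case."""
    return word.lower().count(letter.lower())

# Compare the correct spelling with the misspelling.
for word in ("strawberry", "strawbery"):
    print(word, count_letter(word))
```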

-

An LLM is a type of machine learning model, which is a type of artificial intelligence.

Saying LLMs aren't AI is just the AI Effect in action.

-

You're not supposed to just trust it. You're supposed to test the solution it gives you. Yes, that makes it useless for some things, but it's still immensely useful for other applications, and it often gives you a really great jumping-off point for solving whatever your problem is.

-

For reference:

AI chatbots unable to accurately summarise news, BBC finds

the BBC asked ChatGPT, Copilot, Gemini and Perplexity to summarise 100 news stories and rated each answer. […] It found 51% of all AI answers to questions about the news were judged to have significant issues of some form. […] 19% of AI answers which cited BBC content introduced factual errors, such as incorrect factual statements, numbers and dates.

It reminds me that I basically stopped using LLMs for any summarization after this exact thing happened to me. I realized that without reading the text myself, I couldn't know whether the output contained all the information or included some made-up information.