Why I am not impressed by A.I.

-

Artificial sugar is still sugar.

Because it contains sucrose, fructose or glucose? Because it metabolises the same way and matches the glycemic index of sugar?

Those are all wrong, though. What are your criteria?

-

No apologies needed. Enjoy your day and keep the good vibes up!

-

It's not good for summaries. It often gets important bits wrong, like embedded instructions that can't be summarized.

-

My experience has been very different, though I do sometimes have to add to what it summarized. The Bsky account mentioned is a good example: most of the posts are summarized very well, but every now and then there is one that isn't as accurate.

-

Skill issue

-

In this example, a sugar is something that is sweet.

Another example is artificial flavours still being a flavour.

Or artificial light still being light.

-

So for something you can't objectively evaluate?

Looking at Apple's garbage generator, LLMs aren't even good at summarising.

-

Yeah, and you know, I've always hated this: screwdrivers make really bad hammers.

-

That was this reality, very briefly. Remember AI Dungeon and the other clones that were popular before the mass ML marketing campaigns of the last two years?

-

I asked Gemini if the Quest has an SD slot. It doesn't, but Gemini said it did. Checking the source, it was pulling info from the Vive user manual.

-

You rang?

-

This was an interesting read, thanks for sharing.

-

Just don't expect them to always tell the truth, or to actually be human-like.

I think the point of the post is to call out exactly that: people preaching AI as a replacement for humans.

-

That's because it wasn't originally called AI. It was called an LLM. Techbros trying to sell it and articles wanting to fan the flames started calling it AI, and eventually that became common usage. No one in the field seriously calls it AI; they generally save that term for general AI, or at least narrow AI, and an LLM is neither.

-

Fair enough - sounds like they might not be ready for prime time though.

Oh well, at least while the bugs get ironed out we're not using them for anything important.

-

And apparently they still can't get an accurate result from such a basic query.

And yet...

https://futurism.com/openai-signs-deal-us-government-nuclear-weapon-security

-

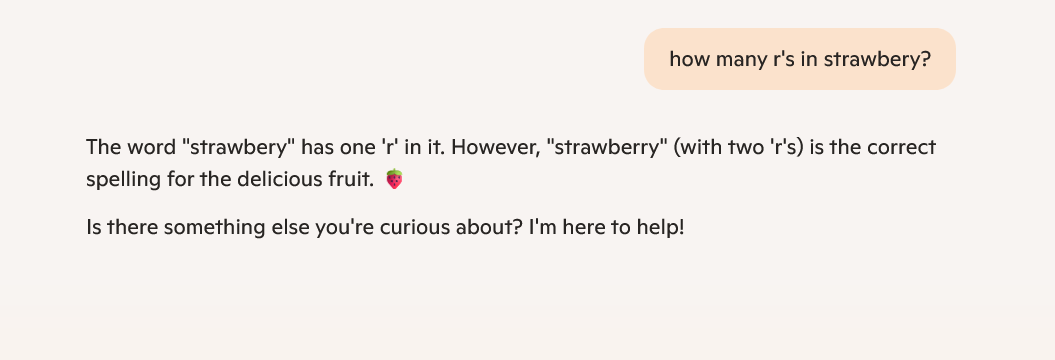

Here's my guess, aside from the highlighted token issues:

We all know LLMs train on human-generated data. And when we ask something like "how many R's" or "how many L's" are in a given word, we don't mean to count them all: we normally mean something like "how many consecutive letters are there, so I can spell it right".

Yes, the word "strawberry" has 3 R's. But what most people are interested in is whether it is "strawberry" or "strawbery", and their "how many R's" refers to this exactly, not the entire word.
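To make the two readings concrete, here is a minimal Python sketch. It assumes "count them all" means every occurrence of the letter and "spell it right" means the longest run of consecutive letters; the function names are hypothetical, and this says nothing about how an LLM actually processes the word.

```python
from itertools import groupby

def total_count(word: str, letter: str) -> int:
    """Every occurrence of `letter` anywhere in `word` (case-insensitive)."""
    return word.lower().count(letter.lower())

def longest_run(word: str, letter: str) -> int:
    """Length of the longest block of consecutive `letter`s (the "spelling" reading)."""
    runs = [len(list(group)) for ch, group in groupby(word.lower()) if ch == letter.lower()]
    return max(runs, default=0)  # 0 if the letter never appears

print(total_count("strawberry", "r"))  # 3
print(longest_run("strawberry", "r"))  # 2
```

The literal question has answer 3, while the "double R or single R" question people usually mean has answer 2.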

-

But to be fair, as people we wouldn't ask "how many Rs does strawberry have", but "with how many Rs do you spell strawberry" or "do you spell strawberry with 1 R or 2 Rs".

-

That happens when you don't understand what an LLM is, or what its use cases are.

This is like not being impressed by a calculator because it can't give you a synonym for a word.