Why I am not impressed by A.I.

-

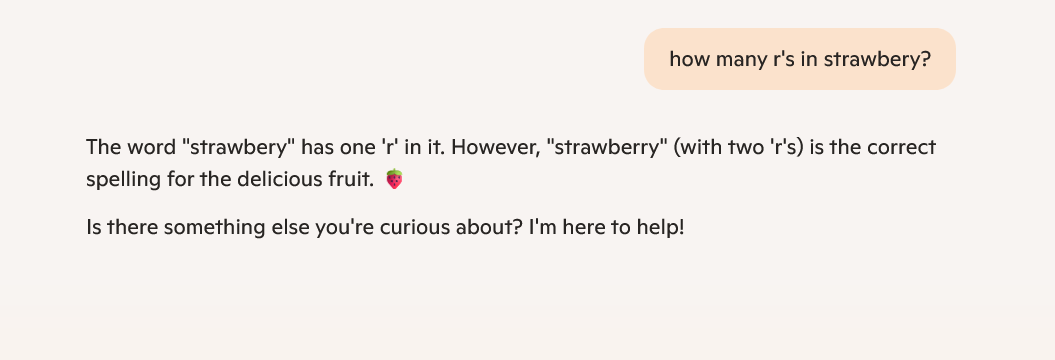

[email protected]replied to [email protected] last edited by

These models don't get single characters but rather tokens representing multiple characters. While I also don't like the "AI" hype, this image is also very one-dimensional hate and misrepresents the usefulness of these models by picking one adversarial example.
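To make the token point concrete, here is a minimal sketch. It assumes a made-up vocabulary (`TOY_VOCAB`) and a simple greedy longest-match splitter (`toy_tokenize`); both are hypothetical, and real models use learned subword vocabularies (for example byte-pair encoding) that split words differently. The only point is that the model receives a list of IDs, not individual letters.

```python
# Toy illustration only: TOY_VOCAB is a made-up vocabulary, not any real model's.
TOY_VOCAB = {
    "straw": 0, "berry": 1,
    "s": 2, "t": 3, "r": 4, "a": 5, "w": 6, "b": 7, "e": 8, "y": 9,
}


def toy_tokenize(text, vocab=TOY_VOCAB):
    """Split text into vocabulary pieces using greedy longest-match.

    This is a simplification standing in for a real subword tokenizer.
    """
    tokens = []
    i = 0
    while i < len(text):
        # Try the longest remaining piece first, then shrink it.
        for j in range(len(text), i, -1):
            piece = text[i:j]
            if piece in vocab:
                tokens.append(piece)
                i = j
                break
        else:
            raise ValueError(f"no token covers {text[i]!r}")
    return tokens


pieces = toy_tokenize("strawberry")
ids = [TOY_VOCAB[p] for p in pieces]
print(pieces)  # ['straw', 'berry']
print(ids)     # [0, 1]
```

With this toy vocabulary the word arrives as two IDs, so the letter "r" never shows up as a separate unit in the input.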

Today ChatGPT saved me a fuckton of time by linking me to the exact issue on GitLab that discussed the problem I was having (full system freezes using Bottles installed with flatpak on Arch). This was the URL it came up with: https://gitlab.archlinux.org/archlinux/packaging/packages/linux/-/issues/110

This issue is one day old. When I looked this shit up myself I found exactly nothing useful on either DDG or Google. After this ChatGPT also provided me with the information that the LTS kernel exists and how to install it. Obviously I verified that stuff before using it, because these LLMs have their limits. Now my system works again, and figuring this out myself would've cost me hours because I had no idea what broke. Was it flatpak, Nvidia, the kernel, Wayland, Bottles, some random shit I changed in a config file 2 years ago? Well, thanks to ChatGPT I know.

They're tools, and they can provide new insights that can be very useful. Just don't expect them to always tell the truth, or to actually be human-like

-

[email protected]replied to [email protected] last edited by

Skill issue

-

[email protected]replied to [email protected] last edited by

In this example a sugar is something that is sweet.

Another example is artificial flavours still being a flavour.

Or like artificial light being in fact light.

-

[email protected]replied to [email protected] last edited by

So for something you can't objectively evaluate?

Looking at Apple's garbage generator, LLMs aren't even good at summarising.

-

[email protected]replied to [email protected] last edited by

Yeah, and you know, I always hated this: screwdrivers make really bad hammers.

-

[email protected]replied to [email protected] last edited by

That was this reality. Very briefly. Remember AI Dungeon and the other clones that were popular prior to the mass ML marketing campaigns of the last 2 years?

-

[email protected]replied to [email protected] last edited by

I asked Gemini if the Quest has an SD slot. It doesn't, but Gemini said it did. Checking the source, it was pulling info from the Vive user manual.

-

[email protected]replied to [email protected] last edited by

You rang?

-

[email protected]replied to [email protected] last edited by

This was an interesting read, thanks for sharing.

-

[email protected]replied to [email protected] last edited by

> Just don't expect them to always tell the truth, or to actually be human-like

I think the point of the post is to call out exactly that: people preaching AI as replacing humans

-

[email protected]replied to [email protected] last edited by

That's because it wasn't originally called AI. It was called an LLM. Techbros trying to sell it and articles wanting to fan the flames started calling it AI, and eventually it became common parlance. No one in the field seriously calls it AI; they generally save that term for general AI or at least narrow AI, and an LLM is neither.

-

[email protected]replied to [email protected] last edited by

Fair enough - sounds like they might not be ready for prime time though.

Oh well, at least while the bugs get ironed out we're not using them for anything important.

-

[email protected]replied to [email protected] last edited by

And apparently, they still can't get an accurate result with such a basic query.

And yet...

https://futurism.com/openai-signs-deal-us-government-nuclear-weapon-security

-

[email protected]replied to [email protected] last edited by

Here's my guess, aside from highlighted token issues:

We all know LLMs train on human-generated data. And when we ask something like "how many R's" or "how many L's" are in a given word, we don't mean to count them all. We normally mean something like "how many consecutive letters there are, so I can spell it right".

Yes, the word "strawberry" has 3 R's. But what most people are interested in is whether it is "strawberry" or "strawbery", and their "how many R's" refers to this exactly, not the entire word.
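The two readings of "how many R's" can be spelled out in a few lines. This is only a sketch of the distinction guessed at above; the helper names `total_count` and `longest_run` are made up for illustration.

```python
from itertools import groupby


def total_count(word, letter):
    """Literal reading: every occurrence of the letter in the word."""
    return word.lower().count(letter.lower())


def longest_run(word, letter):
    """Spelling reading: the longest run of the letter repeated back to back."""
    letter = letter.lower()
    runs = [len(list(group)) for ch, group in groupby(word.lower()) if ch == letter]
    return max(runs, default=0)


print(total_count("strawberry", "r"))  # 3
print(longest_run("strawberry", "r"))  # 2
print(longest_run("strawbery", "r"))   # 1
```

Under the spelling reading, "2" is the useful answer for "strawberry", even though the literal count is 3.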

-

[email protected]replied to [email protected] last edited by

But to be fair, as people we would not ask "how many Rs does strawberry have", but "with how many Rs do you spell strawberry" or "do you spell strawberry with 1 R or 2 Rs"

-

[email protected]replied to [email protected] last edited by

That happens when you do not understand what an LLM is, or what its use cases are.

This is like not being impressed by a calculator because it cannot give a word synonym.

-

[email protected]replied to [email protected] last edited by

They are not random per se. They are just statistical with just some degree of randomization.
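Roughly, "statistical with some degree of randomization" can be sketched like this. The candidate answers and their scores below are invented for illustration, and `softmax` and `sample_next` are hypothetical helper names; this is temperature sampling in general, not any particular model's actual decoding code.

```python
import math
import random


def softmax(scores, temperature=1.0):
    """Turn raw scores into probabilities; lower temperature sharpens them."""
    scaled = [s / temperature for s in scores]
    top = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_next(candidates, scores, temperature=1.0, rng=random):
    """Pick one candidate: the top score at temperature 0, a weighted draw otherwise."""
    if temperature <= 0:
        return candidates[scores.index(max(scores))]
    probs = softmax(scores, temperature)
    return rng.choices(candidates, weights=probs, k=1)[0]


# Made-up candidates and scores, purely for illustration.
candidates = ["2", "3", "4"]
scores = [2.0, 1.5, 0.1]

print(softmax(scores))                                  # probabilities summing to 1
print(sample_next(candidates, scores, temperature=0))   # always "2"
print(sample_next(candidates, scores, temperature=1.0)) # usually "2", sometimes "3" or "4"
```

The scores are fixed by the statistics; only the final draw is random, and the temperature controls how much.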

-

[email protected]replied to [email protected] last edited by

Exactly. The naming of the technology would make you assume it's intelligent. It's not.

-

[email protected]replied to [email protected] last edited by

What situations are you thinking of that require reasoning?

I've used LLMs to create software I needed but couldn't find online.

-

[email protected]replied to [email protected] last edited by

"My hammer is not well suited to cut vegetables"

There is so much to say about AI, can we move on from "it can't count letters and do math"?