OpenAI Furious DeepSeek Might Have Stolen All the Data OpenAI Stole From Us

-

I tend to think that information should be free, generally, so I would be fine with "OpenAI the non-profit" taking copyrighted data under fair use, but I don't extend that thinking to "OpenAI the for-profit company".

-

Everyone concerned about their privacy going to China: look at how easy it is to get it out of the hands of our overlord spymasters, who've already snatched it from us.

-

I mean, sure, but the issue is that the rules aren't being applied equally. The data in question isn't free for you, and it's not free for me, but it's free for OpenAI. They don't face any legal consequences, whereas prosecuted humans in the USA face an average fine of $266,000 and an average prison sentence of 25 months.

OpenAI has pirated, violated copyright, and distributed more copyrighted material than an individual human is reasonably capable of, and it faces no consequences.

https://www.splaw.us/blog/2021/02/looking-into-statistics-on-copyright-violations/

https://www.patronus.ai/blog/introducing-copyright-catcher

My use of the term "human" is awkward, but US law considers corporations people, so I tried to differentiate.

I'm in favour of free and open data, but I'm also of the opinion that the rules should apply to everyone.

-

It's a shame that you can't copyright the output of AI, isn't it?

-

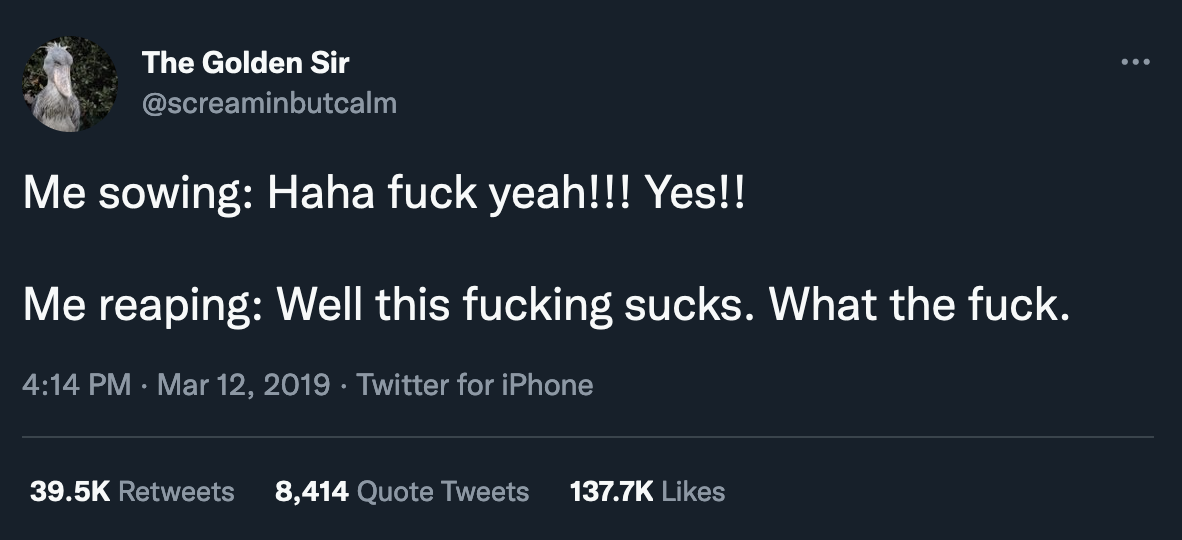

Intellectual property theft for me but not for thee!

-

Trump executive order on the copyrightability of AI output in 3...

-

It just gets better and better y'all.

https://www.theregister.com/2025/01/30/deepseek_database_left_open/

-

So? It won't have any effect on China, because last I checked, US laws only apply in the US.

-

Tale as old as capitalism.

-

Security? We don’t need no security!

-

You get a free database, and you get a free database, and you get a free database! EVERYBODY GETS A FREE DATABASE!

Oprahbees.gif

-

Many licences have different rules for redistribution, which I think is fair. The site is free to use, but it's not fair to copy all the data and build a competing site.

Of course, Wikipedia could create such a licence. I don't think they have, though.

How is the lack of infrastructure an argument for allowing something morally incorrect? We can take that argument ad absurdum by saying there are more people with guns than there are cops, so killing must be morally correct.

-

The core infrastructure issue is distinguishing between queries made by individuals and those made by programs scraping the internet for AI training data. The answer is that you can't. The way data is presented online makes such differentiation impossible.

Either all data must be placed behind a paywall, or none of it should be. Selective restriction is impractical. Copyright is not the central issue, as AI models do not claim ownership of the data they train on.

If information is freely accessible to everyone, then by definition, it is free to be viewed, queried, and utilized by any application. The copyrighted material used in AI training is not being stored verbatim—it is being learned.

In the same way, an artist drawing inspiration from Michelangelo or Raphael does not need to compensate their estates. They are not copying the work but rather learning from it and creating something new.

-

The new innovate and the old litigate.

-

@whostosay I know they're being touted as having done very much with very little, but this kind of thing should have been part of the little.

-

I don't understand your reply. Do you mind rephrasing?

-

How can people wear hoodies without zippers? I just don’t get it.

-

I disagree. Machines aren't "learning". You are anthropomorphising them. They are storing the original works, just in a very convoluted way that makes it hard to know which works were used when generating a new one.

I tend to see it as them having used "all the works" they trained on.

For the sake of argument, assume I could make an "AI" that meshes images together, but only train it on two famous works of art. It would spit out a split screen with half of the first one on the left and half of the other on the right. This would clearly be recognized as copying the original works, but it would be a "new piece of art", right?

What if we add more images? At some point it would just be a jumbled mess, but it would still consist wholly of copies of original art. It would just be harder to demonstrate.

Morally (not practically), is the sophistication of the AI in jumbling the images together really what should constitute fair use?

-

That's literally not remotely what LLMs are doing.

And they most certainly do learn in the common sense of the term. They even use neural nets which mimic the way neurons function in the brain.