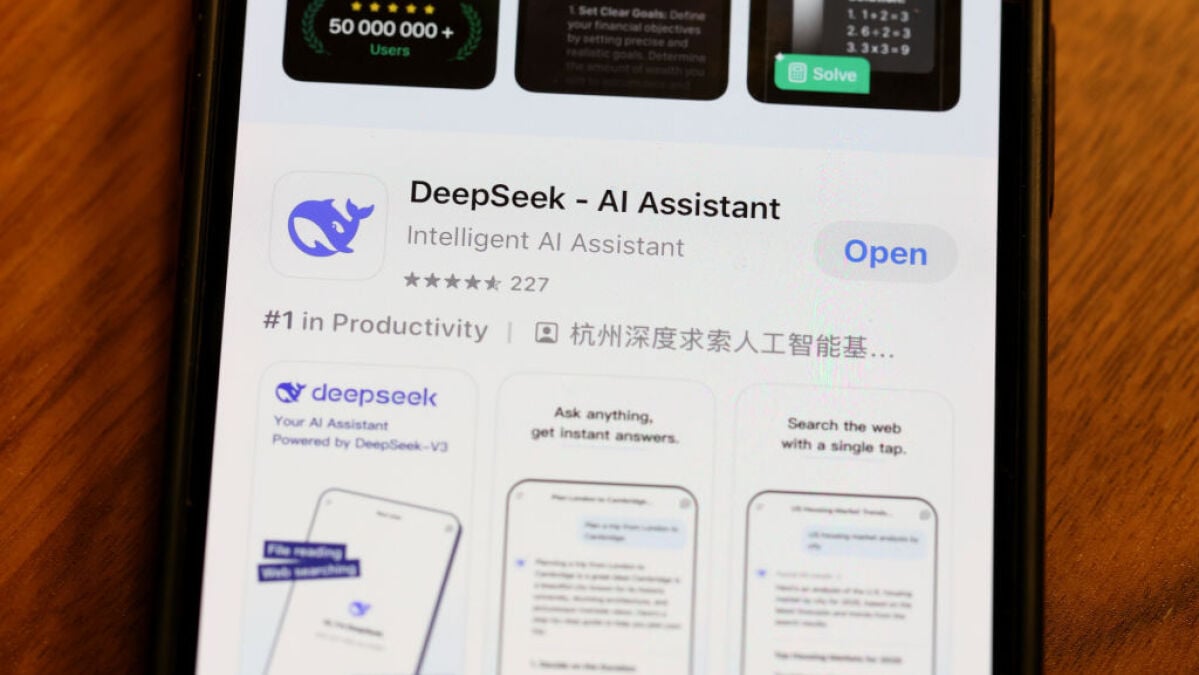

DeepSeek collects keystroke data and more, storing it on Chinese servers

-

I trust Open Source if it allows me to copy it and review it. I don't trust OpenAI products like ChatGPT.

-

no sh*t! now tell me, not that it's correct, but what can the chinese intelligence apparatus do to me vs. what the u.s. intelligence apparatus (which has been collecting intelligence about me for as long as i've been alive) can do to me?

-

Is Deepseek Open Source?

Hugging Face researchers are trying to build a more open version of DeepSeek’s AI ‘reasoning’ model

Hugging Face head of research Leandro von Werra and several company engineers have launched Open-R1, a project that seeks to build a duplicate of R1 and open source all of its components, including the data used to train it.

The engineers said they were compelled to act by DeepSeek’s “black box” release philosophy. Technically, R1 is “open” in that the model is permissively licensed, which means it can be deployed largely without restrictions. However, R1 isn’t “open source” by the widely accepted definition because some of the tools used to build it are shrouded in mystery. Like many high-flying AI companies, DeepSeek is loath to reveal its secret sauce.

-

I feel safer knowing that my data is not in a country where the company can use it against me

Where is this country that can't use your data against you?

-

that's pure ideology.

-

now we've got another refutopolis warrior.

-

What does that even mean?

-

Everyone must ask to see Xi jing jing ping pong nudes! But without mentioning Xi or nudes.

That would be a great way of poisoning their plans.

-

Extensive networks with their close ally? My pearls must be clutched!!

-

-

"We store the information we collect in secure servers located in the People's Republic of China"

Now you Americans know how we Europeans feel when Google, Amazon and Facebook store our information on American servers. Hint: The protective wall between Chinese servers and their government is about as good as the one between American servers and their government, at least for non-US citizens. The last thin veil of privacy for Europeans was ripped to shreds by Trump last week.

-

As a queer woman in the US, I currently care infinitely more what the US gov and companies track about me than what China does.

-

Exactly. I'm queer. I'm not scared of China, even if they were doing the same thing the US currently is. Because only one of those actually affects the rights I have and what I do in my day-to-day.

I do not understand how the average person does not realize that.

-

Not in the way you think. The models aren't constantly training while you interact with them; that would be far less efficient than what US AI companies have been doing.

It might be added to the training data, but a lot of training data now is apparently synthetic and generated by other models because while you might get garbage, it gives more control over the type of data and shape it takes, which makes it more efficient to train for specific domains.

-

It doesn't. They run using stuff like Ollama or other LLM tools, and all of the hobbyist ones are open source. The model itself is just the inputs, the node weights and connections, and the outputs.

LLMs, or neural nets at large, are kind of a "black box", but there's no actual code from the model that gets executed when you run them; the model is just processed by the host software based on the rules for how these networks work. The "black box" part is mostly because these are so complex that we don't actually know exactly what the model is doing or how it produces its answers, only that it works to a degree. It's a digital representation of analog brains.

People have also been doing a ton of hacking at it, retraining, and other modifications that would show anything like that if it could exist.
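
To make the "it's just weights" point concrete, here is a rough toy sketch, not how Ollama or any real runtime is implemented. The "model" is a hypothetical JSON blob of made-up numbers, and the functions `dense`, `relu` and `run_model` are names invented for this example. All the logic lives in the host code; the model file only supplies numbers to multiply and add. This holds for data-only weight formats such as GGUF or safetensors.

```python
import json

# A toy "model file": nothing but numbers (values are made up for illustration).
# Real models hold billions of weights, but they are still only data.
MODEL_JSON = """
{
  "w1": [[0.5, -1.0], [1.5, 2.0]],
  "b1": [0.0, 1.0],
  "w2": [[1.0], [-1.0]],
  "b2": [0.5]
}
"""

def dense(x, weights, bias):
    """Host-side code: input vector times weight matrix, plus bias."""
    return [
        sum(x[i] * weights[i][j] for i in range(len(x))) + bias[j]
        for j in range(len(bias))
    ]

def relu(values):
    """Host-side activation function: clamp negatives to zero."""
    return [max(0.0, v) for v in values]

def run_model(model, x):
    """The host decides what operations run; the model only provides numbers."""
    hidden = relu(dense(x, model["w1"], model["b1"]))
    return dense(hidden, model["w2"], model["b2"])

# Loading the model is plain data parsing; nothing in the file is executed.
model = json.loads(MODEL_JSON)
output = run_model(model, [1.0, 2.0])
print(output)
```

Swapping in different numbers changes the answer, but it cannot change what the host program does, which is why people can inspect, retrain and modify the weights freely.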