What are your thoughts on AI?

-

What are your thoughts on Generative Machine Learning models? Do you like them? Why? What future do you see for this technology?

What about non-generative uses for these neural networks? Do you know of any field that could use such pattern recognition technology?

I want to get a feel for what Lemmy users generally think of this technology.

-

I think AI will end truth as a concept

-

AI is a tool, and a lot of fields used it successfully before the ChatGPT craze. It's excellent for structural extraction and comprehension, and it will slowly change the way most of us work, but there's a hell of a craze right now.

-

As a tool for reducing our societal need to do hard labor, I think it is incredibly useful. As it is generally used in America, I think it is an egregious form of creative theft that threatens to replace a large range of the working class in our nation.

-

Agreed. I'm staying hopeful it'll improve most people's lives when used efficiently, though sadly at the cost of others losing their jobs.

On the other hand, wealth inequality will worsen until policies change.

-

Let me know when we have some real AI to evaluate, rather than products labeled "AI" as a marketing ploy. Anyone remember when everything had to be called "3D" because it was cool? I missed my chance to get 3D stereo cables.

-

The pushback against genAI's mostly reactionary moral panic with (stupid|misinformation|truth stretching) talking points , such's :

- AI art being inherently "plagiarising"

- AI using as much energy's crypto , the AI = crypto mindset in general

- AI art "having no soul" , .*

- "Peops use AI to do «BAD THING» , therefour AI ISZ THE DEVILLLL"

- .*

Any legitimate criticisms sadly drowned out by this bollocks , can't trust anti AI peops to actually criticise the tech . Am bitter

-

Mixed feelings. I decided not to study graphic design because I saw the writing on the wall, so I'm a little salty. I think they can be really useful for cutting back on menial tasks though. For example, I don't see why people bitch about someone using AI for their cover letter as long as they proofread it afterwards. That seems like the kind of thing you'd want to automate, unlike art and human interaction.

I think right now I just kind of hate AI because of capitalism. Tech companies are trying to make it sound like they can do so many things they really can't, and people are falling for it.

-

Most GenAI was trained on material they had no right to train on (including plenty of mine). So I'm doing my small part, and serving known AI agents an infinite maze of garbage. They can fuck right off.

Now, if we're talking about real AI (not just a server park of Markov chains in a trenchcoat), or neural networks that weren't trained on stolen data, that's a whole different story.

-

It’s a glorified crawler that is incredibly inefficient. I don’t use it because I’ve been programmed to be picky about my sources and LLMs haven’t.

-

Pretty cool technology ruined by greed. If we don't get this under control (which we probably won't), we're in for a pretty interesting age of the Internet, maybe even the last one.

-

I like to think that somewhere, researchers are working on actual AI, and the AI has already decided that it doesn't want to read bullshit on the internet.

-

If it does then we also lose the ability to even say that that's what it's done. And if that's the case then has it really done it? /ponders uselessly

-

I would probably be a bit more excited if it didn't start coming out during a time of widespread disinformation and anti-intellectualism.

I just come here to share animal facts and similar things, and the amount of reasonably realistic AI images, poorly compiled "fact sheets", and, recently, passable videos of non-real animals is very disappointing. It waters down basic facts as it blends into more and more things.

Stuff like that is the lowest level of bad in the grand scheme of things. I don't even like to think of the intentionally malicious ways we'll see it used. It's going to be the robocaller of the future, spamming not just our landlines but everything. I think I could live without it.

-

No joke, it will probably kill us all. The Doomsday Clock is citing fascism, Nazis, pandemics, global warming, nuclear war, and AI as the harbingers of our collective extinction.

The only thing I would add is that AI itself will likely speed-run and coordinate these other world-ending disasters. It's both humanity's greatest invention and our assured doom.

-

Hype bubble. Has potential, but nothing like what is promised.

-

What do you think about what are your thoughts on AI?

-

- I don’t think it’s useful for a lot of what it’s being promoted for. It exploits the common understanding of software as a process whose behavior is rigidly constrained and can be trusted to operate within those constraints.

- I think it sheds new light on human brain functioning, but it only reproduces a specific aspect of the brain, namely the salience network (i.e., the part of our brain that builds a predictive model of our environment and alerts us when the unexpected happens). This can be useful for picking up on subtle correlations our conscious brains would miss, but those who think it can be incrementally enhanced into reproducing the entire brain (or even the part of the brain we would properly call consciousness) are mistaken.

- Building on the above, I think generative models imitate the part of our subconscious that tries to “fill in the blanks” when we see or hear something ambiguous, not the part that deliberately creates meaningful things from scratch. So I don’t think it’s a real threat to the creative professions. I think they should be prevented from creating works that would be considered infringing if they were produced by humans, but not from training on copyrighted works that a human would be permitted to see or hear.

- I think the parties claiming that AI needs to be prevented from falling into “the wrong hands” are themselves the most likely parties to abuse it. I think it’s safest when it’s open, accessible, and unconcentrated.

-

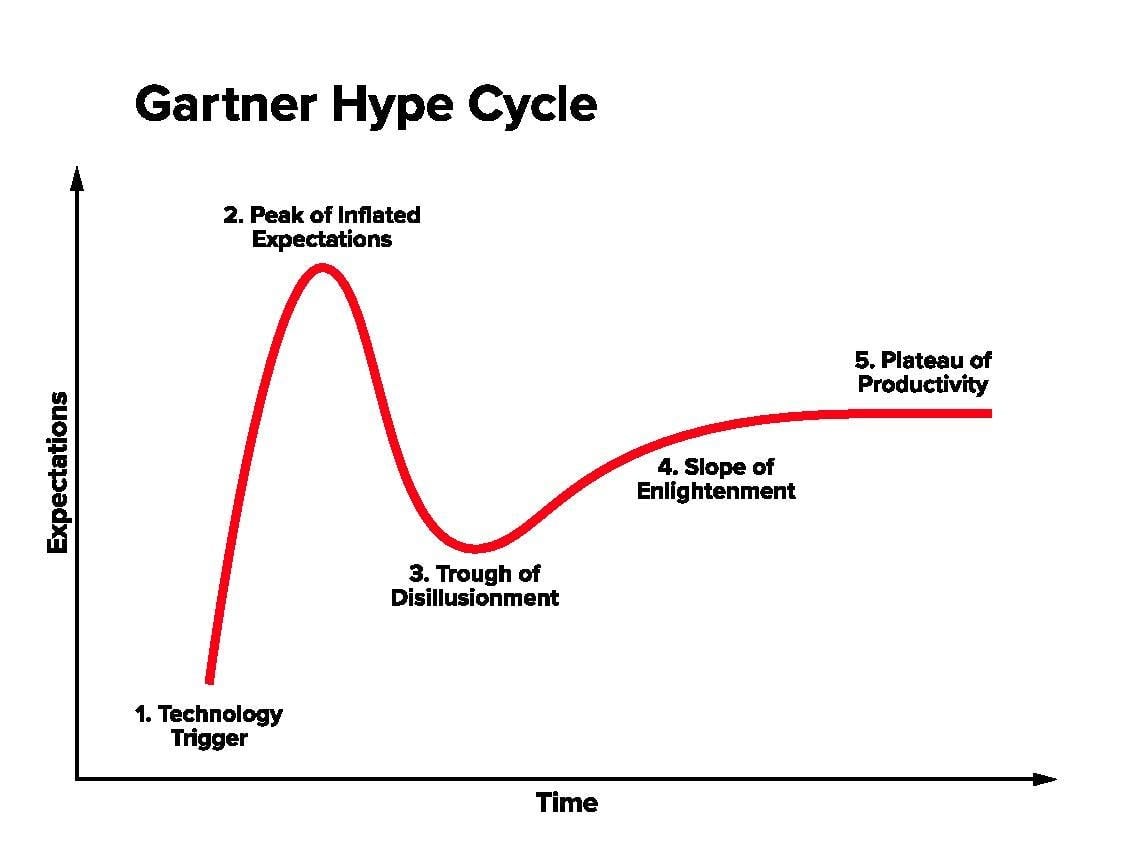

The trough of disillusionment!

-

It's a tool with some interesting capabilities. It's very much in a hype phase right now, but legitimate uses are also emerging. Automatically generating subtitles is one good example. We also don't know where this tech will plateau. Right now a lot of advances are happening at a rapid pace, and it's hard to say how far people can push this tech before we start hitting diminishing returns.

For non-generative uses, using neural networks to look for cancer tumors is a great use case https://pmc.ncbi.nlm.nih.gov/articles/PMC9904903/

Another use case is using neural nets to monitor infrastructure, the way China is doing with its high-speed rail network https://interestingengineering.com/transportation/china-now-using-ai-to-manage-worlds-largest-high-speed-railway-system

DeepSeek R1 appears to be good at analyzing code and suggesting potential optimizations, so it's possible that these tools could work as profilers https://simonwillison.net/2025/Jan/27/llamacpp-pr/

I do think it's likely that LLMs will become part of more complex systems that combine different techniques in complementary ways. For example, neurosymbolics seems like a very promising approach. It uses deep neural nets to parse and classify noisy input data, and then uses a symbolic logic engine to operate on the classified data internally. This addresses a key limitation of LLMs: they can't reliably reason or explain how they arrive at a solution.
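A toy sketch of the neural-then-symbolic split described above, under loudly stated assumptions: the "neural" stage here is a hypothetical stand-in (a nearest-centroid classifier with made-up centroids, not a trained deep network), and the symbolic stage is a simple forward-chaining rule engine. The labels, rules, and function names (`perceive`, `infer`) are invented for illustration and don't come from any particular neurosymbolic system. What it tries to show is the division of labor: fuzzy input gets mapped to discrete symbols, and every conclusion drawn from those symbols carries a record of the rule that produced it.

```python
# Hypothetical stand-in for the neural stage: a nearest-centroid classifier.
# A real neurosymbolic system would use a trained deep network; these
# centroids are made up purely for illustration.
CENTROIDS = {
    "cat": (0.9, 0.1),
    "dog": (0.1, 0.9),
}


def perceive(features):
    """Map a noisy feature vector to the label of the nearest centroid."""
    def dist2(centroid):
        return sum((f - c) ** 2 for f, c in zip(features, centroid))
    return min(CENTROIDS, key=lambda label: dist2(CENTROIDS[label]))


# Symbolic stage: hypothetical rules of the form (premises, conclusion).
RULES = [
    ({"is_cat"}, "is_mammal"),
    ({"is_dog"}, "is_mammal"),
    ({"is_mammal"}, "is_animal"),
]


def infer(facts):
    """Forward-chain until no rule adds a new fact, recording why each fact holds."""
    known = set(facts)
    why = {fact: "perceived" for fact in facts}
    changed = True
    while changed:
        changed = False
        for premises, conclusion in RULES:
            if premises <= known and conclusion not in known:
                known.add(conclusion)
                why[conclusion] = "from " + ", ".join(sorted(premises))
                changed = True
    return known, why


label = perceive((0.8, 0.2))  # noisy input that sits closest to "cat"
facts, explanation = infer({"is_" + label})
for fact, reason in explanation.items():
    print(fact, "<-", reason)
```

Running it prints each derived fact with its justification (`is_cat <- perceived`, `is_mammal <- from is_cat`, `is_animal <- from is_mammal`). The trace is the point: the fuzzy step can still be wrong, but everything downstream of it is deterministic and explainable.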

Personally, I generally feel positively about this tech and I think it will have a lot of interesting uses down the road.