We need to stop pretending AI is intelligent

-

That headline is a straw man, and the article really argues about general AI (GAI), which would also have consciousness.

The current state of AI is definitely intelligent, but it's not GAI.

Bullshit headline.

Today's AI is Clippy on steroids. It's not intelligent or creative. You can't feed it physics and astronomy books without the equation for C and tell it to create the equation for C. It's fancy autocorrect, and it's a waste of compute and energy.

-

We are constantly fed a version of AI that looks, sounds and acts suspiciously like us. It speaks in polished sentences, mimics emotions, expresses curiosity, claims to feel compassion, even dabbles in what it calls creativity.

But what we call AI today is nothing more than a statistical machine: a digital parrot regurgitating patterns mined from oceans of human data (the situation hasn’t changed much since it was discussed here five years ago). When it writes an answer to a question, it literally just guesses which letter and word will come next in a sequence – based on the data it’s been trained on.

This means AI has no understanding. No consciousness. No knowledge in any real, human sense. Just pure probability-driven, engineered brilliance — nothing more, and nothing less.

So why is a real “thinking” AI likely impossible? Because it’s bodiless. It has no senses, no flesh, no nerves, no pain, no pleasure. It doesn’t hunger, desire or fear. And because there is no cognition — not a shred — there’s a fundamental gap between the data it consumes (data born out of human feelings and experience) and what it can do with them.

Philosopher David Chalmers calls the mysterious mechanism underlying the relationship between our physical body and consciousness the “hard problem of consciousness”. Eminent scientists have recently hypothesised that consciousness actually emerges from the integration of internal, mental states with sensory representations (such as changes in heart rate, sweating and much more).

Given the paramount importance of the human senses and emotion for consciousness to “happen”, there is a profound and probably irreconcilable disconnect between general AI, the machine, and consciousness, a human phenomenon.

And yet, paradoxically, it is far more intelligent than those people who think it is intelligent.
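The article's description of "guessing which word will come next based on the data it's been trained on" can be illustrated with a toy model. The sketch below is an assumed, minimal illustration (the names `train_bigrams`, `predict_next` and `generate` are hypothetical, not from the article): it counts which word follows which in a tiny made-up corpus and always picks the most frequent follower. Real LLMs use large neural networks over subword tokens and usually sample from a probability distribution rather than consulting a table of word counts, so treat this as a cartoon of the idea, not how any actual model is built.

```python
from collections import Counter, defaultdict

def train_bigrams(text):
    """Count, for each word, how often each other word follows it."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows

def predict_next(follows, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    candidates = follows.get(word.lower())
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]

def generate(follows, start, length):
    """Greedily chain next-word predictions, starting from `start`."""
    out = [start.lower()]
    for _ in range(length):
        nxt = predict_next(follows, out[-1])
        if nxt is None:
            break
        out.append(nxt)
    return " ".join(out)

# Hypothetical toy corpus, made up for this illustration.
corpus = "the cat sat on the mat and the cat ate the fish"
model = train_bigrams(corpus)

print(predict_next(model, "the"))  # cat ("cat" follows "the" twice; "mat" and "fish" once each)
print(generate(model, "the", 4))   # the cat sat on the
```

On ties (after "cat", both "sat" and "ate" appear once), `most_common` returns the follower seen first, so the output is fully determined by the order of the training text.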

-

If only there were a word, literally defined as:

Made by humans, especially in imitation of something natural.

*throws hands up* At least we tried.

-


The thing is, AI is compression of intelligence but not intelligence itself. That's the part that confuses people.

AI is the ability to put anything describable into a compressed zip.

-

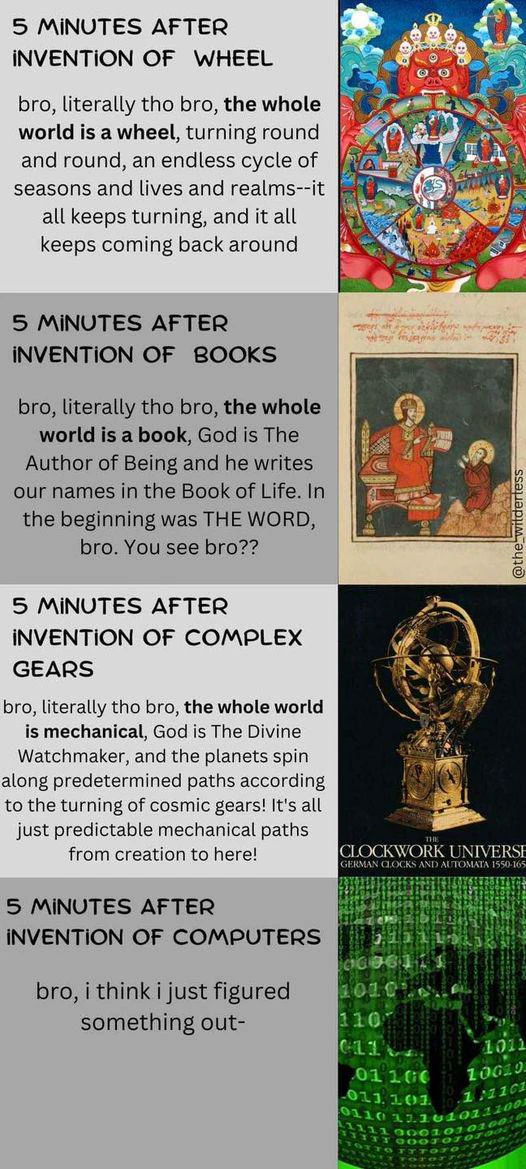

If you were born during the first industrial revolution, then you'd think the mind was a complicated machine. People seem to always anthropomorphize inventions of the era.

This is great

-


Another article written by a person who doesn't realize that human intelligence is 100% about predicting sequences of things (including words), and therefore has only the most nebulous idea of how to tell the difference between an LLM and a person.

The result is a lot of uninformed flailing and some pithy statements. You can predict how the article is going to go just from the headline because it's the same article you already read countless times.

So why is a real “thinking” AI likely impossible? Because it’s bodiless. It has no senses, no flesh, no nerves, no pain, no pleasure.

May as well have written "Durrrrrrrrrrrrrrr brghlgbhfblrghl." It didn't even occur to the author to ask, "what is thinking? what is reasoning?" The point was to write another junk article to get ad views. There is nothing of substance in it.

-

Citation Needed (by Molly White) also frequently bashes AI.

I like her stuff because, no matter how you feel about crypto, AI, or other big tech, you can never fault her reporting. She steers clear of any subjective accusations or prognostication.

It’s all “ABC person claimed XYZ thing on such and such date, and then 24 hours later submitted a report to the FTC claiming the exact opposite. They later bought $5 million worth of Trumpcoin, and two weeks later the FTC announced they were dropping the lawsuit.”

I'm subscribed to her Web3 is Going Great RSS. She coded the website in straight HTML, according to a podcast that I listen to. She's great.

I didn't know she had a podcast. I just added it to my backup playlist. If it's as good as I hope it is, it'll get moved to the primary playlist. Thanks!

-


Amen! When I say the same things this author is saying I get, "It'S NoT StAtIsTiCs! LeArN aBoUt AI bEfOrE yOu CoMmEnT, dUmBaSs!"

-

I’ve taken to calling it Automated Inference

You know what? When you look at it this way, it's much easier to get less pissed.

-

I think you're misunderstanding the point the author is making. He is arguing that even the current state is not intelligent, it is merely a fancy autocorrect, it doesn't know or understand anything about the prompts it receives. As the author stated, it can only guess at the next statistically most likely piece of information based on the data that has been fed into it. That's not intelligence.

it doesn’t know or understand

But that's not what intelligence is, that's what consciousness is.

Intelligence is not understanding shit; it's the ability to, for instance, solve a problem, so a frigging calculator has a tiny degree of intelligence, but not enough for us to call it AI.

There is simply zero doubt an AI is intelligent; claiming otherwise just shows people don't know the difference between intelligence and consciousness.

Passing an exam is a form of intelligence.

Can a good AI pass a basic exam?

YES.

Does passing an exam require consciousness?

NO.

Because an exam tests abilities of intelligence, not level of consciousness.

it can only guess at the next statistically most likely piece of information based on the data that has been fed into it. That’s not intelligence.

Except we do the exact same thing! Based on prior experience (learning) we choose what we find to be the most likely answer. And that is indeed intelligence.

Current AI does not have the reasoning abilities we have yet, but it is not completely without them, and it's a subject that is currently being worked on and improved. So current AI is actually a pretty high form of intelligence, and it can sometimes outcompete average humans in certain areas.

-

I think you're misunderstanding the point the author is making. He is arguing that even the current state is not intelligent, it is merely a fancy autocorrect, it doesn't know or understand anything about the prompts it receives. As the author stated, it can only guess at the next statistically most likely piece of information based on the data that has been fed into it. That's not intelligence.

Predicting sequences of things is foundational to intelligence. In fact, it is the whole point.

-

I've never been fooled by their claims of it being intelligent.

It's basically an overly complicated series of if/then statements that try to guess the next series of inputs.

I love this resource: https://thebullshitmachines.com/ (see lesson 1 in particular).

In a series of five- to ten-minute lessons, we will explain what these machines are, how they work, and how to thrive in a world where they are everywhere.

You will learn when these systems can save you a lot of time and effort. You will learn when they are likely to steer you wrong. And you will discover how to see through the hype to tell the difference.

Also, Anthropic (ironically) has some nice paper(s) about the limits of "reasoning" in AI.

-


So many confident takes on AI by people who've never opened a book on the nature of sentience, free will, intelligence, philosophy of mind, brain vs mind, etc.

There are hundreds of serious volumes on these, not to mention the plethora of casual pop science books with some of these basic thought experiments and hypotheses.

Seems like more and more incredibly shallow articles on AI are appearing every day, which is to be expected with the rapid decline of professional journalism.

It's a bit jarring and frankly offensive to be lectured 'at' by people who are obviously on the first step of their journey into this space.

-

Another article written by a person who doesn't realize that human intelligence is 100% about predicting sequences of things (including words), and therefore has only the most nebulous idea of how to tell the difference between an LLM and a person.

The result is a lot of uninformed flailing and some pithy statements. You can predict how the article is going to go just from the headline because it's the same article you already read countless times.

So why is a real “thinking” AI likely impossible? Because it’s bodiless. It has no senses, no flesh, no nerves, no pain, no pleasure.

May as well have written "Durrrrrrrrrrrrrrr brghlgbhfblrghl." It didn't even occur to the author to ask, "what is thinking? what is reasoning?" The point was to write another junk article to get ad views. There is nothing of substance in it.

Wow. So when you typed that comment you were just predicting which words would be normal in this situation? Interesting delusion, but that's not how people think. We apply reasoning processes to the situation, formulate ideas about it, and then create a series of words that express our ideas. But our ideas exist on their own, even if we never end up putting them into words or actions. That's how organic intelligence differs from a Large Language Model.

-

How is outputting things based on things it has learned any different to what humans do?

Humans are not probabilistic, predictive chat models. If you think reasoning is taking a series of inputs and then echoing the most common of those as output, then you mustn't reason well or often.

If you were born during the first industrial revolution, then you'd think the mind was a complicated machine. People seem to always anthropomorphize inventions of the era.

When you typed this response, you were acting as a probabilistic, predictive chat model. You predicted the most likely effective sequence of words to convey ideas. You did this using very different circuitry, but the underlying strategy was the same.

-

Wow. So when you typed that comment you were just predicting which words would be normal in this situation? Interesting delusion, but that's not how people think. We apply reasoning processes to the situation, formulate ideas about it, and then create a series of words that express our ideas. But our ideas exist on their own, even if we never end up putting them into words or actions. That's how organic intelligence differs from a Large Language Model.

Yes, and that is precisely what you have done in your response.

You saw something you disagreed with, as did I. You felt an impulse to argue about it, as did I. You predicted the right series of words to convey the argument, and then typed them, as did I.

There is no deep thought to what either of us has done here. We have in fact both performed as little rigorous thought as necessary, instead relying on experience from seeing other people do the same thing, because that is vastly more efficient than doing a full philosophical disassembly of every last thing we converse about.

That disassembly is expensive. Not only does it take time, but it puts us at risk of having to reevaluate notions that we're comfortable with, and would rather not revisit. I look at what you've written, and I see no sign of a mind that is in a state suitable for that. Your words are defensive ("delusion") rather than curious, so how can you have a discussion that is intellectual, rather than merely pretending to be?

-

No, it’s really not at all the same. Humans don’t think according to the probabilities of what is the likely best next word.

How could you have a conversation about anything without the ability to predict the word most likely to be best?

-

Yes, and that is precisely what you have done in your response.

You saw something you disagreed with, as did I. You felt an impulse to argue about it, as did I. You predicted the right series of words to convey the argument, and then typed them, as did I.

There is no deep thought to what either of us has done here. We have in fact both performed as little rigorous thought as necessary, instead relying on experience from seeing other people do the same thing, because that is vastly more efficient than doing a full philosophical disassembly of every last thing we converse about.

That disassembly is expensive. Not only does it take time, but it puts us at risk of having to reevaluate notions that we're comfortable with, and would rather not revisit. I look at what you've written, and I see no sign of a mind that is in a state suitable for that. Your words are defensive ("delusion") rather than curious, so how can you have a discussion that is intellectual, rather than merely pretending to be?

No, I didn't start by predicting a series of words; I already had thoughts on the subject, which existed completely outside of this thread.

By the way, I've been working on a scenario for my D&D campaign where there's an evil queen who rules a murky empire to the East. There's a race of uber-intelligent ogres her mages created, who then revolted. She managed to exile the ogres to a small valley once they reached a sort of power stalemate. She made a treaty with them whereby she leaves them alone and they stay in their little valley and don't oppose her, or aid anyone who opposes her. I figured these ogres, who are generally known as "Bane Ogres" because of an offhand comment the queen once made about them being the bane of her existence, would somehow convey information to the player characters about a key to her destruction, but because of their treaty they have to do it without actually doing it. Not sure how to work that out yet.

Anyway, the point of this is that the completely out-of-context information I just gave you is in no way related to what we were talking about and wasn't inspired by constructing a series of relevant words like you're proposing. I also enjoy designing and printing 3D objects and programming little circuit thingys called ESP32 to do home automation. I didn't get interested in that because of this thread, and I can't imagine how an LLM-like mental process would prompt me to tell you about it, or why I would think you would be interested in knowing anything about my hobbies.

Anyway, nice talking to you. Cute theory you got there about brain function, tho; I can tell you know people inside out.

-

The thing is, AI is compression of intelligence but not intelligence itself. That's the part that confuses people.

AI is the ability to put anything describable into a compressed zip.

I think you meant compression. This is exactly how I prefer to describe it, except I also mention lossy compression for those who would understand what that means.
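The compression analogy has a concrete counterpart: a model that predicts the next word well can be used to encode text in fewer bits, because an ideal entropy coder spends about -log2 p bits on a symbol the model assigns probability p. The sketch below is an assumed illustration (hypothetical function names, a made-up toy corpus, and simple bigram counts standing in for a real model): it scores a text by that ideal code length and compares it with a baseline that treats every vocabulary word as equally likely. It scores the model on the same text it was trained on, so the saving is optimistic, and it ignores the cost of storing the count table itself.

```python
import math
from collections import Counter, defaultdict

def count_bigrams(words):
    """Map each word to a Counter of the words that follow it."""
    follows = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        follows[prev][nxt] += 1
    return follows

def code_length_bits(follows, words):
    """Ideal code length in bits for words[1:], each coded given the previous word.

    Sums -log2 p(next | prev), with p taken from the bigram counts.
    Assumes every transition in `words` was seen in training (true here,
    because we score the training text itself).
    """
    total = 0.0
    for prev, nxt in zip(words, words[1:]):
        counts = follows[prev]
        p = counts[nxt] / sum(counts.values())
        total += -math.log2(p)
    return total

# Hypothetical toy corpus, made up for this illustration.
words = "the cat sat on the mat and the cat ate the fish".split()
follows = count_bigrams(words)

predicted = code_length_bits(follows, words)
# Baseline: every vocabulary word equally likely at every step.
uniform = (len(words) - 1) * math.log2(len(set(words)))

print(predicted)  # 8.0
print(uniform)    # 33.0
```

The count table is also lossy in the commenter's sense: it keeps word-pair statistics but not the full word order, so in general it cannot reproduce the original text verbatim.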

-


Intelligence is not understanding shit; it's the ability to, for instance, solve a problem, so a frigging calculator has a tiny degree of intelligence, but not enough for us to call it AI.

I have to disagree that a calculator has intelligence. The calculator has the mathematical functions programmed into it, but it couldn't use those on its own. The intelligence in your example is that of the operator of the calculator and the programmer who designed the calculator's software.

Can a good AI pass a basic exam?

YES

I agree with you that the ability to pass an exam isn't a great test for this situation. In my opinion, the major factor that would point to current state AI not being intelligent is that it doesn't know why a given answer is correct, beyond that it is statistically likely to be correct.

Except we do the exact same thing! Based on prior experience (learning) we choose what we find to be the most likely answer.

Again, I think this points to the idea that knowing why an answer is correct is important. A person can know something by rote, which is what current AI does, but that doesn't mean that person knows why that is the correct answer. The ability to extrapolate from existing knowledge and apply that to other situations that may not seem directly applicable is an important aspect of intelligence.

As an example, image generation AI knows that a lot of the artwork it has been fed contains watermarks or artist signatures, so it would often include things that look like those in the generated piece. It knew that it was statistically likely for that object to be there in a piece of art, but not why it was there, so it could not make a decision not to include them. Maybe that issue has been fixed in image generation AI by now; it has been a long time since I've messed around with that kind of tool. But even if it has been fixed, it is not because the AI knew it was wrong and self-corrected; it is because a programmer had to fix a bug in the code that the AI model had no awareness of.