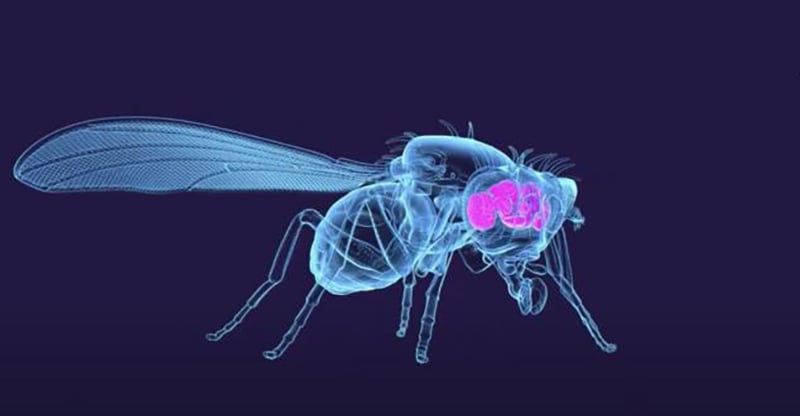

Complete wiring map of an adult fruit fly brain

-

[email protected]replied to [email protected] last edited by

The story there is fairly simple. Basically:

- Ubuntu - got an install disk on campus or from a friend, don't recall; my sound and wifi broke when upgrading major releases, so:

- Fedora - it's what my university used in the CS labs, so I figured I'd try it out; release upgrades took forever (>1 hr), so:

- Arch - coworker at my internship recommended I try it out, so I gave it a shot and loved it; stayed there for about 5 years

- openSUSE Leap - FreeBSD didn't support Docker properly and I didn't trust Arch on a server, so I went looking; holy grail was something stable for servers and rolling for desktop, and Debian Testing (we used Debian stable at work) wasn't quite new enough and Sid scared me, so I tried out Leap on a VPS; Leap worked out well and I actually liked Yast, so I figured I'd try out Leap on my laptop; I liked it, but decided I wanted fresher packages, so:

- Tumbleweed - I upgraded to Tumbleweed and didn't have issues for over a year (broke less than Arch), so I converted my desktop Arch install to Tumbleweed, and I've been happy for >5 years now (longest I've been on any distro, I think)

I wanted the same system on my desktop and server, and I really like rolling releases on my desktop. openSUSE was pretty much the only one that actually offered both. They were ballsy enough to officially support `btrfs` in production, so I figured switching my NAS over to it wouldn't be a terrible idea, especially since I only needed RAID mirror so the write hole on raid 5/6 wouldn't be an issue. The first time an update went south on my desktop (Nvidia, go figure), `snapper rollback` saved me a bunch of time, and that's what sold me on it. I since replaced my GPU w/ AMD and I haven't had a single issue w/ updates since, whereas on Arch I'd have 3-4 manual interventions/year unrelated to Nvidia.

And yeah, my kids haven't used my computers for anything other than Steam, YouTube, and some random web games. But they're technically on Linux and have successfully navigated both GNOME (used for a bit before KDE had proper Wayland support) and KDE, so they're more seasoned than some new Linux users.

-

[email protected]replied to [email protected] last edited by

I’m actually stunned that a cell-level map, the connectome, is even possible. Are we saying that every fruit fly has all these individual brain cells in this very particular configuration? I always assumed that major brain regions might be the same from individual to individual, but not down to the level of individual neurons. Am I reading something wrong, or are individuals really as similar as this?

-

[email protected]replied to [email protected] last edited by

Welcome to Microsoft 476! With new features such as fly! That's right! A real simulated fly will help you. Introducing "the wall": it's where the fly lives! It will stay out of your way unless you call it. Set up your own buzzing noises! It will remind you in perfect stereo fly sounds about your incoming meeting!

-

[email protected]replied to [email protected] last edited by

I think they already did that with a nematode.

-

[email protected]replied to [email protected] last edited by

So to answer what I assume is going to be the most common question here, this is a circuit diagram, but every neuron is like its own little programmable integrated circuit with a small amount of internal memory and those aren't mapped here. So this is an excellent model to explore how neurons connect to each other and get insight into cognition and the function of the brain but it is far from something that can simply be simulated on a computer.

-

[email protected]replied to [email protected] last edited by

Awesome answer. Thank you for taking the time. I've enjoyed getting to know this part of your story.

-

[email protected]replied to [email protected] last edited by

"That I don't we can map yet"

Seems like your brain failed to map writing correctly.

-

[email protected]replied to [email protected] last edited by

Yeah, any time! It sounds like we had a relatively similar entry into *nix. Have a fantastic day.

-

[email protected]replied to [email protected] last edited by

Proviso: I have not read up on this particular experiment.

Fruit flies, as used in labs, are not like their wild cousins. They have been bred to be exceptionally consistent, since this makes X-Y experiments easier. If you take genetically identical eggs and raise them in effectively identical conditions, you get almost the same wiring.

There will still be areas of variability, but a lot will be conserved. This is likely an "average" wiring. Once you have even an approximate baseline, you can vary things and see how the wiring adapted.

-

[email protected]replied to [email protected] last edited by

OpenWorm has not mapped all the neurons yet. But soon.

-

[email protected]replied to [email protected] last edited by

I said "inspired by" and not "exact digital replicas".

In classical MLP networks, a neuron is modeled as an activation function applied to its inputs. The connections between neurons are "learned": they are basically weights that determine the influence of one neuron's output on the next neuron's input. This is indeed inspired by biological neural networks.
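To make that concrete, here is a minimal sketch of such a network's forward pass in plain Python. The sigmoid activation, the function names, and the weight values are all just assumptions for illustration; in a real network the weights would come out of training rather than being written by hand.

```python
import math


def sigmoid(z):
    """Squash a weighted sum into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def neuron(inputs, weights, bias):
    """One artificial neuron: weighted sum of its inputs, then an activation."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return sigmoid(z)


def layer(inputs, weight_rows, biases):
    """A layer is a list of neurons that all read the same inputs."""
    return [neuron(inputs, w, b) for w, b in zip(weight_rows, biases)]


def mlp_forward(inputs, layers):
    """Feed the outputs of each layer into the next one."""
    activations = inputs
    for weight_rows, biases in layers:
        activations = layer(activations, weight_rows, biases)
    return activations


# Hypothetical 2-2-1 network; these weights are made up, not learned.
network = [
    ([[0.5, -0.5], [0.3, 0.8]], [0.0, 0.1]),  # hidden layer: 2 neurons
    ([[1.0, -1.0]], [0.0]),                   # output layer: 1 neuron
]
output = mlp_forward([1.0, 0.0], network)
```

Each weight only scales how strongly one neuron's output feeds the next neuron, which is the "learned connection" part. Nothing here models the internal state of a biological neuron.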

Interestingly, in some computer vision deep learning architectures, we have found structures after the training procedure which are even similar to how human vision works.

There are a bunch of different artificial neural network types, most – if not all – inspired by biology. I wouldn't be so bold to reduce them in that absurd manner you did.

-

[email protected]replied to [email protected] last edited by

> I said “inspired by” and not “exact digital replicas”

It's not, though. It's best described as inspired by a big pachinko machine with weighted pegs.

It is almost in no way "inspired by". That's just propaganda being put out to make AI more palatable and personable.

> There are a bunch of different artificial neural network types, most – if not all – inspired by biology. I wouldn’t be so bold to reduce them in that absurd manner you did.

I would be, because it's factual.

If it were "inspired by", it would be able to tell the difference between running over a person and avoiding a car, for example. It wouldn't start hallucinating when asked simple questions, because a biological brain acts in congruence with its inputs.

That happens because of a web of interconnections spanning multiple spheres, with two major ones acting as checks on each other. That is nothing like any current AI model.

In current models, each token has a limited number of interconnects, and always to a neighbor node. That is nothing like a biological neuronal network.

-

[email protected]replied to [email protected] last edited by

> It's not, though. It's best described as inspired by a big pachinko machine with weighted pegs.
>
> It is almost in no way "inspired by". That's just propaganda being put out to make AI more palatable and personable.

Get your facts straight.

The artificial neuron model that the multi-layer perceptron builds on was first proposed in 1943 and was indeed inspired by biological networks: https://doi.org/10.1007/BF02478259

You can be sure this wasn't to make it "more palatable", wtf.
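For reference, the 1943 paper linked above (McCulloch and Pitts) is usually summarized as modeling a neuron as a binary threshold unit: it fires if enough excitatory inputs are active and no inhibitory input is. A rough Python sketch of that idea follows; the function names and the gate examples are my own illustration, not notation from the paper.

```python
def mp_neuron(excitatory, inhibitory, threshold):
    """Binary threshold unit in the style of McCulloch and Pitts.

    All inputs are 0 or 1. Any active inhibitory input blocks firing;
    otherwise the unit fires when the number of active excitatory
    inputs reaches the threshold.
    """
    if any(inhibitory):
        return 0
    return 1 if sum(excitatory) >= threshold else 0


def and_gate(a, b):
    # Both inputs must be active to reach a threshold of 2.
    return mp_neuron([a, b], [], threshold=2)


def or_gate(a, b):
    # A single active input is enough to reach a threshold of 1.
    return mp_neuron([a, b], [], threshold=1)
```

Even this very reduced unit can express basic logic, which is roughly the point that paper made about networks of neurons.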

Regarding the rest of your reply:

You seem to be expecting a fully functioning digital brain as a replica of the human brain. That's not what current ANNs in modern AI methods do.

Although they are at their core inspired by nature (which is why I originally said that advancements in brain research can aid the development of more advanced AI models), they work structurally differently. ANNs, for example, are just simplified mathematical models of biological neural nets. I've described their basic properties before. Further characteristics, like neurogenesis, transmission speeds influenced by myelinated or unmyelinated axons, and different types and subnets of neurons, such as inhibitory ones, are not included.

There is quite a large difference between simplified models which are "inspired by" nature and exact digital replicas. It seems you are not accepting this.