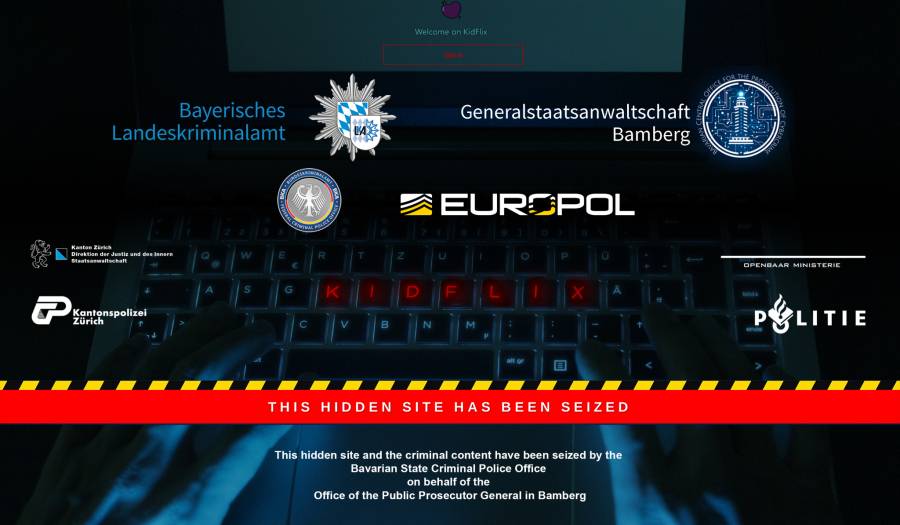

European police say KidFlix, "one of the largest pedophile platforms in the world," busted in joint operation.

-

Every now and again I am reminded of my sentiment that the introduction of "media" onto the Internet is a net harm. Maybe 256 dithered color photos like you'd see in Encarta 95 and that's the maximum extent of what should be allowed. There's just so much abuse from this kind of shit... despicable.

I think it just shows all the hideousness of humanity in all its glory, in a way that we have never confronted before. It shatters the illusion that humanity has grown out of its barbaric ways.

-

Every now and again I am reminded of my sentiment that the introduction of "media" onto the Internet is a net harm. Maybe 256 dithered color photos like you'd see in Encarta 95 and that's the maximum extent of what should be allowed. There's just so much abuse from this kind of shit... despicable.

Let’s get rid of the printing press because it can be used for smut. /s

-

Every now and again I am reminded of my sentiment that the introduction of "media" onto the Internet is a net harm. Maybe 256 dithered color photos like you'd see in Encarta 95 and that's the maximum extent of what should be allowed. There's just so much abuse from this kind of shit... despicable.

Raping kids has unfortunately been a thing since long before the internet. You could legally bang a 13 year old right up to the 1800s and in some places you still can.

As recently as the 1980s people would openly advocate for it to be legal, and remove the age of consent altogether. They'd get it in magazines from countries where it was still legal.

I suspect it's far less prevalent now than it's ever been. It's now pretty much universally seen as unacceptable, which is a good start.

-

Every now and again I am reminded of my sentiment that the introduction of "media" onto the Internet is a net harm. Maybe 256 dithered color photos like you'd see in Encarta 95 and that's the maximum extent of what should be allowed. There's just so much abuse from this kind of shit... despicable.

It is very easy to feel disillusioned with the world, but it is important to remember that there are still good people all around willing to fight the good fight. It is also important to remember that technology is not inherently bad. It is a neutral tool that people can use for either good or bad purposes.

-

Let’s get rid of the printing press because it can be used for smut. /s

great pointless strawman. nice contribution.

-

great pointless strawman. nice contribution.

It’s satire of your suggestion that we hold back progress but I guess it went over your head.

-

Raping kids has unfortunately been a thing since long before the internet. You could legally bang a 13 year old right up to the 1800s and in some places you still can.

As recently as the 1980s people would openly advocate for it to be legal, and remove the age of consent altogether. They'd get it in magazines from countries where it was still legal.

I suspect it's far less prevalent now than it's ever been. It's now pretty much universally seen as unacceptable, which is a good start.

The youngest Playboy model, Eva Ionesco, was only 12 years old at the time of the photo shoot, and that was back in the late 1970s... It ended up being used as evidence against Eva’s mother (who was also the photographer), and she ended up losing custody of Eva as a result. The mother had started taking erotic photos (ugh) of Eva when she was only like 5 or 6 years old, under the guise of “art”. It wasn’t until the Playboy shoot that authorities started digging into the mother’s portfolio.

But also worth noting that the mother still holds copyright over the photos, and has refused to remove/redact/recall photos at Eva’s request. The police have confiscated hundreds of photos for being blatant CSAM, but the mother has been uncooperative in a full recall. Eva has sued the mother numerous times to try and get the copyright turned over, which would allow her to initiate the recall instead.

-

Here’s a reminder that you can submit photos of your hotel room to law enforcement, to assist in tracking down CSAM producers. The vast majority of sex trafficking media is produced in hotels. So being able to match furniture, bedspreads, carpet patterns, wallpaper, curtains, etc in the background to a specific hotel helps investigators narrow down when and where it was produced.

-

Honestly, if the existing victims have to deal with a few more people masturbating to the existing video material and in exchange it leads to fewer future victims it might be worth the trade-off but it is certainly not an easy choice to make.

It doesn't though.

The most effective way to shut these forums down is to register bot accounts scraping links to the clearnet direct-download sites hosting the material and then reporting every single one.

If everything posted to these forums is deleted within a couple of days, their popularity would falter. And victims much prefer having their footage deleted than letting it stay up for years to catch a handful of site admins.

Frankly, I couldn't care less about punishing the people hosting these sites. It's an endless game of cat and mouse and will never be fast enough to meaningfully slow down the spread of CSAM.

Also, these sites don't produce CSAM themselves. They just spread it - most of the CSAM exists already and isn't made specifically for distribution.

-

Here’s a reminder that you can submit photos of your hotel room to law enforcement, to assist in tracking down CSAM producers. The vast majority of sex trafficking media is produced in hotels. So being able to match furniture, bedspreads, carpet patterns, wallpaper, curtains, etc in the background to a specific hotel helps investigators narrow down when and where it was produced.

Thank you for posting this.

-

1.8 million users and they only caught 1000?

I imagine it's easier to catch uploaders than viewers.

It's also probably more impactful to go for the big "power producers" simultaneously and quickly before word gets out and people start locking things down.

-

Does it feel odd to anyone else that a platform for something this universally condemned in any jurisdiction can operate for 4 years, with a catchy name clearly thought up by a marketing person, its own payment system and nearly six figure number of videos? I mean even if we assume that some of those 4 years were intentional to allow law enforcement to catch as many perpetrators as possible this feels too similar to fully legal operations in scope.

It definitely seems weird how easy it is to stumble upon CP online, and how open people are about sharing it, with no effort made, in many instances, to hide what they're doing. I've often wondered how much of the stuff is spread by pedo rings and how much is shared by cops trying to see how many people they can catch with it.

-

Wow, with such a daring name as well. Fucking disgusting.

I once saw a list of defederated lemmy instances. In most cases, and I mean like 95% of them, the reason for the defederation was pretty much in the instance name. CP everywhere. Humanity is a freaking mistake.

-

Here’s a reminder that you can submit photos of your hotel room to law enforcement, to assist in tracking down CSAM producers. The vast majority of sex trafficking media is produced in hotels. So being able to match furniture, bedspreads, carpet patterns, wallpaper, curtains, etc in the background to a specific hotel helps investigators narrow down when and where it was produced.

Wouldn't this be so much better if we got hoteliers on board instead of individuals?

-

I once saw a list of defederated lemmy instances. In most cases, and I mean like 95% of them, the reason for the defederation was pretty much in the instance name. CP everywhere. Humanity is a freaking mistake.

Is it not encouraging that it is ostracised and removed from normal people? There are horrible parts of everything in nature. Life is good despite those people, and because of the rest combatting their shittiness.

-

It doesn't though.

The most effective way to shut these forums down is to register bot accounts scraping links to the clearnet direct-download sites hosting the material and then reporting every single one.

If everything posted to these forums is deleted within a couple of days, their popularity would falter. And victims much prefer having their footage deleted than letting it stay up for years to catch a handful of site admins.

Frankly, I couldn't care less about punishing the people hosting these sites. It's an endless game of cat and mouse and will never be fast enough to meaningfully slow down the spread of CSAM.

Also, these sites don't produce CSAM themselves. They just spread it - most of the CSAM exists already and isn't made specifically for distribution.

Who said anything about punishing the people hosting the sites? I was talking about punishing the people uploading and producing the content. The ones doing the part that is orders of magnitude worse than anything else about this.

-

It definitely seems weird how easy it is to stumble upon CP online, and how open people are about sharing it, with no effort made, in many instances, to hide what they're doing. I've often wondered how much of the stuff is spread by pedo rings and how much is shared by cops trying to see how many people they can catch with it.

If you have stumbled on CP online in the last 10 years, you're either really unlucky or trawling some dark waters. This ain't 2006. The internet has largely been cleaned up.

-

Every now and again I am reminded of my sentiment that the introduction of "media" onto the Internet is a net harm. Maybe 256 dithered color photos like you'd see in Encarta 95 and that's the maximum extent of what should be allowed. There's just so much abuse from this kind of shit... despicable.

With that logic, I might as well throw away my computer and phone and go full Uncle Ted.

-

If that's the actual splash screen that pops up when you try to access it (no, I'm not going to go to it and check, I don't want to be on a new and exciting list) then kudos to the person who put that together. Shit goes hard. So do all the agency logos.

-

Massive congratulations to Europol and its partners in taking this shit down and putting these perverts away. However, they shouldn't rest on their laurels. The objective now is to ensure that the distribution of this disgusting material is stopped outright and that no further children are harmed.