The vibecoders are becoming sentient

-

Why not both?

With a pinch of PFAS for good measure?

-

Nah, it's the microplastics.

Microplastics are stored in the balls.

-

No idea, but I am not sure your family member is qualified. I would estimate that a coding LLM can code as well as a fresh CS grad. The big advantage that fresh grads have is that after you give them a piece of advice once or twice, they stop making that same mistake.

What's this based on? Have you met a fresh CS graduate and compared them to an LLM? Does it not vary person to person? Or fuck it, LLM to LLM? Calling them not qualified seems harsh when it's based on sod all.

-

Interesting. Curious, for a point of comparison, how The Onion reads to you.

(Only a mediocre point of comparison I fear, but)

That's a bit difficult because I already go into anything from The Onion knowing it's intended to be humorous/satirical.

What I lack in ability to recognize satire or outright deception in posts written online, I make up for by reading comment threads: seeing people accuse things of being fake, seeing people defend them as true, seeing people point out that the entire intention of a website is satire, seeing people who had a joke go over their heads get it explained... relying on the collective hivemind to help me out where I am deficient. It's not a perfect solution at all, especially since people can judge wrong. I bet some "omg so fake" threads were actually real, and some astroturf-type posts, written to influence others without any real experience behind them, got through as real.

-

I think the key to good LLM usage is a light touch. Let the LLM know what you want, maybe refine it if you see where the result went wrong. But if you find yourself deep in conversation trying to explain to the LLM why it's not getting your idea, you're going to wind up with a bad product. Just abandon it and try to do the thing yourself or get someone who knows what you want.

They get confused easily, and despite what is being pitched, they don't really learn very well. So if they get something wrong the first time they aren't going to figure it out after another hour or two.

In my experience, they're better at poking holes in code than writing it, whether that's greenfield or brownfield.

I've tried to get it to make sections of changes for me, and it feels very productive, but when I time myself I find I probably spend more time correcting the LLM's work than I would have spent just writing it myself.

But if you ask it to judge a refactor, then you might actually get one or two good points. You just have to be really careful to double-check its assertions if you're unfamiliar with anything, because it will lead you to some real boners if you just follow it blindly.

-

Not me, I'd rather work on a clean code base without any slop, even if it pays a little less. QoL > TC

I'm not above slinging a little spaghetti if it pays the bills.

-

No idea, but I am not sure your family member is qualified. I would estimate that a coding LLM can code as well as a fresh CS grad. The big advantage that fresh grads have is that after you give them a piece of advice once or twice, they stop making that same mistake.

Where is this coming from? I don't think an LLM can code at the level of a recent CS grad unless it's piloted by a CS grad.

Maybe you've had much better luck than me, but coding LLMs seem largely useless without prior coding knowledge.

-

I think the key to good LLM usage is a light touch. Let the LLM know what you want, maybe refine it if you see where the result went wrong. But if you find yourself deep in conversation trying to explain to the LLM why it's not getting your idea, you're going to wind up with a bad product. Just abandon it and try to do the thing yourself or get someone who knows what you want.

They get confused easily, and despite what is being pitched, they don't really learn very well. So if they get something wrong the first time they aren't going to figure it out after another hour or two.

But if you find yourself deep in conversation trying to explain to the LLM why it’s not getting your idea, you’re going to wind up with a bad product.

Yes. Kind of. It takes (a couple of days of) experience with LLMs to learn that an LLM failing to understand your corrections means you delete it immediately and try another one. The only OpenAI LLM I tried was their 120B open-source release. It insisted that it was correct in its stupidity. That's worse than LLMs that forget the corrections from 3 prompts ago, though I've also learned that forgetting is grounds for deleting them rather than holding out hope that they'll be useful.

-

He needs at least a decade of industry experience. That helps me find jobs.

It would be nice if software development were a real profession and people could get that experience properly.

-

In my experience, they're better at poking holes in code than writing it, whether that's greenfield or brownfield.

I've tried to get it to make sections of changes for me, and it feels very productive, but when I time myself I find I probably spend more time correcting the LLM's work than I would have spent just writing it myself.

But if you ask it to judge a refactor, then you might actually get one or two good points. You just have to be really careful to double-check its assertions if you're unfamiliar with anything, because it will lead you to some real boners if you just follow it blindly.

At work we've got CodeRabbit set up on our GitHub, and it has found bugs that I wrote. Sometimes the thing drives me insane with pointless comments, but just today it found a spot that would have become a big bug in prod in about 3 months.

-

True that. But I often find that the search engine is not very good at giving me a solution if I don't know the name of a problem and only have my spaghetti thoughts on what the thing is supposed to do, and translating spaghetti thoughts into something a search engine can find is where the chatbot excels.

Part of your trouble there is that Google is absolute dog shit these days. It used to be like magic; simple search terms would find you exactly what you were looking for in the first handful of results. Now you're lucky to find it on the second page.

-

You read "new projects" into it, actually. And the whole unit test thing was just an example demonstrating how AI use has to be tightly bounded to be arguably useful.

-

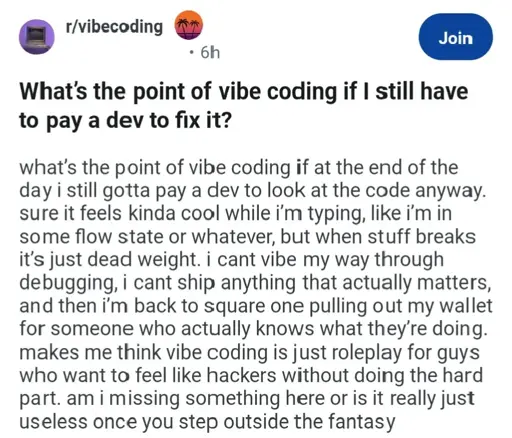

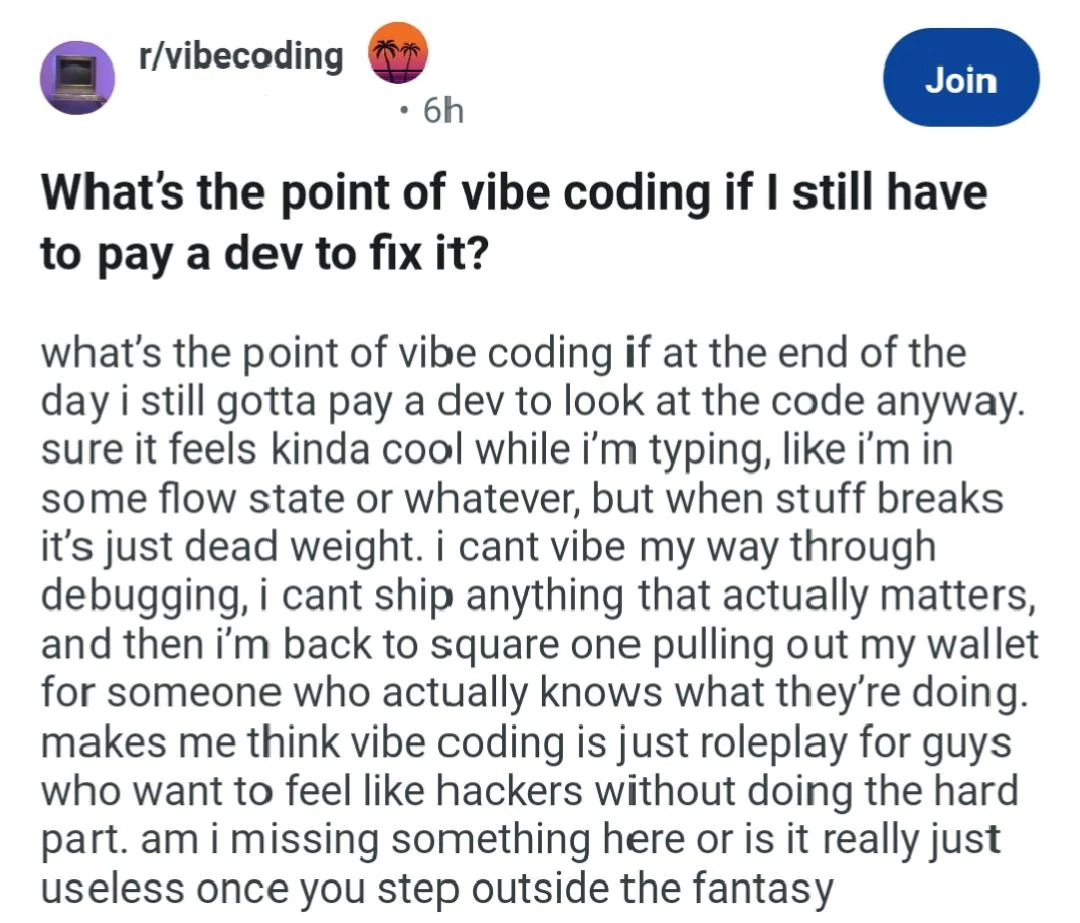

It is not useless. You should absolutely continue to vibes code. Don't let a professional get involved at the ground floor. Don't inhouse a professional staff.

Please continue paying me $200/hr for months on end debugging your Baby's First Web App tier coding project long after anyone else can salvage it.

And don't forget to tell your investors how smart you are by Vibes Coding! That's the most important part. Secure! That! Series! B! Go public! Get yourself a billion dollar valuation on these projects!

Keep me in the good wine and the nice car! I love vibes coding.

Also, don’t waste money on doctor visits. Let Bing diagnose your problems for pennies on the dollar. Be smart! Don’t let some doctor tell you what to do.

IANAL so: /s

-

It would be nice if software development were a real profession and people could get that experience properly.

It was. Wall St is destroying it, along with everything else in its insatiable drive for more profit. Everything must be sacrificed to the golden idol.

-

I'm sure it's fun to see a series of text prompts turn into an app, but if you don't understand the code and can't fix it when it doesn't work without starting over, you're going to have a bad time. Sure, it takes time and effort to learn to program, but it pays off in the end.

Yeah, mostly agreed. In my experience so far, an experienced dev who's really putting time into their setup can greatly accelerate their output with these tools, while an inexperienced dev will end up taking way longer (and understanding less) than if they had worked normally.

-

This is in no way new. 20 years ago I used to refer to some job postings as H1Bait because they'd have requirements that were physically impossible (like having 5 years of experience with a piece of software less than 2 years old). They did it specifically so they could claim they couldn't find anyone qualified (because anyone claiming to be qualified was definitely lying), to justify an H1B, for which they would suddenly be way less thorough about checking qualifications.

It's so much worse than it was. AIs have absolutely murdered entry-level positions

-

I'm not a programmer by any stretch, but what LLMs have been great for is getting my homelab set up. I've even done some custom UI stuff for work that talks to open-source backend things we run. I think I've actually learned a fair bit from the experience, and if I had to start over I'd be able to do way, way more on my own than I could when I first started. It's not perfect, and as others have mentioned, I have broken things and had to start projects completely from scratch, but the second time through I knew where the pitfalls were, and I'm getting better at knowing what to ask for and telling it what to avoid.

I'm not a programmer, but I'm not trying to ship anything either. In general I'm a pretty anti-AI guy, but for the uninitiated who want to get started with a homelab, I'd say it's damn near instrumental for a quick turnaround and a fairly decent educational tool.

-

I think storyboards are a great example of how it could be used properly.

Storyboards are a great way for someone to communicate "this is how I want it to look" in a rough way. But, a storyboard will never show up in the final movie (except maybe fun clips during the credits or something). It's something that helps you on your way, but along the way 100% of it is replaced.

Similarly, the way I think of generative AI is that it's basically a really good props department.

In the past, if a props / graphics / FX department had to generate some text on a computer screen that looked like someone was Hacking the Planet they'd need to come up with something that looked completely realistic. But, it would either be something hand-crafted, or they'd just go grab some open-source file and spew it out on the screen. What generative AI does is that it digests vast amounts of data to be able to come up with something that looks realistic for the prompt it was given. For something like a hacking scene, an LLM can probably generate something that's actually much better than what the humans would make given the time and effort required. A hacking scene that a computer security professional would think is realistic is normally way beyond the required scope. But, an LLM can probably do one that is actually plausible for a computer security professional because of what that LLM has been trained on. But, it's still a prop. If there are any IP addresses or email addresses in the LLM-generated output they may or may not work. And, for a movie prop, it might actually be worse if they do work.

When you're asking an AI something like "What does a selection sort algorithm look like in Rust?", what you're really doing is asking "What does a realistic answer to that question look like?" You're basically asking for a prop.

Now, some props can be extremely realistic looking. Think of the cockpit of an airplane in a serious aviation drama. The props people will probably either build a very realistic cockpit, or maybe even buy one from a junkyard and fix it up. The prop will be realistic enough that even a pilot will look at it and say that it's correctly laid out and accurate. Similarly, if you ask an LLM to produce code for you, sometimes it will give you something that is realistic enough that it actually works.

Having said that, fundamentally, there's a difference between "What is the answer to this question?" and "What would a realistic answer to this question look like?" And that's the fundamental flaw of LLMs. Answering a question requires understanding the question. Simulating an answer just requires pattern matching.

See, I agree with everything up to the end. There you are getting into the philosophy of cognition. How do humans answer a question? I would argue that, for many people, the answer for most topics would be "I am repeating what I was taught/learned/read." An argument could be made that your description of responding with "What would a realistic answer to this question look like?" is fundamentally symmetric with "This is what I was taught." Both are regurgitating information fed to them by someone who presumably (hopefully) actually had a firm understanding of the material themselves. As an example: we are all taught that 2+2=4, but most people are not taught WHY 2+2=4. Even fewer are taught that 2+2=11 in base 3, or how to convert bases at all. So do people "know" that 2+2=4, or are they just repeating the answer that they were told was correct?
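To make the base-3 point concrete: 2 + 2 = 4, and 4 = 1 * 3 + 1, so written in base 3 it is "11". A minimal Python sketch of base conversion by repeated division (the `to_base` helper is hypothetical, written here only for illustration, and assumes a non-negative integer and a base between 2 and 10):

```python
def to_base(n: int, base: int) -> str:
    """Return the digits of a non-negative integer n written in `base` (2 to 10)."""
    if not 2 <= base <= 10:
        raise ValueError("base must be between 2 and 10")
    if n < 0:
        raise ValueError("n must be non-negative")
    if n == 0:
        return "0"
    digits = []
    # Each division peels off the least significant digit as the remainder.
    while n > 0:
        n, remainder = divmod(n, base)
        digits.append(str(remainder))
    # Digits were collected least significant first, so reverse them.
    return "".join(reversed(digits))


total = 2 + 2
print(to_base(total, 10))  # 4
print(to_base(total, 3))   # 11
print(to_base(total, 2))   # 100
```

Python's built-in `int("11", 3)` parses the digits back and returns 4, which is a quick way to sanity-check the conversion.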

I am not saying that LLMs understand or know anything, I am saying that most humans don't either for most topics.

-

I'm not a programmer by any stretch, but what LLMs have been great for is getting my homelab set up. I've even done some custom UI stuff for work that talks to open-source backend things we run. I think I've actually learned a fair bit from the experience, and if I had to start over I'd be able to do way, way more on my own than I could when I first started. It's not perfect, and as others have mentioned, I have broken things and had to start projects completely from scratch, but the second time through I knew where the pitfalls were, and I'm getting better at knowing what to ask for and telling it what to avoid.

I'm not a programmer, but I'm not trying to ship anything either. In general I'm a pretty anti-AI guy, but for the uninitiated who want to get started with a homelab, I'd say it's damn near instrumental for a quick turnaround and a fairly decent educational tool.

This is the correct way to do it: use it, see if it works for you, and try to understand what happened.

It's not that different from using examples or Stack Overflow. With time you get better, but you need to have that last critical-thinking step. Otherwise you will never learn and will just copy-paste, hoping it works.