Is It Just Me?

-

And Google would never lie about how much energy a prompt costs, right?

Especially not since they have a vested interest in having people use their AI products, right?

That’s not really Google’s style when it comes to data center whitepapers. They did, however, omit all information about training energy use.

-

You may not like this, but I still don't care.

Mm, I see, you're one of those people...

-

For instance, there are soft science, social interaction areas where AI is doing wonders.

Specifically, in the field of law, now that lawyers have learned not to rely on AI for citations, they are instead offloading hundreds of thousands or millions of pages of documents that they were never actually going to read, and getting salient results from allowing an AI to scan through them to pull out interesting talking points.

Pulling out these interesting talking points and fact checking them and, you know, A/B testing the ways to interact and bring them in front of the jury with an AI has made it so that many law firms are getting thousands or millions of dollars more on a lawsuit than they anticipated.

And you may be against American law for all of its frivolous plaintiffs' lawsuits or something, but each of these outcomes is decided by human beings, and there are real, lifelong damages that are being addressed by these lawsuits, or at least compensated in some way.

The more money these plaintiffs get for the injuries that they have to live with for the rest of their lives, the better for them, and AI made the difference.

Not that lawyers are fundamentally incapable or uncaring. I don't know who the fuck counts as a super lawyer nowadays, but for every madman lawyer on the planet, there are 999 who are working hard and just do not have the raw plot-armor deus ex machina dropping everything directly into their lap that they would need to operate at that level.

And yes, if you want to be particular, a human being should have done the work. A human being can do the work. A human being is actually being paid to do the work. But when you can offload grunt work to a computer and get usable results from it that improve a human's life, that's the whole fucking reason why we invented computers in the first place.

I'd like to hear more about this because I'm fairly tech savvy and interested in legal nonsense (not American) and haven't heard of it. Obviously, I'll look it up but if you have a particularly good source I'd be grateful.

I have lawyer friends. I've seen snippets of their work lives. It continues to baffle me how much relies on people who don't have the waking hours or physical capabilities to consume and collate that much information somehow understanding it well enough to present a true, comprehensive argument on a deadline.

-

It's important to remember that there's a lot of money being put into A.I. and therefore a lot of propaganda about it.

This happened with a lot of shitty new tech, and A.I. is one of the biggest examples of this I've known about.

All I can write is that, if you know what kind of tech you want and it's satisfactory, just stick to that. That's what I do.

Don't let ads get to you.

First post on a Lemmy server, by the way. Hello!

-

It's our own version of The Matrix

When you order your Matrix from Wish...

-

Ahahah. Not their style to lie and betray people for profit? Get out!

-

Maybe not an individual prompt, but with how many prompts are made for stupid stuff every day, it will stack up to quite a lot of CO2 in the long run.

I'm not denying that training AI demands way more energy, but that doesn't really matter, since manufacturing, training and millions of people using AI all add up to the same bleak picture long term.

Considering how the discussion about environmental protection has only just started to be taken seriously and here they come and dump this newest bomb on humanity, it is absolutely devastating that AI has been allowed to run rampant everywhere.

According to this article, 500.000 AI prompts amount to the same CO2 emissions as a round-trip flight from London to New York.

I don't know how many times a day 500.000 AI prompts are reached, but I'm sure it is more than twice or even thrice. As time moves on it will be much more than that. It will probably outdo the number of actual flights between London and New York in a day. Every day. It will probably also catch up to whatever energy cost it took to train the AI in the first place and surpass it.

Because you know. People need their memes and fake movies and AI therapist chats and meal suggestions and history lessons and a couple of iterations on that book report they can't be fucked to write. One person can easily end up prompting hundreds of times in a day without even thinking about it. And if everybody starts using AI to think for them at work and at home, it'll end up being many, many, many flights back and forth between London and New York every day.

I had the discussion regarding generated CO2 a while ago here, and with the numbers my discussion partner gave me, the calculation said that the yearly usage of ChatGPT is approx. 0.0017% of our CO2 reduction during the covid lockdowns - chatbots are not what is killing the climate. What IS killing the climate has not changed since the green movement started: cars, planes, construction (mainly concrete production) and meat.

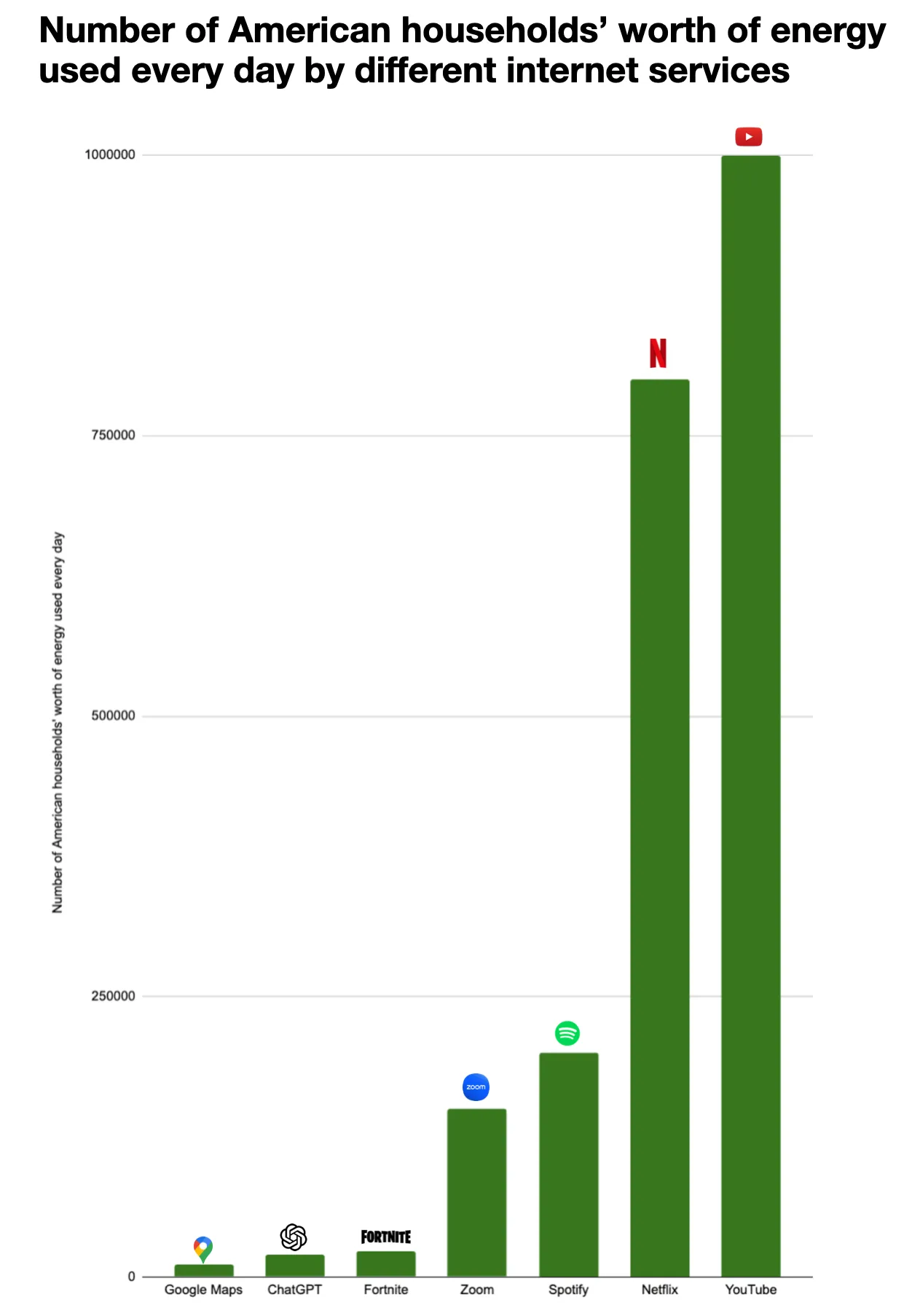

The exact energy costs are not published, but 3 Wh/request for ChatGPT-4 is the upper limit from what we know (and that's in line with the approx. power consumption of my graphics card when running an LLM). Since Google uses it for every search, they will probably have optimized for their use case, and some sources cite 0.3 Wh/request for chatbots - it depends on what model you use. The training is a one-time cost, and for ChatGPT-4 it raises the maximum cost/request to 4 Wh. That's nothing. The combined worldwide energy usage of ChatGPT is equivalent to about 20k American households. This is for one of the most downloaded apps on iPhone and Android - comparing this with the massive usage makes clear that saving here is not effective for anyone interested in reducing climate impact, or you have to start scolding everyone who runs their microwave 10 seconds too long.
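If you want to sanity-check those figures yourself, here is a rough sketch of the arithmetic. It works backwards from the "20k American households" claim to the number of requests per day that claim would imply at each per-request cost mentioned above. The household figure of 10,500 kWh per year is my own assumption (a ballpark for average US residential consumption), not a number from the post, so treat the result as an order-of-magnitude estimate only.

```python
# Back-of-the-envelope check, not an authoritative calculation.
# Figures taken from the post: 20k households, 0.3 / 3 / 4 Wh per request.
# ASSUMPTION (not from the post): an average US household uses
# roughly 10,500 kWh of electricity per year.

HOUSEHOLDS = 20_000
KWH_PER_HOUSEHOLD_YEAR = 10_500  # assumed ballpark, adjust as needed
DAYS_PER_YEAR = 365

# Per-request energy figures quoted in the post, in watt-hours.
WH_PER_REQUEST = {
    "optimized chatbot": 0.3,
    "ChatGPT-4 upper limit": 3.0,
    "ChatGPT-4 incl. training": 4.0,
}


def implied_requests_per_day(wh_per_request: float) -> float:
    """Requests per day that would add up to the households' yearly energy."""
    total_wh_per_year = HOUSEHOLDS * KWH_PER_HOUSEHOLD_YEAR * 1000  # kWh -> Wh
    return total_wh_per_year / DAYS_PER_YEAR / wh_per_request


if __name__ == "__main__":
    for label, wh in WH_PER_REQUEST.items():
        millions = implied_requests_per_day(wh) / 1e6
        print(f"{label}: ~{millions:,.0f} million requests/day")
```

Under that household assumption, the 3 Wh figure works out to somewhere around 190 million requests per day. Change `KWH_PER_HOUSEHOLD_YEAR` if you have a better number.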

Even compared to other online activities that use data centers, ChatGPT's power usage is small change. If you use ChatGPT instead of watching Netflix you actually save energy!

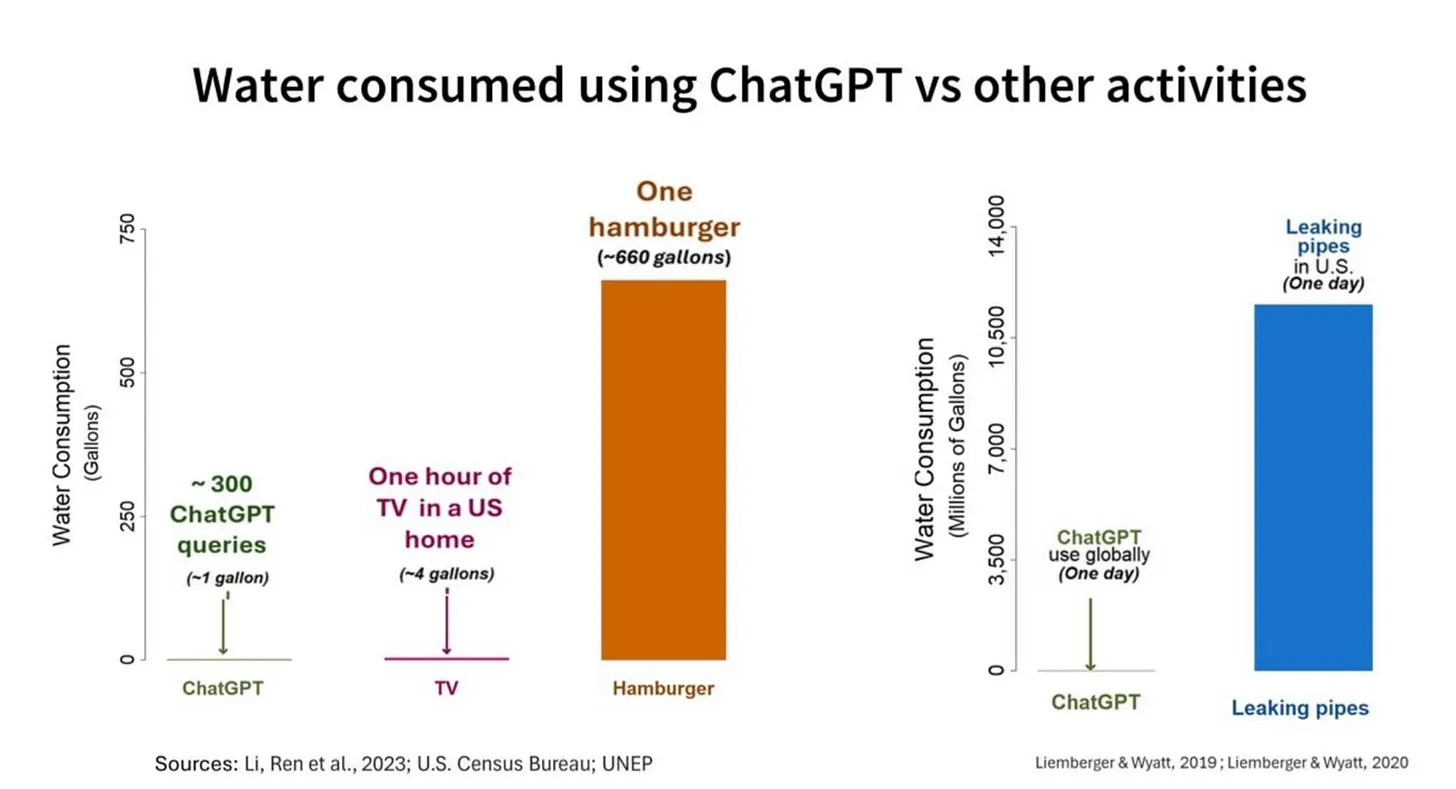

Water is about the same, although the positioning of data centers in the US sucks. The used water doesn't disappear tho - it's mostly returned to the rivers or is evaporated. The water usage in the US is 58,000,000,000,000 gallons (220 trillion liters) of water per year. A ChatGPT request uses between 10 and 25 ml of water for cooling. A hamburger uses about 600 gallons of water. 2 trillion liters are lost due to aging infrastructure. If you want to reduce water usage, go vegan or fix water pipes.
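For anyone who wants to redo the water comparison, here is a minimal sketch using only the numbers quoted above (58 trillion gallons per year, 600 gallons per hamburger, 10 to 25 ml per request). The only thing I added is the gallon-to-liter conversion factor for US gallons; the function names are just for illustration.

```python
# Rough comparison using the figures quoted in the post.
# The conversion factor is the standard US liquid gallon.

LITERS_PER_US_GALLON = 3.785411784

US_WATER_GALLONS_PER_YEAR = 58e12  # from the post
HAMBURGER_GALLONS = 600            # from the post
REQUEST_ML_RANGE = (10, 25)        # from the post, ml of cooling water


def gallons_to_liters(gallons: float) -> float:
    """Convert US gallons to liters."""
    return gallons * LITERS_PER_US_GALLON


def requests_per_hamburger(ml_per_request: float) -> float:
    """How many chatbot requests use as much water as one hamburger."""
    hamburger_ml = gallons_to_liters(HAMBURGER_GALLONS) * 1000  # liters -> ml
    return hamburger_ml / ml_per_request


if __name__ == "__main__":
    total_liters = gallons_to_liters(US_WATER_GALLONS_PER_YEAR)
    print(f"US water usage: ~{total_liters / 1e12:.0f} trillion liters/year")
    for ml in REQUEST_ML_RANGE:
        print(f"at {ml} ml/request: one hamburger = "
              f"~{requests_per_hamburger(ml):,.0f} requests")
```

With those inputs, one hamburger comes out to roughly 90,000 to 230,000 requests' worth of cooling water, depending on which end of the 10 to 25 ml range you pick.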

Read up here!

-

One thing I don't get with people fearing AI is when something adds AI and suddenly it's a privacy nightmare. Yeah, in some cases it does make it worse, but in most cases, what was stopping the company from taking your data anyway? LLMs are just algorithms that process data and output something; they don't inherently give firms any additional data. Now, in some cases that means data that previously wasn't sent to a server, or that shouldn't be, is now being sent. But I've seen people complain about privacy so often in cases where I don't understand why AI is the tipping point. If you don't trust the company not to store your data when using AI, why trust it in the first place?

-

It did help me make a basic script and add it to task scheduler so it runs and fixes my broken WiFi card so I don't have to manually do it. (or better said, helped me avoid asking arrogant people that feel smug when I tell them I haven't opened a command prompt in ten years)

-

It's more about them feeding it into an LLM which then decides to incorporate it in an answer to some random person.

-

I feel like I would have been able to do that easily 10 years ago, because search engines worked, and the 'web wasn't full of garbage. I reckon I'd have near zero chance now.

-

There was a quote about how Silicon Valley isn't a fortune teller betting on the future. It's a group of rich assholes that have decided what the future would look like and are pushing technology that will make that future a reality.

Welcome to Lemmy!

-

I do tech support for a few different law firms in my area, and AI companies that offer document ingest and processing are crawling out of every crack.

Some of them are pretty good: they take the hallucination issues into account and offer direct links to the quotes that they're pulling. It's an area where things are developing, and soon it should be easier for a lawyer to take on more cases or to charge less per case, because being a lawyer becomes so much easier.

-

Early computers were massive and consumed a lot of electricity. They were heavy, prone to failure, and wildly expensive.

We learned to use transistors and integrated circuits to make them smaller and more affordable. We researched how to manufacture them, how to power them, and how to improve their abilities.

Critics at the time said they were a waste of time and money, and that we should stop sinking resources into them.

-

Even if you accept that LLMs are a necessary, but ultimately disappointing, step on the way to a much more useful technology like AGI, there's still a very good argument to be made that we should stop investing in it now.

I'm not talking about the "AI is going to enslave humanity" theories either. We already have human overlords who have no issues doing exactly that, and giving them the technology to make most of us redundant, at the precise moment when human populations are higher than they've ever been, is a recipe for disaster that could make what's happening in Gaza seem like a relaxing vacation. They will have absolutely no problem condemning billions to untold suffering and death if it means they can make a few more dollars.

We need to figure our shit out as a species before we birth that kind of technology or else we're all going to suffer immensely.

-

Probably right, but to be fair it’s “been” quantum computing since the 90’s.

AI has been AI since the 50s.

-

Making machines think for you, badly, is a lot different than having machines do computation with controlled inputs and outputs. LLMs are a dead end in the hunt for AGI, and they actively make us stupider and are killing the planet. There's a lot to fucking hate on.

I do think that generative AI can have its uses, but LLMs are the most cursed thing. The fact that the word guesser has emergent properties is interesting, but we definitely shouldn't be using those properties like this.

-

Even if we had an AGI that gave us the steps to fix the world and prevent mass extinction, and generated a solution for the US to stop all wars, it wouldn't make a difference, because those in charge simply wouldn't listen to it. In fact, generative AI already gives you answers on peace and on slowing down climate change that are based on real academic work, and those in charge ignore both the AI they claim to trust and the scholars who spend their whole lives finding solutions.

-

I must be one of the few remaining people who have never typed, and will never type, a sentence into an AI prompt.

I despise that garbage.